AI-powered search & chat for Data / Computer Science Students

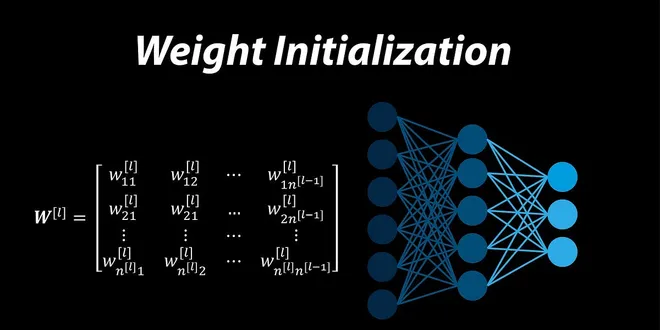

Weights Initialization in Neural Network

Weight initialization helps a lot in optimization for deep learning. Without it, SGD and its variants would be much slower and tricky to converge to the optimal weights. The aim of weight…

Read more at Analytics Vidhya

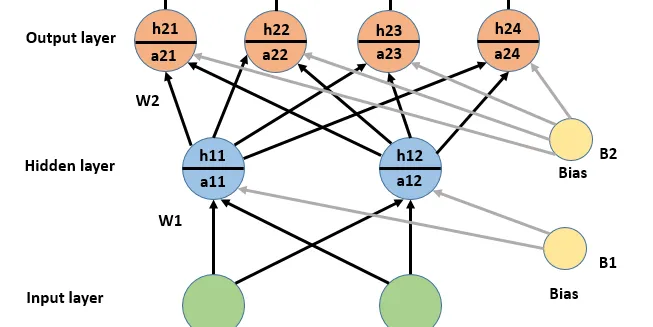

Weight Initialization in Deep Neural Networks

Weight and bias are the adjustable parameters of a neural network, and during the training phase, they are changed using the gradient descent algorithm to minimize the cost function of the network…

Read more at Towards Data Science

Weight Initialization Techniques in Neural Networks

Building even a simple neural network can be a confusing task, and on top of that, tuning it to get a better result is extremely tedious. But the first step that comes into consideration while building a…

Read more at Towards Data Science

Weight Initialization for Deep Learning Neural Networks

Last Updated on February 8, 2021. Weight initialization is an important design choice when developing deep learning neural network models. Historically, weight initialization involved using small rando...

Read more at Machine Learning Mastery

Weight Initialization for Neural Networks — Does it matter?

Weight initialization techniques change the behavior of the artificial neural network model over the course of its training. Hence we need to understand how the choice of weight (kernel) initializatio...

Read more at Towards Data Science

Weight Initializer in Deep Learning

In Neural Networks, it is very necessary to understand how the weights are updated to help the optimizer find the parameters that are best suited for the data to land on to the global minima. A lot…

Read more at Analytics Vidhya

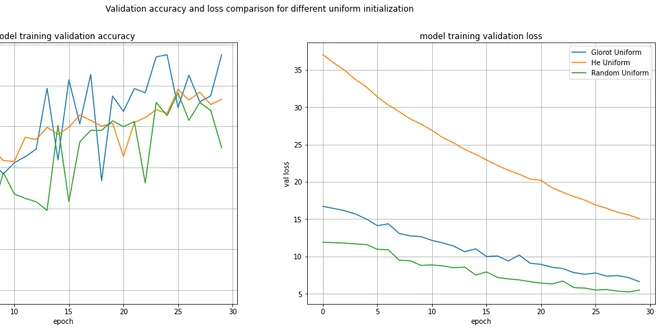

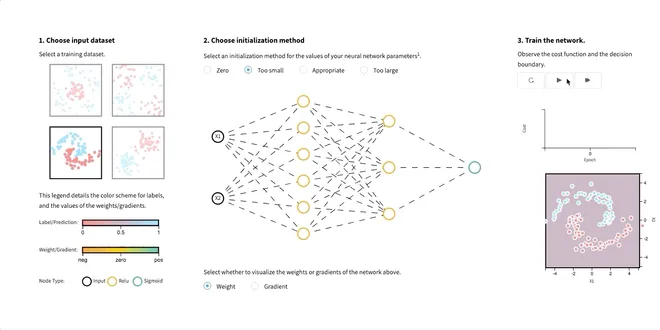

Hyper-parameters in Action! Part II - Weight Initializers

This is the second post of my series on hyper-parameters. In this post, I will show you the importance of properly initializing the weights of your deep neural network. We will start with a naive…

Read more at Towards Data Science

Weight Initialization in Neural Networks: A Journey From the Basics to Kaiming

I’d like to invite you to join me on an exploration through different approaches to initializing layer weights in neural networks. Step-by-step, through various short experiments and thought…

Read more at Towards Data Science

Why better weight initialization is important in neural networks?

At the beginning of my deep learning journey, I always underrated weight initialization. I believed weights should be initialized to random values without knowing answers to the questions like why…

Read more at Towards Data Science

Parameter Initialization

Now that we know how to access the parameters, let’s look at how to initialize them properly. We discussed the need for proper initialization in Section 5.4. The deep learning framework provides defa...

Read more at Dive into Deep Learning Book

Selecting the right weight initialization for your deep neural network

The weight initialization technique you choose for your neural network can determine how quickly the network converges or whether it converges at all. Although the initial values of these weights are…

Read more at Towards Data Science

Weight Initialization and Activation Functions in Deep Learning

Developing effective deep learning models requires fine-tuning. Take the time to select the correct activation function and weight initialization method.

Read more at Towards Data Science

Kaiming He Initialization in Neural Networks — Math Proof

Deriving optimal initial variance of weight matrices in neural network layers with ReLU activation function

Read more at Towards Data Science

What is Transfer Learning & Weight Initialization?

Welcome everyone! This is my seventh writing on my journey of Completing the Deep Learning Nanodegree in a month! I’ve done 46% of the third module out of a total of six modules of the degree…

Read more at Analytics Vidhya

A Visual Exploration of Weight Initialisation in Neural Networks

I’ve recently started exploring the visual potential of neural networks, and in the process I came across David Ha’s fascinating blog. In one of his posts he created a series of images by passing the…

Read more at Analytics Vidhya

How weights are initialized in Neural networks (Quick Revision)

The neural network is one of the fundamental concepts for modern deep learning. This article covers only the how and why of neural network initialization, so I assume you know the basic…

Read more at Analytics Vidhya

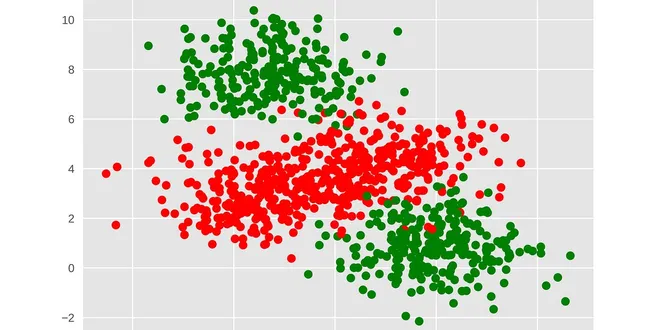

Initializing Weights for Deep Learning Models

Last Updated on April 8, 2023. In order to build a classifier that accurately classifies the data samples and performs well on test data, you need to initialize the weights in a way that the model conv...

Read more at MachineLearningMastery.com

Implementing different Activation Functions and Weight Initialization Methods Using Python

We will analyze how the choice of activation function and weight initialization method affects accuracy and the rate at which we reduce our loss in a deep neural network.

Read more at Towards Data Science

Xavier Glorot Initialization in Neural Networks — Math Proof

Detailed derivation for finding optimal initial distributions of weight matrices in deep learning layers with tanh activation function

Read more at Towards Data Science

Why Initialize a Neural Network with Random Weights?

Last Updated on March 26, 2020. The weights of artificial neural networks must be initialized to small random numbers. This is because this is an expectation of the stochastic optimization algorithm us...

Read more at Machine Learning Mastery

Generalised Method For Initializing Weights in CNN

Initialising the parameters with right values is one of the most important conditions for getting accurate results from a neural network. If all the weights are initialized with zero, the derivative…

Read more at Analytics Vidhya

The Importance and Reasoning behind Initialisation

Neural networks work by learning what parameters best fit a dataset to predict an output. In order to learn the best parameters, the ML engineer must initialise and then optimise them using the…

Read more at Towards Data Science

Introduction to Gradient Descent: Weight Initiation and Optimizers

Gradient Descent is one of the main driving algorithms behind all machine learning and deep learning methods. This mechanism has undergone several modifications over time in several ways to make it…

Read more at Towards Data Science

Initialization Techniques for Neural Networks

In this blog, we will see some initialization techniques used in Deep Learning. Anyone that even has little background in Machine Learning must know that we need to learn weights or hyperparameters…

Read more at Towards Data Science