AI-powered search & chat for Data / Computer Science Students

Transformers: A curious case of “attention”

In this post we will go through the intricacies behind the hypothesis of transformers and how this laid a foundational path for the BERT model. Also, we shall note that the stream of transfer…

Read more at Analytics Vidhya

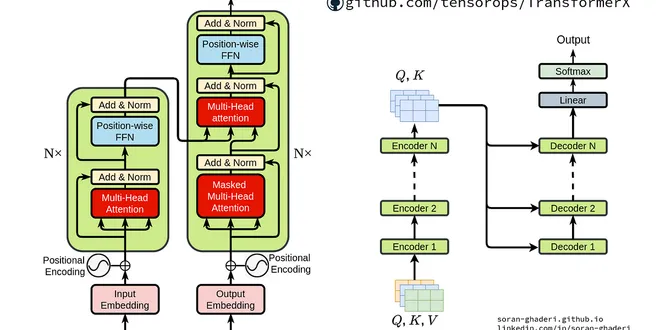

The Transformer: Attention Is All You Need

The Transformer paper, “Attention is All You Need”, is the #1 all-time paper on Arxiv Sanity Preserver as of this writing (Aug 14, 2019). This paper showed that using attention mechanisms alone, it’s…

Read more at Towards Data Science

All you need to know about ‘Attention’ and ‘Transformers’ — In-depth Understanding — Part 2

Attention, Self-Attention, Multi-head Attention, Masked Multi-head Attention, Transformers, BERT, and GPT

Read more at Towards Data Science

Transformers: Attention is all You Need

Introduction In one of the previous blogs, we discussed LSTMs and their structures. However, they are slow and need the inputs to be passed sequentially. Because today’s GPUs are designed for paralle...

Read more at Python in Plain English

The Intuition Behind Transformers — Attention is All You Need

Traditionally, recurrent neural networks and their variants have been used extensively for Natural Language Processing problems. In recent years, transformers have outperformed most RNN models. Before…

Read more at Towards Data Science

Self Attention and Transformers

This is really a continuation of an earlier post on “Introduction to Attention”, where we saw some of the key challenges that were addressed by the attention architecture introduced there (and…

Read more at Towards Data Science

Transformers in Action: Attention Is All You Need

Transformers: A brief survey, illustration, and implementation ...

Read more at Towards Data Science

Transformers — You just need Attention

Natural language processing or NLP is a subset of machine learning that deals with text analytics. It is concerned with the interaction of human language and computers. There have been different NLP…

Read more at Towards Data Science

Attention Is All You Need — Transformer

Discussing the Transformer model

Read more at Towards AI

All you need to know about ‘Attention’ and ‘Transformers’ — In-depth Understanding — Part 1

This is a long article that talks about almost everything one needs to know about the Attention mechanism including Self-Attention, Query, Keys, Values, Multi-Head Attention, Masked-Multi Head…

Read more at Towards Data Science
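Most of the articles listed here build on the same computation: scaled dot-product attention, softmax(QKᵀ/√d_k)V, where each query in Q is compared with every key in K and the resulting weights average the rows of V. As a rough illustration (not code taken from any of the linked posts), here is a minimal plain-Python sketch. The function names and the optional `causal` flag, which implements the masked variant where position i may only attend to positions up to i, are our own assumptions.

```python
import math

def softmax(row):
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V, causal=False):
    """Q: n x d_k, K: m x d_k, V: m x d_v, all lists of lists.

    Each output row is a weighted average of the rows of V, with weights
    softmax(q . k / sqrt(d_k)) over all keys k.
    """
    d_k = len(K[0])
    scale = 1.0 / math.sqrt(d_k)
    out = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                # Masked attention: a "future" position gets -inf, i.e. weight 0.
                scores.append(float("-inf"))
            else:
                scores.append(scale * sum(a * b for a, b in zip(q, k)))
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out

# Self-attention on three 2-d tokens (Q, K and V all come from the same input).
Q = K = V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(Q, K, V, causal=True)[0])  # → [1.0, 0.0]
```

With the causal mask, the first token can only see itself, so its output is exactly its own value vector; without the mask it would mix in the other two tokens.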

Understanding Attention In Transformers Models

I thought that it would be cool to build a language translator. At first, I thought that I would do so utilizing a recurrent neural network (RNN), or an LSTM. But as I did my research I started to…

Read more at Analytics Vidhya

Deep Dive into Self-Attention by Hand✍︎

Explore the intricacies of the attention mechanism responsible for fueling the transformers. Attention! Attention! Because ‘Attention is All You Need’. No, I am not saying that, the Transformer is. Im...

Read more at Towards Data Science

Transformer — Attention is all you need

In the previous post, we discussed attention-based seq2seq models and the logic behind their inception. The plan was to create a PyTorch implementation story about the same but turns out, PyTorch…

Read more at Towards Data Science

Self-Attention in Transformers

A Beginner-Friendly Guide to Self-Attention Mechanism. Are you intrigued by recent technologies like OpenAI’s ChatGPT, DALL-E, Stable Diffusion, Midjourney, and more?...

Read more at Towards AI

Transformers Explained: A Beginner’s Guide to the Attention-Based Model

Understanding Transformer Architecture. Table of Contents: 1. Introduction 1.1. Understanding Transformer Architecture 2. Attention Is All You Need — Summary 2.1....

Read more at Level Up Coding

Building Blocks of Transformers: Attention

The Borrower, the Lender, and the Transformer: A Simple Look at Attention. It’s been 5 years… and the Transformer architecture seems almost untouchable. During all this time, there was no significant c...

Read more at Towards AI

Explaining Attention in Transformers [From The Encoder Point of View]

In this article, we will take a deep dive into the concept of attention in Transformer networks, particularly from the encoder’s perspective. We will cover the followi...

Read more at Towards AI

Matters of Attention: What is Attention and How to Compute Attention in a Transformer Model

A comprehensive and easy guide to Attention in Transformer Models (with example code)

Read more at Towards Data Science

Attention for Vision Transformers, Explained

Vision Transformers Explained Series The Math and the Code Behind Attention Layers in Computer Vision Since their introduction in 2017 with Attention is All You Need¹, transformers have established t...

Read more at Towards Data Science
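In a Vision Transformer the attention layer itself is the same as in text models; what changes is how an image becomes a sequence of tokens. A common approach, the patch splitting popularized by ViT, cuts the image into non-overlapping P×P patches and flattens each one into a vector. The sketch below is a simplified illustration, assuming a single-channel image stored as a list of rows whose height and width are divisible by the patch size; the function name is hypothetical, and the learned linear projection and position embeddings that follow in a real model are omitted.

```python
def image_to_patches(image, patch):
    """Split an H x W grayscale image (list of rows) into flattened patches.

    Returns (H / patch) * (W / patch) tokens, each of length patch * patch,
    ordered row-major over the grid of patches.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            # Flatten one patch row by row into a single token vector.
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4 x 4 toy image
print(image_to_patches(img, 2)[0])  # → [0, 1, 4, 5]
```

A 4×4 image with 2×2 patches yields a sequence of four tokens, which attention then treats exactly like four words.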

From Transformers to Performers: Approximating Attention

A few weeks ago, researchers from Google, the University of Cambridge, DeepMind and the Alan Turing Institute released the paper Rethinking Attention with Performers, which seeks to find a solution to…

Read more at Towards Data Science
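The idea behind Performers is to replace the softmax kernel exp(q·k/√d) with an inner product of random feature maps φ(q)·φ(k), so that attention can be computed as φ(Q)(φ(K)ᵀV) without ever forming the n×n attention matrix. The sketch below uses positive random features, φ(x) = exp(w·x − ‖x‖²/2)/√m with Gaussian rows w, one of the estimators described for Performers (FAVOR+). It is a simplified illustration: the orthogonal-feature and feature-redrawing refinements are omitted, and the function names, `num_features` default and `seed` parameter are our own assumptions.

```python
import math
import random

def positive_features(x, W):
    """phi(x) = exp(w . x - |x|^2 / 2) / sqrt(m) for each random row w of W."""
    half_sq_norm = sum(v * v for v in x) / 2.0
    m = len(W)
    return [math.exp(sum(a * b for a, b in zip(w, x)) - half_sq_norm) / math.sqrt(m)
            for w in W]

def performer_attention(Q, K, V, num_features=256, seed=0):
    """Approximate softmax(Q K^T / sqrt(d)) V in time linear in sequence length."""
    d = len(Q[0])
    rng = random.Random(seed)
    # Random projection rows w ~ N(0, I_d).
    W = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(num_features)]
    # Scaling q and k by d^(-1/4) makes phi(q) . phi(k) an unbiased
    # estimate of exp(q . k / sqrt(d)).
    s = d ** -0.25
    phi_q = [positive_features([s * v for v in q], W) for q in Q]
    phi_k = [positive_features([s * v for v in k], W) for k in K]
    d_v = len(V[0])
    # One pass over the keys: KV = phi(K)^T V (m x d_v), k_sum = phi(K)^T 1.
    KV = [[0.0] * d_v for _ in range(num_features)]
    k_sum = [0.0] * num_features
    for fk, v in zip(phi_k, V):
        for f in range(num_features):
            k_sum[f] += fk[f]
            for c in range(d_v):
                KV[f][c] += fk[f] * v[c]
    out = []
    for fq in phi_q:
        # All features are positive, so the normalizer is strictly positive.
        denom = sum(a * b for a, b in zip(fq, k_sum))
        out.append([sum(fq[f] * KV[f][c] for f in range(num_features)) / denom
                    for c in range(d_v)])
    return out
```

The cost is roughly O(n·m·d) instead of O(n²·d), which is the point of the approximation for long sequences. Because the features are positive, each output row is still a weighted average of the rows of V, just with approximate weights.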

Transformers Explained Visually (Part 3): Multi-head Attention, deep dive

A Gentle Guide to the inner workings of Self-Attention, Encoder-Decoder Attention, Attention Score and Masking, in Plain English.

Read more at Towards Data Science
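Multi-head attention runs several attention operations side by side, each on its own projection of the input, then concatenates the head outputs and projects them back to the model dimension. A minimal plain-Python sketch of self-attention with multiple heads, assuming the caller supplies per-head projection matrices `W_q`, `W_k`, `W_v` (each d_model × d_head) and an output matrix `W_o` ((num_heads · d_head) × d_model); in a trained model these are learned, and the helper names are hypothetical.

```python
import math

def matmul(A, B):
    # (n x k) @ (k x m) -> (n x m); zip(*B) iterates over the columns of B.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def attention(Q, K, V):
    # Scaled dot-product attention, softmax(Q K^T / sqrt(d_head)) V, row by row.
    scale = 1.0 / math.sqrt(len(K[0]))
    out = []
    for q in Q:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        out.append([sum(e / z * v[c] for e, v in zip(exps, V))
                    for c in range(len(V[0]))])
    return out

def multi_head_attention(X, heads, W_o):
    """X: n x d_model tokens; Q, K and V are all projected from X.

    heads: list of (W_q, W_k, W_v) tuples, one per head.
    W_o: (num_heads * d_head) x d_model output projection.
    """
    per_head = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
                for W_q, W_k, W_v in heads]
    # Concatenate the heads' outputs along the feature axis for each token.
    concat = [sum((h[i] for h in per_head), []) for i in range(len(X))]
    return matmul(concat, W_o)

# Two 1-d heads: head 0 looks only at feature 0, head 1 only at feature 1.
pick0, pick1 = [[1.0], [0.0]], [[0.0], [1.0]]
heads = [(pick0, pick0, pick0), (pick1, pick1, pick1)]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(multi_head_attention([[1.0, 2.0], [1.0, 2.0]], heads, identity))
# → [[1.0, 2.0], [1.0, 2.0]]
```

Identical tokens attend to each other equally, so each head returns the shared value and the concatenation reproduces the input. With distinct tokens, each head would weight them by its own notion of similarity, which is what lets different heads focus on different relationships.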

The Ultimate Transformer And The Attention You Need.

A Transformer is one of the most popular state-of-the-art deep learning architectures and is mostly used for NLP tasks. Ever since the advent of the transformer, it has replaced the RNN and LSTM for…

Read more at Analytics Vidhya

The AiEdge+: Everything you need to know about the Attention Mechanism!

Transformers are taking every domain of ML by storm! Are you ready for the revolution? I think it is becoming more and more important to understand the basics, so pay Attention because Attention is th...

Read more at The AiEdge Newsletter

Attention and Transformer Models

“Attention Is All You Need” by Vaswani et al., 2017 was a landmark paper that proposed a completely new type of model — the Transformer. Nowadays, the Transformer model is ubiquitous in the realms of…

Read more at Towards Data Science