AI-powered search & chat for Data / Computer Science Students

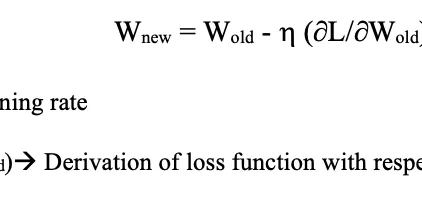

Exploding And Vanishing Gradient Problem: Math Behind The Truth

Hello Stardust! Today we’ll see the mathematical reason behind the exploding and vanishing gradient problem, but first let’s understand the problem in a nutshell. “Usually, when we train a Deep model using…

Read more at Becoming Human: Artificial Intelligence Magazine
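The chain-rule argument these posts build on can be summarized for a toy network of scalar sigmoid layers. The notation ($z_k$, $a_k$, $w_k$, $b_k$, loss $\mathcal{L}$) is introduced here for illustration and is not taken from the linked article:

$$
z_k = w_k\,a_{k-1} + b_k, \qquad a_k = \sigma(z_k), \qquad k = 1, \dots, L
$$

$$
\frac{\partial \mathcal{L}}{\partial a_0}
= \frac{\partial \mathcal{L}}{\partial a_L} \prod_{k=1}^{L} \frac{\partial a_k}{\partial a_{k-1}}
= \frac{\partial \mathcal{L}}{\partial a_L} \prod_{k=1}^{L} w_k\,\sigma'(z_k)
$$

$$
0 < \sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr) \le \tfrac{1}{4}
\;\Rightarrow\;
\left|\frac{\partial \mathcal{L}}{\partial a_0}\right| \le \left|\frac{\partial \mathcal{L}}{\partial a_L}\right| \prod_{k=1}^{L} \frac{|w_k|}{4}
$$

When the factors $|w_k\,\sigma'(z_k)|$ stay below 1, the product shrinks geometrically with depth (vanishing gradients); when they consistently exceed 1, it grows geometrically (exploding gradients).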

Vanishing and Exploding Gradients

In this blog, I will explain how a sigmoid activation can cause both the vanishing and the exploding gradient problems. Vanishing and exploding gradients are among the biggest problems that the neural network…

Read more at Level Up Coding
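A minimal sketch of the sigmoid claim, under a toy assumption that is ours rather than the article's: every layer is a single scalar unit with the same weight `w`, and the biases are chosen so each pre-activation stays at `z = 0`, where the sigmoid derivative peaks at 0.25. The backward factor per layer is then `w * 0.25`, so small weights shrink the gradient and large ones inflate it. The helper names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # sigma'(z) = sigma(z) * (1 - sigma(z)); its maximum is 0.25 at z = 0
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_scale(w: float, z: float, depth: int) -> float:
    # Product of identical per-layer factors w * sigma'(z) over `depth` layers
    # (toy assumption: every layer shares the same w and pre-activation z).
    return (w * sigmoid_grad(z)) ** depth


print(gradient_scale(1.0, 0.0, 20))  # 0.25**20, about 9.1e-13: vanishing
print(gradient_scale(8.0, 0.0, 20))  # 2.0**20 = 1048576.0: exploding
```

A large `w` only explodes here because the biases are assumed to keep `z` at 0; without that, a large weight also pushes `z` into the saturated tails, where `sigmoid_grad` drops toward 0.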

Vanishing and Exploding Gradient Problems

One of the problems with training very deep neural networks is vanishing and exploding gradients (i.e., when training a very deep neural network, derivatives sometimes become very, very small…

Read more at Analytics Vidhya

How to Avoid Exploding Gradients With Gradient Clipping

Last Updated on August 28, 2020. Training a neural network can become unstable given the choice of error function, learning rate, or even the scale of the target variable. Large updates to weights duri...

Read more at Machine Learning Mastery
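The two common clipping rules can be sketched on a plain list of gradient components. This is an illustrative stand-alone version, not any framework's implementation; in practice Keras optimizers accept `clipnorm` and `clipvalue` arguments, and PyTorch provides `torch.nn.utils.clip_grad_norm_` and `torch.nn.utils.clip_grad_value_`.

```python
import math
from typing import List


def clip_by_value(grads: List[float], limit: float) -> List[float]:
    # Clamp each component independently into [-limit, limit]; this can change the direction.
    return [max(-limit, min(limit, g)) for g in grads]


def clip_by_norm(grads: List[float], max_norm: float) -> List[float]:
    # Rescale the whole vector only if its L2 norm exceeds max_norm; the direction is preserved.
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


grads = [3.0, 4.0]  # L2 norm is 5.0
print(clip_by_value(grads, 1.0))  # [1.0, 1.0]
print(clip_by_norm(grads, 1.0))   # approximately [0.6, 0.8]
```

Clipping by norm is often preferred because it bounds the step size without distorting the update direction, whereas clipping by value can rotate the gradient.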

Alleviating Gradient Issues

Solve the vanishing or exploding gradient problem while training a neural network with gradient descent by using ReLU and SELU activation functions, BatchNormalization, Dropout, and weight initialization

Read more at Towards Data Science
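As a small illustration of why the activation choice matters, here is SELU next to ReLU in plain Python. The constants are the published SELU values from Klambauer et al. (2017), the same ones PyTorch documents for `torch.nn.SELU`; the helper functions are our own sketch. Neither derivative is capped at 0.25 the way sigmoid's is, and SELU, unlike ReLU, keeps a nonzero gradient for negative inputs.

```python
import math

# Published SELU constants (Klambauer et al., 2017, "Self-Normalizing Neural Networks").
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x: float) -> float:
    # scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


def selu_grad(x: float) -> float:
    # Derivative: scale for x > 0, scale * alpha * exp(x) otherwise (never exactly 0)
    return SELU_SCALE * (1.0 if x > 0 else SELU_ALPHA * math.exp(x))


def relu_grad(x: float) -> float:
    # ReLU passes the gradient through unchanged for x > 0 and blocks it otherwise
    return 1.0 if x > 0 else 0.0


for x in (-2.0, -0.5, 0.5, 2.0):
    print(x, round(selu(x), 4), round(selu_grad(x), 4), relu_grad(x))
```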

Vanishing & Exploding Gradient Problem: Neural Networks 101

What are Vanishing & Exploding Gradients? In one of my previous posts, we explained how neural networks learn through the backpropagation algorithm. The main idea is that we start on the output layer and ...

Read more at Towards Data Science
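The "start at the output layer and move backward" idea can be sketched for a toy chain of scalar `tanh` layers, treating the final activation itself as the quantity being differentiated. The network shape and function names are hypothetical; a central finite difference serves as an independent check of the backward pass.

```python
import math
from typing import List


def forward(x: float, weights: List[float]) -> List[float]:
    # Return activations a_0..a_L of the toy chain a_k = tanh(w_k * a_{k-1}).
    acts = [x]
    for w in weights:
        acts.append(math.tanh(w * acts[-1]))
    return acts


def backward(acts: List[float], weights: List[float]) -> float:
    # Start at the output with d a_L / d a_L = 1 and multiply local derivatives back to the input.
    grad = 1.0
    for k in range(len(weights), 0, -1):
        # d a_k / d a_{k-1} = (1 - tanh^2(w_k * a_{k-1})) * w_k = (1 - a_k^2) * w_k
        grad *= (1.0 - acts[k] ** 2) * weights[k - 1]
    return grad


weights = [0.5, -1.2, 0.8]
x = 0.3
analytic = backward(forward(x, weights), weights)

# Central finite difference of a_L with respect to the input, as a sanity check.
eps = 1e-6
numeric = (forward(x + eps, weights)[-1] - forward(x - eps, weights)[-1]) / (2 * eps)
print(analytic, numeric)  # the two values should agree to many decimal places
```

Each backward step multiplies in one more local factor, which is exactly where the repeated products behind vanishing and exploding gradients come from.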

A Gentle Introduction to Exploding Gradients in Neural Networks

Last Updated on August 14, 2019. Exploding gradients are a problem where large error gradients accumulate and result in very large updates to neural network model weights during training. This has the ...

Read more at Machine Learning Mastery

What Are Gradients, and Why Do They Explode?

Gradients are arguably the most important fundamental concept in machine learning. In this post we will explore the concept of gradients, what makes them vanish and explode, and how to rein them in. W...

Read more at Towards Data Science

The Vanishing Gradient Problem

The problem: as more layers using certain activation functions are added to neural networks, the gradients of the loss function approach zero, making the network hard to train. Why: The sigmoid…

Read more at Towards Data Science

More about The Gradient

In conversations with Eugene, I identified specific examples which may aid my most recent page, “Overcoming the Vanishing Gradient Problem”. Consider a recurrent neural network that plays a Maze…

Read more at Towards Data Science

The Vanishing/Exploding Gradient Problem in Deep Neural Networks

A difficulty that we are faced with when training deep Neural Networks is that of vanishing or exploding gradients. For a long period of time, this obstacle was a major barrier for training large…

Read more at Towards Data Science

The Problem of Vanishing Gradients

Vanishing gradients occur while training deep neural networks using gradient-based optimization methods. It occurs due to the nature of the backpropagation algorithm that is used to train the neural…

Read more at Towards Data Science

Vanishing Gradient Problem in Deep Learning

In the 1980s, researchers were not able to build deep artificial neural networks (ANNs) because sigmoid had to be used in every neuron, as ReLU had not yet been invented. Because of sigmoid…

Read more at Analytics Vidhya

Visualizing the vanishing gradient problem

Last Updated on November 26, 2021. Deep learning was a recent invention. Partially, it is due to improved computation power that allows us to use more layers of perceptrons in a neural network. But at ...

Read more at Machine Learning Mastery

Natural Gradient

We are taking a brief detour from the series to understand what Natural Gradient is. The next algorithm we examine in the Gradient Boosting world is NGBoost and to understand it completely, we need…

Read more at Towards Data Science
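For orientation, the standard definition: the natural gradient preconditions the ordinary gradient with the inverse Fisher information matrix $F(\theta)$ of the model's distribution $p_\theta$. The Normal example uses a $(\mu, \log\sigma)$ parameterization chosen here for illustration; whether the linked article uses the same one is an assumption.

$$
\tilde{\nabla}_\theta \mathcal{L} = F(\theta)^{-1}\,\nabla_\theta \mathcal{L},
\qquad
F(\theta) = \mathbb{E}_{x \sim p_\theta}\!\left[\nabla_\theta \log p_\theta(x)\,\nabla_\theta \log p_\theta(x)^{\top}\right]
$$

$$
\theta = (\mu, \log\sigma),\; p_\theta = \mathcal{N}(\mu, \sigma^2):
\quad
F(\theta) = \begin{pmatrix} 1/\sigma^2 & 0 \\ 0 & 2 \end{pmatrix}
\;\Rightarrow\;
\tilde{\nabla}_\theta \mathcal{L} = \left(\sigma^2\,\frac{\partial \mathcal{L}}{\partial \mu},\; \frac{1}{2}\,\frac{\partial \mathcal{L}}{\partial \log\sigma}\right)
$$

The Fisher rescaling is what makes the update (to first order) independent of how the distribution happens to be parameterized.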

The Exploding and Vanishing Gradients Problem in Time Series

In this post, we focus on deep learning techniques for sequential data. All of us are familiar with this kind of data. For example, text is a sequence of words, and video is a sequence of images. More…

Read more at Towards Data Science
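In a recurrent network the same weight is reused at every time step, so the gradient with respect to an early hidden state is a product over time. A minimal sketch with a hypothetical scalar RNN `h_t = tanh(w * h_{t-1} + u * x_t)`: with all-zero inputs the state stays at 0 and the gradient is exactly `w**T` (up to rounding), while saturating inputs shrink it further even when `w > 1`.

```python
import math
from typing import List


def rnn_states(h0: float, xs: List[float], w: float, u: float) -> List[float]:
    # Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t)
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def grad_last_wrt_first(hs: List[float], w: float) -> float:
    # d h_T / d h_0 = prod_t w * (1 - h_t^2), using tanh'(a) = 1 - tanh(a)^2
    g = 1.0
    for h in hs[1:]:
        g *= w * (1.0 - h * h)
    return g


T = 50
for w in (0.9, 1.1):
    hs = rnn_states(0.0, [0.0] * T, w, u=1.0)
    print(w, grad_last_wrt_first(hs, w))  # w**T: shrinks for w < 1, grows for w > 1

# Large inputs saturate tanh, so (1 - h_t^2) is tiny and the gradient vanishes despite w > 1.
saturated = rnn_states(0.0, [3.0] * T, 1.1, u=1.0)
print(grad_last_wrt_first(saturated, 1.1))
```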

How to Fix the Vanishing Gradients Problem Using the ReLU

Last Updated on August 25, 2020. The vanishing gradients problem is one example of unstable behavior that you may encounter when training a deep neural network. It describes the situation where a deep ...

Read more at Machine Learning Mastery
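A toy comparison of how the activation derivative alone compounds across depth. As a simplifying assumption, weights are left out (set to 1) and every layer receives the same hypothetical pre-activation, so only the activation's contribution to the backward product is visible.

```python
import math
from typing import Callable, List


def sigmoid_grad(z: float) -> float:
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # at most 0.25


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0  # exactly 1 on the active side


def chain_derivative(act_grad: Callable[[float], float], preacts: List[float]) -> float:
    # Product of activation derivatives along one path (weights assumed to be 1).
    prod = 1.0
    for z in preacts:
        prod *= act_grad(z)
    return prod


preacts = [0.5] * 30  # hypothetical positive pre-activations at 30 layers
print(chain_derivative(sigmoid_grad, preacts))  # about 0.235**30, on the order of 1e-19
print(chain_derivative(relu_grad, preacts))     # 1.0
```

The flip side is that a single non-positive pre-activation zeroes the ReLU path entirely, which is the "dying ReLU" issue that leaky variants try to soften.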

Gradient Centralization

A recently published paper proposes a simple answer: Gradient Centralization, a new optimization technique which can be easily integrated into the gradient-based optimization algorithm you already use…

Read more at Towards Data Science
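The core operation of Gradient Centralization is to subtract, from each weight vector's gradient, the mean of that gradient, before the optimizer step. A minimal sketch, assuming the gradient is stored as rows where each row holds the incoming weights of one output neuron (the layout of a PyTorch `nn.Linear` weight); the function name is hypothetical.

```python
from typing import List


def centralize(grad_rows: List[List[float]]) -> List[List[float]]:
    # Subtract each row's mean so every centralized row sums to zero.
    # Assumption: one row per output neuron's incoming weight vector.
    out = []
    for row in grad_rows:
        mean = sum(row) / len(row)
        out.append([g - mean for g in row])
    return out


grad = [[1.0, 2.0, 3.0], [0.0, -3.0, 6.0]]
print(centralize(grad))  # [[-1.0, 0.0, 1.0], [-1.0, -4.0, 5.0]]
```

In an actual training loop this would be applied to the weight gradients (typically not biases) right before handing them to SGD, Adam, or whichever optimizer is already in use.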

torch.gradient

Estimates the gradient of a function $g : \mathbb{R}^n \rightarrow \mathbb{R}$ in one or more dimensions using the second-order accurate central differences method. The gradient...

Read more at PyTorch documentation
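To show what "central differences" means for uniformly spaced 1-D samples, here is a plain-Python sketch. It is not PyTorch's implementation: interior points use the central difference `(f[i+1] - f[i-1]) / (2h)`, and, as an assumption of this sketch, the two edges use simple one-sided differences.

```python
from typing import List


def gradient_1d(values: List[float], spacing: float = 1.0) -> List[float]:
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    grads = [0.0] * n
    # One-sided (first-order) differences at the two edges.
    grads[0] = (values[1] - values[0]) / spacing
    grads[-1] = (values[-1] - values[-2]) / spacing
    # Central differences in the interior.
    for i in range(1, n - 1):
        grads[i] = (values[i + 1] - values[i - 1]) / (2.0 * spacing)
    return grads


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x * x for x in xs]  # g(x) = x^2, true derivative 2x
print(gradient_1d(ys))  # [1.0, 2.0, 4.0, 6.0, 7.0]
```

The interior values match `2x` exactly because the central difference has no error on quadratics; the edge values are less accurate, which is why the edge treatment is a configurable choice in real libraries.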

Gradient-Based Optimization Demystified

In real life, you often have to deal with things you don’t completely understand. For instance, you drive a car, not knowing how the engine works. Most strange of all, you drive your body and your…

Read more at Becoming Human: Artificial Intelligence Magazine

Avoiding the vanishing gradients problem using gradient noise addition

A simple method of adding Gaussian noise to gradients during training together with batch normalization improves the test accuracy of a neural network as high as 54.47%.

Read more at Towards Data Science
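A minimal sketch of gradient noise addition. The annealed schedule below, variance `eta / (1 + t) ** gamma` with `gamma = 0.55`, is the one proposed by Neelakantan et al. (2015); whether the linked article uses the same schedule and values is an assumption, and the function names are hypothetical.

```python
import random
from typing import List


def noise_std(step: int, eta: float = 0.3, gamma: float = 0.55) -> float:
    # Standard deviation of the noise: sqrt(eta / (1 + t)^gamma), decaying with the step t.
    return (eta / (1.0 + step) ** gamma) ** 0.5


def add_gradient_noise(grads: List[float], step: int, rng: random.Random,
                       eta: float = 0.3, gamma: float = 0.55) -> List[float]:
    # Add independent zero-mean Gaussian noise to every gradient component.
    std = noise_std(step, eta, gamma)
    return [g + rng.gauss(0.0, std) for g in grads]


rng = random.Random(0)
noisy = add_gradient_noise([0.1, -0.2, 0.05], step=10, rng=rng)
print(noisy)
```

The decay means early training explores more while later updates are dominated by the true gradient.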

Courage to Learn ML: Tackling Vanishing and Exploding Gradients (Part 2)

A Comprehensive Survey on Activation Functions, Weights Initialization, Batch Normalization, and Their Applications in PyTorch

Read more at Towards Data Science
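Two widely used initialization rules, written out in plain Python as a sketch: Xavier/Glorot uniform draws from `U(-a, a)` with `a = sqrt(6 / (fan_in + fan_out))`, and He (Kaiming) normal draws from `N(0, 2 / fan_in)`. PyTorch ships these as `torch.nn.init.xavier_uniform_` and `torch.nn.init.kaiming_normal_`; the list-of-rows layout here is our own choice.

```python
import math
import random
from typing import List


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    # Keeps activation variance roughly constant for tanh/sigmoid-style layers.
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def he_normal(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    # Variance 2 / fan_in compensates for ReLU zeroing roughly half of its inputs.
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


W = he_normal(256, 128, random.Random(42))  # 128 output rows, 256 inputs each
print(len(W), len(W[0]))
```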

Integrated Gradients from Scratch

When reading a paper, I think it’s always good to dive into the related code for a better understanding of it. Often though that code is very long with a lot of optimizations and utility functions…

Read more at Towards Data Science
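Integrated Gradients attributes `F(x) - F(x')` to input features by averaging the gradient along the straight path from a baseline `x'` to `x` and scaling by `x_i - x'_i`. A minimal from-scratch sketch using a midpoint Riemann sum; the toy function `f` and its hand-written gradient are hypothetical stand-ins for a model.

```python
from typing import Callable, List

Vector = List[float]


def integrated_gradients(grad_f: Callable[[Vector], Vector], x: Vector,
                         baseline: Vector, steps: int = 50) -> Vector:
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint rule on [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = grad_f(point)
        for i in range(len(x)):
            total[i] += g[i]
    # Average path gradient times the input difference, per feature.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


def f(v: Vector) -> float:
    return v[0] ** 2 + 3.0 * v[0] * v[1]


def grad_f(v: Vector) -> Vector:
    return [2.0 * v[0] + 3.0 * v[1], 3.0 * v[0]]


x, baseline = [1.0, 2.0], [0.0, 0.0]
attr = integrated_gradients(grad_f, x, baseline)
print(attr, sum(attr), f(x) - f(baseline))  # about [4.0, 3.0], 7.0, 7.0
```

The last line checks the completeness property: the attributions sum to `F(x) - F(x')`. For this quadratic toy the midpoint rule is exact; for a real network more steps are usually needed.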

Vanishing gradient in Deep Neural Network

Nowadays, the networks used for image analysis are made of many layers stacked one after the other to form so-called deep networks. One of the biggest problems in training these architectures is the…

Read more at Towards Data Science
