AI-powered search & chat for Data / Computer Science Students

Adam Optimization Algorithm

Optimization, as defined by the Oxford dictionary, is the action of making the best or most effective use of a situation or resource, or simply, making things the best they can be. Often, if something…

Read more at Towards Data Science

How to implement an Adam Optimizer from Scratch

Adam is an algorithm that optimizes stochastic objective functions based on adaptive estimates of moments. The update rule of Adam is a combination of momentum and the RMSProp optimizer. The rules are…

Read more at Towards Data Science
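
As a rough illustration of the "momentum plus RMSProp" description above, here is a minimal sketch of a scalar Adam update in plain Python. It is not taken from the linked article. The function name `adam_minimize`, the toy objective, and the learning rate of 0.1 are assumptions chosen for the example; the decay rates 0.9 and 0.999 and the epsilon of 1e-8 are the defaults suggested in the Adam paper.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function given its gradient, using Adam-style updates.

    Hypothetical helper for illustration only.
    """
    x = x0
    m = 0.0  # first-moment estimate (momentum-like running mean of gradients)
    v = 0.0  # second-moment estimate (RMSProp-like running mean of squared gradients)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: both estimates start at zero and are biased toward it early on.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective (assumed): f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
first_step = adam_minimize(lambda x: 2 * (x - 3), x0=0.0, steps=1)
```

On this toy problem the result should land close to 3. The very first step has magnitude of roughly `lr` whatever the gradient scale, because the bias-corrected ratio `m_hat / sqrt(v_hat)` is about ±1 at `t = 1`.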

ADAM in 2019 — What’s the next ADAM optimizer

Deep learning has made a lot of progress, with new models coming out every few weeks, yet we are still stuck with Adam in 2019. Do you know when the Adam paper came out? It’s 2014, compare…

Read more at Towards Data Science

The Math behind Adam Optimizer

Why is Adam the most popular optimizer in Deep Learning? Let’s understand it by diving into its math, and recreating the algorithm. If you...

Read more at Towards Data Science

Complete Guide to Adam Optimization

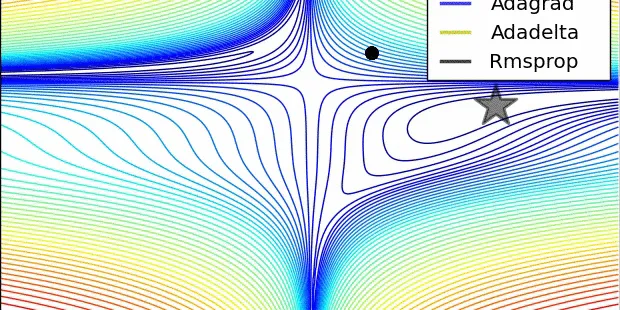

Adam optimizer from definition, math explanation, algorithm walkthrough, visual comparison, implementation, to finally the advantages and disadvantages of Adam compared to other optimizers.

Read more at Towards Data Science

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning

Last Updated on January 13, 2021. The choice of optimization algorithm for your deep learning model can mean the difference between good results in minutes, hours, and days. The Adam optimization algor...

Read more at Machine Learning Mastery

Adam

Implements Adam algorithm. For further details regarding the algorithm we refer to Adam: A Method for Stochastic Optimization. params (iterable) – iterable of parameters to optimize or dicts defini...

Read more at PyTorch documentation

Code Adam Optimization Algorithm From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

Read more at Machine Learning Mastery
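
For reference, "following the negative gradient" can be sketched in a few lines of plain Python. This is an illustrative assumption, not code from the linked article; the helper name `gradient_descent`, the step size, and the toy objective are all hypothetical.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Plain gradient descent on a 1-D function, given its gradient (illustrative helper)."""
    x = x0
    for _ in range(steps):
        # Move against the gradient, scaled by a fixed step size.
        x -= lr * grad(x)
    return x


# Toy objective (assumed): f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
gd_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
```

Here every step uses the same fixed step size, whereas adaptive methods such as Adam rescale each step using running statistics of past gradients.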

Adamax

Implements Adamax algorithm (a variant of Adam based on infinity norm). For further details regarding the algorithm we refer to Adam: A Method for Stochastic Optimization. params (iterable) – itera...

Read more at PyTorch documentation

Why Learned Optimizers Outperform “hand-designed” Optimizers like Adam

Understanding how learned optimizers work allows us to take learned optimizers trained in one setting and know when and how to apply them to new problems. Use to extract insight from the high-dimensi...

Read more at Towards Data Science

Understanding Gradient Descent and Adam Optimization

Intuitive view to Gradient Descent, and basic understanding of Adam Optimization in Deep Learning

Read more at Towards Data Science

Optimizers Explained - Adam, Momentum and Stochastic Gradient Descent

Picking the right optimizer with the right parameters can help you squeeze the last bit of accuracy out of your neural network model.

Read more at Machine Learning From Scratch

Gradient Descent Optimization With AdaMax From Scratch

Last Updated on September 25, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitatio...

Read more at Machine Learning Mastery

Momentum, RMSprop And Adam Optimizer

An optimizer is a technique that we use to minimize the loss or increase the accuracy. We do that by finding the local minima of the cost function. When our cost function is convex in nature having only…

Read more at Analytics Vidhya

AdamW

Implements AdamW algorithm. For further details regarding the algorithm we refer to Decoupled Weight Decay Regularization. params (iterable) – iterable of parameters to optimize or dicts defining p...

Read more at PyTorch documentation

Optimizers

In machine/deep learning, the main purpose of optimizers is to reduce the cost/loss by updating weights, learning rates, and biases, and so to improve model performance. Many people are already training neural…

Read more at Towards Data Science

Adam

In the discussions leading up to this section we encountered a number of techniques for efficient optimization. Let’s recap them in detail here: We saw that Section 12.4 is more effective than Gradien...

Read more at Dive into Deep Learning Book

Multiclass Classification Neural Network using Adam Optimizer

I wanted to see the difference between the Adam optimizer and the Gradient descent optimizer in a more hands-on way. So I decided to implement it instead. In this, I have taken the iris dataset and…

Read more at Towards Data Science

A Complete Guide to Adam and RMSprop Optimizer

Optimization is a mathematical discipline that determines the “best” solution in a quantitatively well-defined sense. Mathematical optimization of the processes governed by partial differential…

Read more at Analytics Vidhya

Flux.jl on MNIST — What about ADAM?

So far we’ve seen a performance analysis using the standard gradient descent optimizer. But which results do we get, if we use a more...

Read more at Towards Data Science

Optimizers

What is an optimizer? It is very important to tweak the weights of the model during the training process, to make our predictions as correct and optimized as possible. But how exactly do you ...

Read more at Machine Learning Glossary

Momentum, Adam’s optimizer and more

If you’ve checked the jupyter notebook related to my article on learning rates, you’d know that it had an update function which was basically calculating the outputs, calculating the loss and…

Read more at Becoming Human: Artificial Intelligence Magazine

Understanding Adam: how loss functions are minimized?

While using the fast.ai library built on top of PyTorch, I realized that I have never had to interact with an optimizer so far. Since fast.ai already deals with it when calling the fit_one_cycle…

Read more at Towards Data Science

Adam — latest trends in deep learning optimization.

Adam [1] is an adaptive learning rate optimization algorithm that’s been designed specifically for training deep neural networks. First published in 2014, Adam was presented at a very prestigious…

Read more at Towards Data Science