AI-powered search & chat for Data / Computer Science Students

Extract-Transform-Load in Elasticsearch and Python

Key takeaways from connecting to and working with the Elasticsearch Python interfaces for high data volumes in ETL processes. When we’re designing an enterprise-level solution, one specific layer we take…

Read more at Analytics Vidhya
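As a minimal sketch of the transform-and-load handoff, assuming the official `elasticsearch` Python client: its `elasticsearch.helpers.bulk(client, actions)` helper accepts an iterable of action dicts with `_index` and `_source` keys and sends them to the cluster in chunks. Producing those actions from a generator keeps memory flat when volumes are high. The index name `books`, the key-lowercasing transform, and the sample rows are hypothetical, and the client call is left as a comment so the snippet runs without a cluster.

```python
from typing import Any, Dict, Iterable, Iterator


def to_bulk_actions(records: Iterable[Dict[str, Any]], index: str) -> Iterator[Dict[str, Any]]:
    """Lazily turn raw records into bulk-style action dicts.

    The transform (lower-casing keys, dropping None values) is a
    hypothetical example of the 'T' in ETL.
    """
    for record in records:
        doc = {key.lower(): value for key, value in record.items() if value is not None}
        yield {"_index": index, "_source": doc}


# Hypothetical source rows; a real pipeline might stream these from a file or database.
rows = ({"ID": i, "Title": f"Book {i}", "Note": None} for i in range(3))
actions = list(to_bulk_actions(rows, "books"))  # "books" is a hypothetical index name
print(actions[0])
# Against a live cluster, pass the generator itself rather than a list, e.g.
# elasticsearch.helpers.bulk(client, to_bulk_actions(source_rows, "books"))
```

Keeping the transform inside a generator means no more than one chunk of documents needs to be held in memory at a time.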

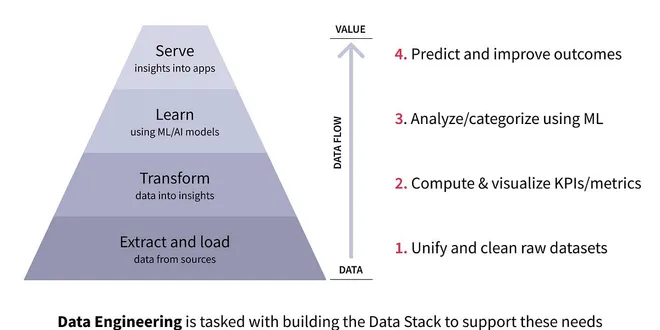

Extract & Load, Transform, Learn, and Serve — Getting Value from Data

Every company wants to deliver high-value data insights, but not every company is ready or able. Too often, they believe the marketing hype around point-and-click, no-code data connectors. Just set…

Read more at Towards Data Science

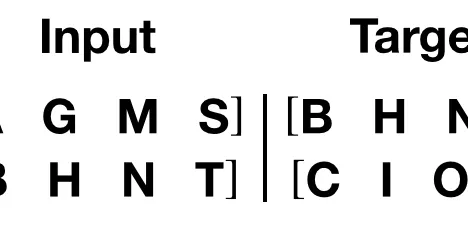

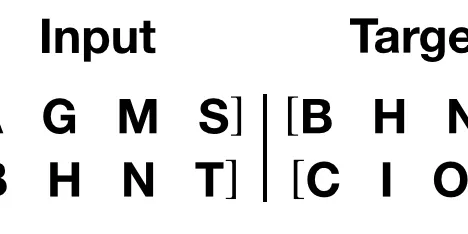

Inferencing the Transformer Model

Last Updated on January 6, 2023. We have seen how to train the Transformer model on a dataset of English and German sentence pairs and how to plot the training and validation loss curves to diagnose th...

Read more at MachineLearningMastery.com

Understanding Transformers from Start to End — A Step-by-Step Math Example

I have already written a detailed blog on how transformers work using a very smal...

Read more at Level Up Coding

Transformers: How Do They Transform Your Data?

Diving into the Transformers architecture and what makes them unbeatable at language tasks. In the rapidly evolving landscape of artificial intelligence and machine learning, one i...

Read more at Towards Data Science

Extract Transform Load (ETL) for Books to Scrape

Web scraping is the process of extracting data from websites. All the work is carried out by a piece of code called a “scraper”. First, it sends a GET request to a specific website. Then, it…

Read more at Analytics Vidhya
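The three ETL stages can be sketched with the standard library alone. This is a minimal illustration, not the article's code: it assumes the page has already been fetched with a GET request (the network step is skipped), and the inline HTML is hypothetical markup loosely modeled on a book-listing page. `html.parser` handles extraction, a small function converts price strings to floats, and an in-memory SQLite table stands in for the load target.

```python
import sqlite3
from html.parser import HTMLParser

# Hypothetical markup standing in for a page already fetched with an HTTP GET.
HTML = """
<article class="product_pod"><h3><a title="Book A">Book A</a></h3><p class="price_color">£51.77</p></article>
<article class="product_pod"><h3><a title="Book B">Book B</a></h3><p class="price_color">£53.74</p></article>
"""


class BookParser(HTMLParser):
    """Extract: collect (title, raw_price) pairs from the markup above."""

    def __init__(self):
        super().__init__()
        self.books = []
        self._title = None
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "title" in attrs:
            self._title = attrs["title"]
        elif tag == "p" and attrs.get("class") == "price_color":
            self._in_price = True

    def handle_data(self, data):
        # Only text inside <p class="price_color"> is treated as a price.
        if self._in_price:
            self.books.append((self._title, data.strip()))
            self._in_price = False


def transform(raw):
    """Transform: strip the currency symbol and convert the price to float."""
    return [(title, float(price.lstrip("£"))) for title, price in raw]


def load(rows):
    """Load: write the cleaned rows into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE books (title TEXT, price REAL)")
    conn.executemany("INSERT INTO books VALUES (?, ?)", rows)
    return conn


parser = BookParser()
parser.feed(HTML)
conn = load(transform(parser.books))
print(conn.execute("SELECT title, price FROM books ORDER BY price").fetchall())
```

Keeping extract, transform, and load as separate functions makes it easy to swap the SQLite target for another store later.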

How to Create and Train a Multi-Task Transformer Model

While working on an AI chatbot project, I did a short review of the available companies that offer NLP models as a service. I was surprised by the cost that some providers charge for basic intent…

Read more at Towards Data Science

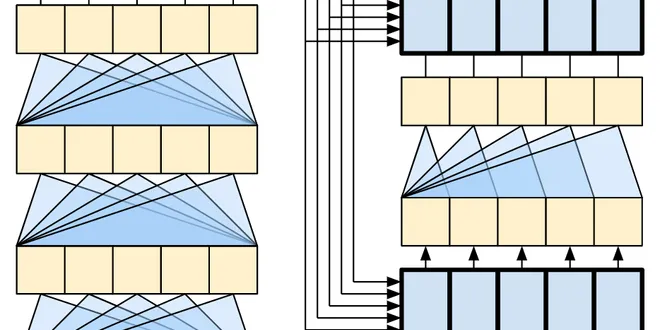

Training Transformer models using Pipeline Parallelism

In this tutorial, we will split a Transformer model across two GPUs and use pipeline parallelism to train the model. The model is exactly the same model used in the Sequence-to-Sequen...

Read more at PyTorch Tutorials

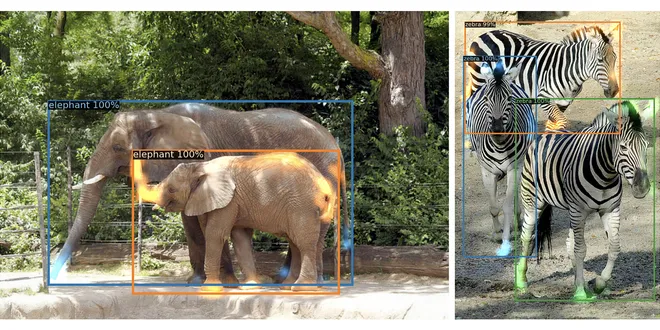

Transformer in CV

The Transformer architecture has achieved state-of-the-art results in many NLP (Natural Language Processing) tasks. One of the main breakthroughs with the Transformer model could be the powerful GPT-3…

Read more at Towards Data Science

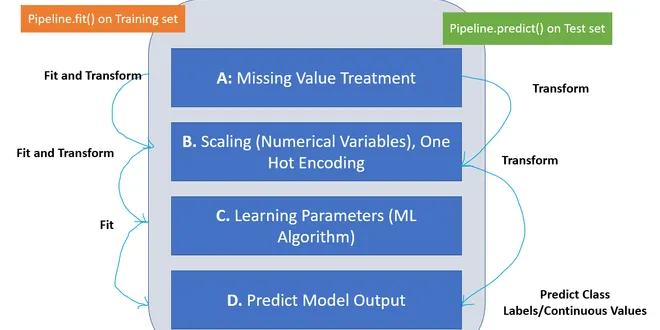

Pipeline and Custom Transformer with a Hands-On Case Study in Python

Pipelines in machine learning turn an end-to-end workflow into a sequence of code steps that automates the entire data treatment and model development process. We can use pipelines to sequentially…

Read more at Towards Data Science
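The idea of chaining steps sequentially can be sketched without any library. The `Pipeline`, `MinMaxScale`, and `Clip` classes below are hypothetical stand-ins that follow the same `fit`/`transform` convention as scikit-learn's `sklearn.pipeline.Pipeline`; they are not its implementation. During `fit`, each step learns from the output of the step before it, which is the property that makes pipelines safe to reuse on new data.

```python
from typing import List, Sequence


class MinMaxScale:
    """Hypothetical step: learn min/max in fit, rescale to [0, 1] in transform."""

    def fit(self, xs: Sequence[float]) -> "MinMaxScale":
        self.lo, self.hi = min(xs), max(xs)
        return self

    def transform(self, xs: Sequence[float]) -> List[float]:
        span = (self.hi - self.lo) or 1.0  # avoid division by zero for constant input
        return [(x - self.lo) / span for x in xs]


class Clip:
    """Hypothetical stateless step: clamp values into [0, 1]."""

    def fit(self, xs: Sequence[float]) -> "Clip":
        return self

    def transform(self, xs: Sequence[float]) -> List[float]:
        return [min(max(x, 0.0), 1.0) for x in xs]


class Pipeline:
    """Run steps in order; each step is fitted on the previous step's output."""

    def __init__(self, steps):
        self.steps = steps

    def fit(self, xs):
        for step in self.steps:
            xs = step.fit(xs).transform(xs)
        return self

    def transform(self, xs):
        for step in self.steps:
            xs = step.transform(xs)
        return xs


pipe = Pipeline([MinMaxScale(), Clip()]).fit([0.0, 5.0, 10.0])
print(pipe.transform([2.5, 12.0, -1.0]))
```

The scaler's min and max are learned once from the training values; unseen values outside that range are then clamped by the second step.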

Extracting and Transforming Data in Python

It is important to be able to extract, filter, and transform data from DataFrames in order to drill into the data that really matters. The pandas library has many techniques that make this process…

Read more at Towards Data Science

Let’s Build a Transformer with TensorFlow

In our previous article, we introduced the Transformer and explained its components: encoder, decoder, attention, etc. ...

Read more at The Pythoneers

TransformerEncoder

TransformerEncoder is a stack of N encoder layers. Users can build the BERT (https://arxiv.org/abs/1810.04805) model with corresponding parameters. Parameters: encoder_layer – an instance of the TransformerEncod...

Read more at PyTorch documentation

Training the Transformer Model

Last Updated on January 6, 2023. We have put together the complete Transformer model, and now we are ready to train it for neural machine translation. We shall use a training dataset for this purpose, ...

Read more at MachineLearningMastery.com

The Transformer Model

A step-by-step breakdown of the Transformer's encoder-decoder architecture. In 2017, Google researchers and developers released the paper "Attention Is All You Need" that highlight...

Read more at Towards Data Science
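The core operation from "Attention Is All You Need" is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. The sketch below implements that formula in plain Python for a single head, assuming small lists of floats for Q, K, and V; it omits masking, multiple heads, and the learned projection matrices of the full model.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors, column by column.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


# Two identical keys get equal weight, so the output is the mean of the values.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 4.0]]))
```

Because the two keys match the query equally, the softmax assigns each a weight of 0.5 and the result is the average of the two value rows.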

A Simple Approach to Creating Custom Transformers Using Scikit-Learn Classes

In this article, I will be explaining how to create a transformer according to our processing needs using Scikit-Learn classes. Preprocessing the data is one of the mo...

Read more at Towards AI

Transformers for Multi-Regression — [PART1]

💎 Transformers as Feature Extractor 💎. The FB3 competition I joined on Kaggle motivated me to write a post about the approaches I tested. Plus, I didn’t find any clear tutorial abou...

Read more at Towards AI

Preparing the data for Transformer pre-training — a write-up

Introduced only a little over a year ago, the best-known incarnation of the Transformer model introduced by Vaswani et al. (2017), the Bidirectional Encoder Representations from Transformers (better…

Read more at Towards Data Science

The Time Series Transformer

“Attention Is All You Need,” they said. Is it a more robust convolution? Is it just a hack to squeeze more learning capacity out of fewer parameters? Is it supposed to be sparse? How did the original…

Read more at Towards Data Science

Training Transformer models using Distributed Data Parallel and Pipeline Parallelism

The PositionalEncoding module injects some information about the relative or absolute position of the tokens in the sequence. The positional encodings have the same dimension as the embed...

Read more at PyTorch Tutorials
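A common choice for such a module is the sinusoidal encoding from the original Transformer paper: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The sketch below computes that table in plain Python so it runs without PyTorch; the function name `positional_encoding` is hypothetical, and adding the result to the token embeddings is left out.

```python
import math
from typing import List


def positional_encoding(max_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal position table of shape (max_len, d_model)."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i is the even dimension index, i.e. 2k
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)      # even dimensions use sine
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)  # odd dimensions use cosine
    return pe


pe = positional_encoding(4, 6)
print(pe[0])  # position 0: sin(0) = 0.0 on even dims, cos(0) = 1.0 on odd dims
```

Each row has the same width as the embedding (`d_model`), which is what allows the encoding to be added element-wise to the token embeddings.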

Transformer Models 101: Getting Started — Part 1

The complex math behind transformer models, in simple words. It is no secret that transformer architecture was a breakthro...

Read more at Towards Data Science

Transformer

A transformer model. Users can modify the attributes as needed. The architecture is based on the paper “Attention Is All You Need”. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Ll...

Read more at PyTorch documentation

Into The Transformer

The data flow, parameters, and dimensions. The Transformer, a neural network architecture introduced in 2017 by researchers at Google, has pro...

Read more at Towards Data Science

The Map Of Transformers

A broad overview of Transformers research. The pace of research in deep learning has accelerated significantly ...

Read more at Towards Data Science