Object detection metrics get messy. They need to capture both the localization and the classification of objects in a picture, which is less straightforward than simple classification accuracy. Because the problem is this complex, several competitions have over the years established variants of the same metric: Average Precision. Unfortunately, it’s easy to get these slightly different implementations confused. How does one navigate this maze of differences between PASCAL VOC, ImageNet and COCO? And even if we make it out, is it all worth it? Is Average Precision even a suitable metric for object detection? Or do we need something else, something that captures more than precision and recall?

Stepping stones: precision and recall

Let’s start with the basics. Object detection models generate a set of detections where each detection consists of coordinates for a bounding box. Commonly models also generate a confidence score for each detection. Our question is how to evaluate these detections given bounding box annotations over the evaluation dataset.

With any new experiment, one of the most important questions is what exactly we want to measure. We could view object detection as a special case of a retrieval model. Precision and recall have a direct translation to object detection: precision tells us how many of the bounding boxes we detected are actual objects, while recall tells us how many of the actual objects we found. If we assume our model gives confidence scores for all detections, we can rank all of our detections by this confidence, giving us the ability to compute precision and recall at a given rank K. When using the model in an application we usually choose a fixed confidence threshold c (often c=0.5), which we use to filter our detections down to only the confident ones. Let’s fix the rank K to match where c=0.5. The resulting precision and recall give us exactly the performance of our model as we would use it in the application.

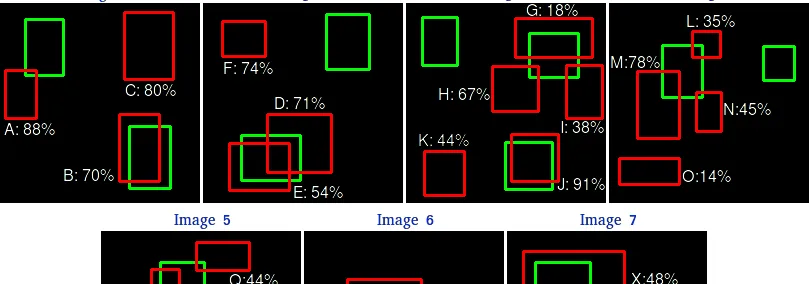
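To make the thresholding step concrete, here is a minimal sketch of precision and recall at a confidence threshold c. It assumes we already know which detections are true positives (how to decide that comes next); the function name, the example scores and the convention of returning a precision of 1.0 when nothing is detected are our own illustrative choices.

```python
def precision_recall_at_threshold(scores, is_true_positive, num_ground_truth, c=0.5):
    """Precision and recall using only the detections with confidence >= c."""
    # Keep the true/false positive flags of the detections that survive the filter.
    kept = [tp for score, tp in zip(scores, is_true_positive) if score >= c]
    num_tp = sum(kept)
    # Assumption: with no detections left we report a precision of 1.0.
    precision = num_tp / len(kept) if kept else 1.0
    recall = num_tp / num_ground_truth if num_ground_truth else 0.0
    return precision, recall


# Hypothetical example: five detections on a dataset with four annotated objects.
scores = [0.9, 0.8, 0.6, 0.4, 0.3]
is_tp = [True, True, False, True, False]
precision, recall = precision_recall_at_threshold(scores, is_tp, num_ground_truth=4)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

Lowering c to 0.4 in this example would let the fourth detection through, raising both precision and recall to 0.75. That is exactly the dependence on a single threshold that the rest of this article tries to get rid of.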

The final piece of this puzzle is how we determine which detections are true positives and which are false positives. Spoiler warning: this is one of the trickiest components of evaluating object detection. The guiding principle in all state-of-the-art metrics is the so-called Intersection-over-Union (IoU) overlap measure. It is quite literally defined as the intersection over union of the detection bounding box and the ground truth bounding box:

(Source: Adriana Kovashka, University of Pittsburgh via https://www.pyimagesearch.com/2016/11/07/intersection-over-union-iou-for-object-detection)

We count the detection as a true positive if the IoU overlap is above a fixed threshold r, which is usually r=0.5 . Note though that this value is quite arbitrarily set. There is no real justification of this specific value as far as we know. Even though it seems to work well in practice for relatively large faces, it is a known problem that for very small objects (e.g. 10×10 pixels) the IoU doesn’t work well. This seems to be because small variations in the predicted pixel coordinates can make the overlaps significantly worse on tiny objects.
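As a sketch of how this could look in code, the block below computes IoU for axis-aligned boxes and labels detections as true or false positives. The box format (x_min, y_min, x_max, y_max), the function names and the greedy one-to-one matching in order of confidence are assumptions for illustration; the official evaluation kits differ in details such as how duplicate detections and a tie at exactly r are handled.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(detections, ground_truths, r=0.5):
    """Label (score, box) detections as TP/FP, visiting them by descending score.

    Each ground truth box can be claimed by at most one detection.
    Returns a list of (score, is_true_positive, best_iou) tuples.
    """
    claimed = set()
    labels = []
    for score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt_box in enumerate(ground_truths):
            if idx in claimed:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        is_tp = best_idx is not None and best_iou >= r
        if is_tp:
            claimed.add(best_idx)
        labels.append((score, is_tp, best_iou))
    return labels


# Hypothetical example: the same 2-pixel shift on a 10x10 and a 100x100 object.
ground_truths = [(0, 0, 10, 10), (100, 100, 200, 200)]
detections = [(0.9, (2, 2, 12, 12)), (0.8, (102, 102, 202, 202))]
labels = match_detections(detections, ground_truths)
for score, is_tp, overlap in labels:
    print(f"score={score} IoU={overlap:.3f} true_positive={is_tp}")
```

The example shows the small-object problem from the text: a shift of two pixels drops the 10×10 box to an IoU of about 0.47 (a false positive at r=0.5), while the same shift leaves the 100×100 box at about 0.92.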

This is not the only problem with using precision and recall. Recall (pun intended) that we had to cut our predictions at a confidence threshold to get these metrics. This means the precision and recall we get only represent one setting of the model. We trained our model to give us confidences, so theoretically we have access to a whole range of models each with its own confidence threshold. If we could get rid of this filtering by confidence threshold, then we could capture the performance of the model over the entire spectrum, giving a much more complete picture of our model.

Average Precision

Many improvements in Computer Vision have come from submissions to one of the many competitions hosted around conferences. Arguably the first major object detection competition was the 2007 PASCAL Visual Object Classes competition (VOC) [Everingham 2010]. This competition ran through 2012, when the authors started cooperating with the new ImageNet ILSVRC competition. Both competitions performed evaluation on their own dataset, but as the new ImageNet competition offered a much larger and shinier dataset to train on, it was soon much more popular than PASCAL. Deep Computer Vision started gaining traction around that time, for which the ImageNet competition deserves much of the credit, with competitors such as the era-defining AlexNet publication [Krizhevsky 2012]. In 2018 the most popular successor of ImageNet is Microsoft’s Common Objects in Context (COCO) competition, expanding the training dataset size even further than ImageNet.

Of course, competitions need metrics to determine winners. The most widely used metric for object detection came out of the first of these competitions, the PASCAL VOC competition, whose authors decided to use the measure of Average Precision (AP) [Salton & McGill 1983]. They defined it as the area under the precision-recall curve obtained by sampling precision at eleven discrete recall values (0.0, 0.1, ..., 1.0). In the case of a multi-class object detector, precision and recall are computed for each object class separately, and the final metric, mean Average Precision (mAP), is the mean of the average precision of each class.
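The eleven-point definition can be sketched as follows. We assume the detections of one class have already been labeled as true or false positives, and we use the usual interpolation in which the precision at recall level t is the highest precision found at any recall of at least t. The function names and example numbers are illustrative, not taken from the VOC development kit.

```python
import numpy as np


def precision_recall_curve(scores, is_true_positive, num_ground_truth):
    """Precision and recall after each detection, ranked by descending confidence."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    precision = cum_tp / (cum_tp + cum_fp)
    recall = cum_tp / num_ground_truth
    return precision, recall


def eleven_point_ap(precision, recall):
    """Mean of the interpolated precision at recall 0.0, 0.1, ..., 1.0."""
    total = 0.0
    for t in np.linspace(0.0, 1.0, 11):
        reached = recall >= t
        # If the detector never reaches recall t, that level contributes 0.
        total += precision[reached].max() if reached.any() else 0.0
    return total / 11.0


# Hypothetical example for one class: five detections, four annotated objects.
scores = [0.9, 0.8, 0.6, 0.4, 0.3]
is_tp = [True, True, False, True, False]
precision, recall = precision_recall_curve(scores, is_tp, num_ground_truth=4)
ap = eleven_point_ap(precision, recall)
print(f"11-point AP = {ap:.4f}")
```

For a multi-class detector you would run this once per class and take the mean of the resulting AP values to get mAP.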

The 2012 iteration of the VOC competition made a slight change to the computation of AP by sampling precision at all unique recall values instead of the eleven pre-defined recall values. The competitions that came after VOC continued the use of AP as defined by VOC 2012, though each in their own way. ILSVRC only changed the IoU overlap threshold for tiny objects, effectively increasing the size of the ground truth annotation slightly.
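Both changes described above are small enough to sketch. `all_point_ap` is the commonly used all-points variant: make precision monotonically non-increasing, then sum the area of the rectangles between consecutive unique recall values. `ilsvrc_iou_threshold` reflects the relaxed threshold as we recall it from the ILSVRC paper [Russakovsky 2014], min(0.5, wh / ((w + 10)(h + 10))) for a ground truth box of w×h pixels. Treat the function names, the example numbers and the exact form of that rule as assumptions, and check the official development kits before relying on the details.

```python
import numpy as np


def all_point_ap(precision, recall):
    """Area under the interpolated precision-recall curve over all recall values."""
    # Sentinels so the curve starts at recall 0 and ends at recall 1.
    rec = np.concatenate(([0.0], recall, [1.0]))
    prec = np.concatenate(([0.0], precision, [0.0]))
    # Running maximum from right to left makes precision non-increasing.
    prec = np.maximum.accumulate(prec[::-1])[::-1]
    # Sum rectangle areas wherever the recall value changes.
    idx = np.where(rec[1:] != rec[:-1])[0]
    return float(np.sum((rec[idx + 1] - rec[idx]) * prec[idx + 1]))


def ilsvrc_iou_threshold(width, height):
    """IoU threshold for a ground truth box of width x height pixels (assumed rule)."""
    return min(0.5, (width * height) / ((width + 10) * (height + 10)))


# Hypothetical precision/recall values after each of five ranked detections.
precision = np.array([1.0, 1.0, 2 / 3, 0.75, 0.6])
recall = np.array([0.25, 0.5, 0.5, 0.75, 0.75])
ap = all_point_ap(precision, recall)
print(f"all-point AP = {ap:.4f}")
print(ilsvrc_iou_threshold(10, 10), ilsvrc_iou_threshold(100, 100))
```

Under this rule a 10×10 object only needs an IoU of 0.25, while a 100×100 object keeps the usual 0.5.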

As for the current state-of-the-art competition: COCO made a much bigger change to the metric, but before we dig into that, we will have to address another issue with AP.

Penalizing localizations

Recall that the assignment of true and false positives depends on the IoU overlap between detection and ground truth. AP uses a fixed threshold on this IoU overlap, but doesn’t revisit the localization aspect of the detection model anywhere else in its definition. The only way a model gets penalized for having bad localizations is if they are so bad that they turn true positives into false positives.

This implicit penalization might not be what we are looking for. Sometimes we care so much about localization that we want to explicitly measure it. For example, take this image of the detections of two different object detectors on a cat.

(Source: https://www.catster.com/cats-101/savannah-cat-about-this-breed)

That tail can be tricky to detect and localize correctly. The question is: do we care about including the tail in the bounding box? Maybe your application is classifying cats in pictures into cat breeds. The shape and color of the tail might be important for that task, so you don’t want to lose the tail in detection. Maybe your application is part of an autonomous vehicle that needs to spot children and pets on the street to know when to apply the emergency brake. In that case, we care more about finding the cat than knowing where exactly its tail is, so we probably care less about the specific localization.

AP in its original form doesn’t allow for explicitly penalizing localization. This is a problem if you’re evaluating your detector for a localization-sensitive application. One way we could change AP though is by changing the IoU threshold used to determine true and false positives. If we increase the threshold, models that have worse localization will suffer in AP, since they will start to produce more false positives. This is the approach of the COCO competition, though instead of just upping the threshold they require AP to be computed over the IoU thresholds 0.5, 0.55, ..., 0.95 and then averaged. This averaged number is denoted as AP, while the original VOC AP (r=0.5) is denoted as $AP_{50}$.

Many papers also report $AP_{75}$ (which is the AP at r=0.75). Comparisons between $AP_{50}$ and $AP_{75}$ are used to show how models improve or degrade with stricter IoU overlap thresholds, as a surrogate for explicit localization penalties. This new AP still doesn’t explicitly penalize localization though. There is simply no penalty term for localization in AP, so it is impossible to get an explicit representation of the localization performance.
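A simplified sketch of the COCO-style averaging is shown below. It assumes every detection has already been paired with at most one ground truth box and carries the IoU of that pairing (0.0 if unmatched), so that a detection is a true positive at threshold r when its IoU is at least r. The official COCO implementation redoes the matching for every threshold and samples the curve at 101 recall points, so take this as an illustration of the idea rather than a drop-in replacement. All names and numbers are hypothetical.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for one class at one IoU threshold."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    precision = cum_tp / np.arange(1, len(tp) + 1)
    recall = cum_tp / num_ground_truth
    rec = np.concatenate(([0.0], recall, [1.0]))
    prec = np.concatenate(([0.0], precision, [0.0]))
    prec = np.maximum.accumulate(prec[::-1])[::-1]
    idx = np.where(rec[1:] != rec[:-1])[0]
    return float(np.sum((rec[idx + 1] - rec[idx]) * prec[idx + 1]))


def ap_over_iou_thresholds(scores, matched_ious, num_ground_truth):
    """Mean AP over IoU thresholds 0.5, 0.55, ..., 0.95, plus the per-threshold APs."""
    ious = np.asarray(matched_ious, dtype=float)
    per_threshold = {}
    for r in np.linspace(0.5, 0.95, 10):
        key = round(float(r), 2)
        per_threshold[key] = average_precision(scores, ious >= r, num_ground_truth)
    return float(np.mean(list(per_threshold.values()))), per_threshold


# Hypothetical example: four detections, each matched to one of four objects.
scores = [0.9, 0.8, 0.7, 0.6]
matched_ious = [0.92, 0.67, 0.81, 0.58]
coco_ap, per_threshold = ap_over_iou_thresholds(scores, matched_ious, num_ground_truth=4)
print(f"AP={coco_ap:.3f} AP50={per_threshold[0.5]:.3f} AP75={per_threshold[0.75]:.3f}")
```

In this example the detector is perfect at r=0.5 but loses more than half of its AP at r=0.75, which is the kind of gap the comparison between the two numbers is meant to expose.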

Localization Recall Precision

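This is where we turn to a recently proposed metric called Localization Recall Precision (LRP) [Oksuz 2018]. At its core, it simply adds localization as an explicit third term to the recall and precision found in AP. The resulting metric works a bit differently from AP though. First, all three components are computed for each unique confidence threshold c of the model. The overall metric is actually an error metric (so lower is better), so the recall ($LRP_{FN}$) and precision ($LRP_{FP}$) components have to be inverted:

$$LRP_{FN} = 1 - \text{Recall} = \frac{N_{FN}}{N_{TP} + N_{FN}}, \qquad LRP_{FP} = 1 - \text{Precision} = \frac{N_{FP}}{N_{TP} + N_{FP}}$$

Here $N_{TP}$, $N_{FP}$ and $N_{FN}$ are the numbers of true positives, false positives and false negatives at confidence threshold c.

The localization component is quite straightforward. It is simply the average inverted IoU of the true positive detections $x_i$, each matched with its ground truth $y_i$:

$$LRP_{IoU} = \frac{1}{N_{TP}} \sum_{i=1}^{N_{TP}} \left(1 - \text{IoU}(x_i, y_i)\right)$$

The overall LRP for a given confidence threshold is a normalized weighted sum of all three components, where r is the IoU threshold used to decide on true positives:

$$LRP = \frac{1}{N_{TP} + N_{FP} + N_{FN}} \left( \frac{N_{TP}}{1 - r}\, LRP_{IoU} + (N_{TP} + N_{FP})\, LRP_{FP} + (N_{TP} + N_{FN})\, LRP_{FN} \right)$$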
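As a worked example, the sketch below computes the three components and the overall LRP at a single confidence threshold. It follows our reading of [Oksuz 2018]: the localization term is weighted by N_TP / (1 - r), the inverted precision by the number of detections and the inverted recall by the number of ground truth objects, and the sum is normalized by N_TP + N_FP + N_FN. The function name and the example values are hypothetical.

```python
def lrp_components(tp_ious, num_fp, num_fn, r=0.5):
    """Return (lrp, localization_error, fp_error, fn_error) at one confidence threshold.

    tp_ious: IoU of every true positive detection with its matched ground truth.
    num_fp / num_fn: number of false positives / false negatives.
    r: IoU threshold that was used to decide on true positives.
    """
    num_tp = len(tp_ious)
    total = num_tp + num_fp + num_fn
    if total == 0:
        return 0.0, 0.0, 0.0, 0.0
    num_detections = num_tp + num_fp
    num_objects = num_tp + num_fn
    # Average inverted IoU of the true positives.
    loc_error = sum(1.0 - v for v in tp_ious) / num_tp if num_tp else 0.0
    # Inverted precision and inverted recall.
    fp_error = num_fp / num_detections if num_detections else 0.0
    fn_error = num_fn / num_objects if num_objects else 0.0
    weighted = (
        num_tp / (1.0 - r) * loc_error
        + num_detections * fp_error
        + num_objects * fn_error
    )
    return weighted / total, loc_error, fp_error, fn_error


# Hypothetical example: three true positives, one false positive, two missed objects.
lrp, loc_error, fp_error, fn_error = lrp_components([0.9, 0.8, 0.7], num_fp=1, num_fn=2)
print(f"LRP={lrp:.3f} loc={loc_error:.3f} fp={fp_error:.3f} fn={fn_error:.3f}")
```

Note how the 1 / (1 - r) factor rescales the localization error: a true positive that barely passes the IoU threshold costs as much as a false positive or a missed object.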

We compute LRP for each confidence threshold from 0.00 to 1.00 in steps of 0.01, then plot these LRP values (with confidence thresholds on the x-axis) to get the LRP-curve. See these example curves, where the rightmost curve is the LRP-curve and the others are curves of the three components:

(Source: [Oksuz 2018])

At this point, we have a curve to represent our metric. However, we would very much like to have a single number. As we know, AP uses the area under the PR-curve to turn the curve into a single metric. For AP this is necessary because a single point doesn’t reflect the performance of the entire model. Even if we had a method to choose a single point, that point would be represented by two values: precision and recall. LRP doesn’t have the latter of these problems, as a single point on the LRP curve is a single LRP value. The authors of the method therefore define the lowest point of the LRP curve to be the optimal LRP (oLRP). A benefit of this method is that as a side-effect we obtain the ideal confidence threshold c to use with this model, which is the confidence threshold that supposedly best balances the three components of LRP.
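The sweep over confidence thresholds can be sketched like this. As a simplification we assume each detection comes with the IoU of its matched ground truth (0.0 if it has none), so that filtering by confidence does not change the matching. The LRP value is computed in the compact form (sum of normalized localization errors + N_FP + N_FN) / (N_TP + N_FP + N_FN), following our reading of [Oksuz 2018]. Function names, dictionary keys and example numbers are hypothetical, and this is not the official implementation.

```python
import numpy as np


def lrp_with_components(tp_ious, num_fp, num_fn, r=0.5):
    """LRP and its three components for one confidence threshold."""
    num_tp = len(tp_ious)
    total = num_tp + num_fp + num_fn
    loc = float(np.mean(1.0 - tp_ious)) if num_tp else 0.0
    fp_err = num_fp / (num_tp + num_fp) if num_tp + num_fp else 0.0
    fn_err = num_fn / (num_tp + num_fn) if num_tp + num_fn else 0.0
    value = (num_tp * loc / (1.0 - r) + num_fp + num_fn) / total if total else 0.0
    return {"lrp": value, "localization": loc, "fp_error": fp_err, "fn_error": fn_err}


def optimal_lrp(scores, matched_ious, num_ground_truth, r=0.5):
    """Sweep thresholds 0.00, 0.01, ..., 1.00 and return the lowest point of the curve."""
    scores = np.asarray(scores, dtype=float)
    ious = np.asarray(matched_ious, dtype=float)
    best = None
    for c in np.linspace(0.0, 1.0, 101):
        keep = scores >= c
        tp_ious = ious[keep & (ious >= r)]
        num_fp = int(np.sum(keep & (ious < r)))
        num_fn = num_ground_truth - len(tp_ious)
        result = lrp_with_components(tp_ious, num_fp, num_fn, r)
        result["threshold"] = float(c)
        # Strict comparison keeps the lowest threshold among equally good ones.
        if best is None or result["lrp"] < best["lrp"]:
            best = result
    return best


# Hypothetical example: four detections (one unmatched) on three annotated objects.
scores = [0.93, 0.84, 0.615, 0.305]
matched_ious = [0.9, 0.8, 0.0, 0.7]
best = optimal_lrp(scores, matched_ious, num_ground_truth=3)
print(best)
```

Because the components are stored alongside every LRP value, the result also tells us what the localization, false positive and false negative errors are at the chosen threshold.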

However, there is a downside to this metric. Earlier we said that the main motivation to use AP was to have our metric not just measure a single instance of our model but rather the entire spectrum of models we obtain by varying the confidence threshold. It seems like oLRP actually does not satisfy that property, as oLRP gives the LRP of the single best instance of our model. In other words, whereas AP integrates the performance of all models in the final metric, oLRP uses the performance of all models to determine the best model, but then only measures the performance of that single model.

There are many arguments to be made about which of these approaches is a more sound way to compare object detectors. One could argue that only the best instance of a model is important, as that is the one that will see usage. However, competitions might value methods with consistent results across all settings of a model over methods that could reward models that “overfit” to a specific setting. In the end, both approaches have their merit. It’s too early to say anything about the possible adoption of LRP as an object detection metric. The academic community will have to figure this out, so we will have to leave you without an answer for now.

So what use does oLRP have as an object detection metric? The biggest value in oLRP is actually in its decomposition into its component metrics. The individual recall, precision and localization error metrics are intuitive debugging measures to show alongside AP, for example by showing them for the oLRP model. Implementation is pretty simple: keep track of the components for each confidence threshold, then retrieve and show the component metric values for the oLRP model. Most importantly, this provides you with an explicit measure for the localization performance of your model, which is the measure we set out to find. Even though LRP might not be ideal as an overall object detection metric, at least it has use in this way.

Conclusion

We have reviewed two main metrics for object detection: Average Precision (AP) and Localization Recall Precision (LRP). State-of-the-art methods are expected to publish results using AP, mainly because the main competitions have always built on it and continue to do so. The new LRP metric serves its own purpose, which is mainly to provide explicit component metrics. The side-effect of LRP, getting the best confidence threshold, is also very useful. Overall though, the usage of LRP will possibly be limited to the role of providing additional analysis, at least for now. Time will tell if a bigger role in object detection literature is in its future.

Let’s get personal. What should you use? First of all, whatever metric you use, use the official implementations. They are often awfully written (why can’t researchers write readable code?!) but at least your results will be directly comparable against published results. As for the metrics, use AP to measure your progress and use LRP for additional analysis. You can get both of them from the official implementation of LRP, which is a fork of the official COCO AP implementation and includes both AP and LRP.

Finally, metrics are of course just a part of the puzzle. Figure out the unique requirements of your model, and use the metrics at hand to define the key results that satisfy them. Always keep in mind that metrics are just there to give you answers. You still need to ask the questions.

References

- Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., … Fei-Fei, L. (2014). ImageNet Large Scale Visual Recognition Challenge. Retrieved from http://arxiv.org/abs/1409.0575

- Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems, 1–9.

- Salton, G., & McGill, M. J. (1983). Introduction to modern information retrieval. McGraw-Hill. Retrieved from https://dl.acm.org/citation.cfm?id=576628

- Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J., & Zisserman, A. (2010). The PASCAL Visual Object Classes (VOC) Challenge. International Journal of Computer Vision, 88, 303–338. https://doi.org/10.1007/s11263-009-0275-4

- Oksuz, K., Cam, B. C., Akbas, E., & Kalkan, S. (2018). Localization Recall Precision (LRP): A New Performance Metric for Object Detection. Retrieved from http://arxiv.org/abs/1807.01696