multiple layers

Multilayer Perceptrons

In this chapter, we will introduce your first truly deep network. The simplest deep networks are called multilayer perceptrons, and they consist of multiple layers of neurons each fully connected to ...

📚 Read more at Dive into Deep Learning Book
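As an illustration of "multiple layers of neurons each fully connected", here is a minimal forward-pass sketch in plain Python. It assumes ReLU as the activation between layers, small Gaussian initial weights with zero biases, and raw (unnormalized) outputs from the last layer. The helper names `dense`, `relu`, `init_mlp` and `mlp_forward` are hypothetical and do not come from the book's code.

```python
import random

def dense(x, weights, bias):
    """Fully connected layer: every output unit reads every input.

    weights is a list of n_out rows, each of length n_in; bias has n_out entries.
    """
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    # Elementwise max(0, v): the nonlinearity assumed between layers.
    return [max(0.0, vi) for vi in v]

def mlp_forward(x, layers):
    """Apply each (weights, bias) pair in turn, with ReLU after every
    layer except the last, whose raw outputs are returned."""
    for i, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x

def init_mlp(sizes, seed=0):
    """Small random weights and zero biases for layer widths `sizes`,
    e.g. [4, 8, 8, 3] = 4 inputs, two hidden layers of 8 units, 3 outputs."""
    rng = random.Random(seed)
    return [([[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

layers = init_mlp([4, 8, 8, 3])
print(len(mlp_forward([1.0, 2.0, 3.0, 4.0], layers)))  # 3
```

Making the depth a list of layer widths shows that "deep" only means more entries in `sizes`; the per-layer computation stays the same.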

Implementation of Multilayer Perceptrons

Multilayer perceptrons (MLPs) are not much more complex to implement than simple linear models. The key conceptual difference is that we now concatenate multiple layers. 5.2.1. Implementation from Scr...

📚 Read more at Dive into Deep Learning Book
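One caveat about concatenating layers: two affine layers stacked with nothing in between collapse into a single affine layer. This happens because W2(W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2), so a nonlinearity is needed between them for the extra layer to add expressive power. The sketch below checks this numerically with arbitrary hand-picked weights. The helper names and values are illustrative only.

```python
import math

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    # (m x k) @ (k x n) -> (m x n)
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def affine(W, b, x):
    return [v + bi for v, bi in zip(matvec(W, x), b)]

W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # 3 x 2: first layer
b1 = [0.5, -1.0, 2.0]
W2 = [[1.0, 0.0, -1.0], [2.0, 1.0, 0.5]]    # 2 x 3: second layer
b2 = [0.0, 1.0]
x = [1.5, -2.0]

# Two stacked affine layers with no activation in between...
two_layer = affine(W2, b2, affine(W1, b1, x))

# ...equal one affine layer with W = W2 W1 and b = W2 b1 + b2.
W = matmul(W2, W1)
b = [v + bi for v, bi in zip(matvec(W2, b1), b2)]
one_layer = affine(W, b, x)
print(all(math.isclose(p, q) for p, q in zip(two_layer, one_layer)))  # True

# Inserting a ReLU between the layers breaks the equivalence.
with_relu = affine(W2, b2, [max(0.0, v) for v in affine(W1, b1, x)])
print(two_layer, with_relu)  # [-6.5, 0.25] [-4.5, 4.25]
```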

Multi layer Perceptron (MLP) Models on Real World Banking Data

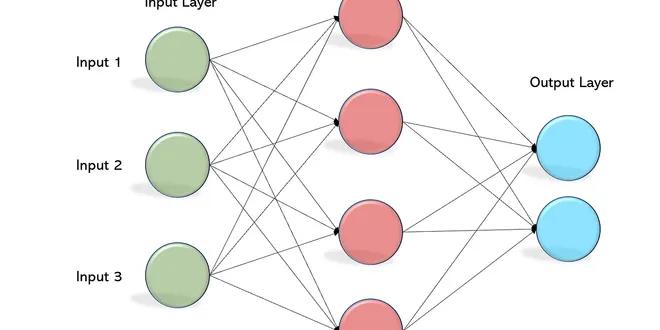

A multilayer perceptron (MLP) is a class of feedforward artificial neural network. An MLP consists of at least three layers of nodes: an input layer, a hidden layer, and an output layer. Except for the...

📚 Read more at Becoming Human: Artificial Intelligence Magazine
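With input, hidden, and output layers of nodes, the input layer holds no parameters. Each later layer adds an (n_out x n_in) weight matrix and n_out biases. The small helper below counts them, which is a quick sanity check when sizing an MLP for tabular data such as banking records. The function name and the 20-64-1 layer widths are hypothetical, chosen only for illustration.

```python
def mlp_param_count(sizes):
    """Number of weights and biases in a fully connected MLP.

    sizes lists the width of each layer of nodes, input first and output last,
    e.g. [n_inputs, n_hidden, n_outputs] for the minimal three-layer MLP.
    """
    # One weight matrix plus one bias vector between each consecutive pair of layers.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

# Hypothetical tabular setup: 20 input features, 64 hidden units, 1 output.
print(mlp_param_count([20, 64, 1]))  # 1409
```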

Layers and Modules

When we first introduced neural networks, we focused on linear models with a single output. Here, the entire model consists of just a single neuron. Note that a single neuron (i) takes some set of inp...

📚 Read more at Dive into Deep Learning Book
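To connect the single-neuron view with layers and modules, here is a rough plain-Python sketch. A neuron computes a weighted sum of its inputs plus a bias, giving one scalar. A layer is several neurons reading the same input. A module can be built by chaining other modules in order. The classes `Neuron`, `Layer`, `ReLU` and `Sequential` are hypothetical stand-ins written for this example, not any framework's API.

```python
class Neuron:
    """One unit: takes a vector of inputs, returns one scalar output.
    Its weights and bias are the parameters training would update."""
    def __init__(self, weights, bias):
        self.weights = list(weights)
        self.bias = bias

    def __call__(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

class Layer:
    """A fully connected layer: several neurons reading the same input."""
    def __init__(self, neurons):
        self.neurons = neurons

    def __call__(self, x):
        return [n(x) for n in self.neurons]

class ReLU:
    """Elementwise nonlinearity with no parameters."""
    def __call__(self, x):
        return [max(0.0, v) for v in x]

class Sequential:
    """A module built from other modules, applied one after another."""
    def __init__(self, *modules):
        self.modules = modules

    def __call__(self, x):
        for m in self.modules:
            x = m(x)
        return x

# A single neuron is a linear model with one output: 2*3 - 1*1 + 0.5.
neuron = Neuron([2.0, -1.0], 0.5)
print(neuron([3.0, 1.0]))  # 5.5

# Two-layer network assembled from the same building blocks.
net = Sequential(
    Layer([Neuron([1.0, 1.0], 0.0), Neuron([1.0, -1.0], 0.0)]),
    ReLU(),
    Layer([Neuron([1.0, 2.0], -1.0)]),
)
print(net([3.0, 1.0]))  # [7.0]
print(net([1.0, 3.0]))  # [3.0]
```

Because every piece is called the same way, a whole network can be nested inside another `Sequential` just like a single layer.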

Multilayer perceptrons for digit recognition with Core APIs

This notebook uses the TensorFlow Core low-level APIs to build an end-to-end machine learning workflow from scratch. Visit the Core APIs overview to learn more about TensorFlow Core and its intended u...

📚 Read more at TensorFlow Guide