Ahead of AI

“Ahead of AI” examines how to architect enterprise-level AI systems, focusing on the challenges of implementing generative AI technologies and the solutions to them. It explores how live data sources, such as APIs, can extend the capabilities of AI models, and argues that AI systems need to move beyond static data libraries and adapt to the dynamic nature of big data. It also stresses precision in AI applications, in particular the role of context and rich data indexes in optimizing AI performance.

Categories of Inference-Time Scaling for Improved LLM Reasoning

And an Overview of Recent Inference-Scaling Papers

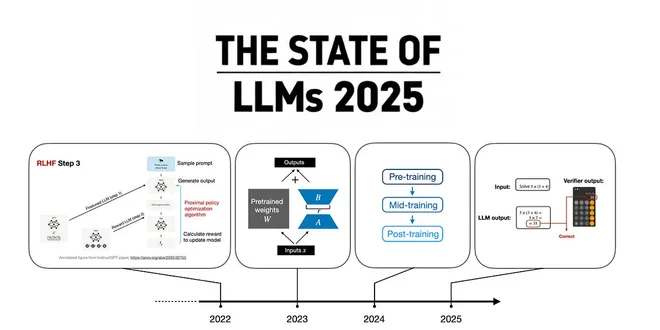

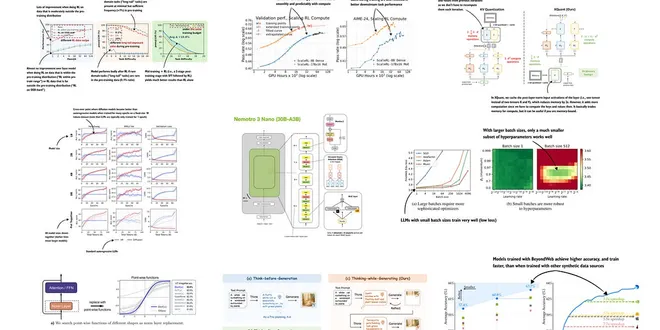

The State Of LLMs 2025: Progress, Problems, and Predictions

A 2025 review of large language models, from DeepSeek R1 and RLVR to inference-time scaling, benchmarks, architectures, and predictions for 2026.

LLM Research Papers: The 2025 List (July to December)

In June, I shared a bonus article with my curated and bookmarked research paper lists to the paid subscribers who make this Substack possible.

A Technical Tour of the DeepSeek Models from V3 to V3.2

Understanding How DeepSeek's Flagship Open-Weight Models Evolved

Beyond Standard LLMs

Linear Attention Hybrids, Text Diffusion, Code World Models, and Small Recursive Transformers

Understanding the 4 Main Approaches to LLM Evaluation (From Scratch)

Multiple-Choice Benchmarks, Verifiers, Leaderboards, and LLM Judges with Code Examples

Understanding and Implementing Qwen3 From Scratch

A Detailed Look at One of the Leading Open-Source LLMs

From GPT-2 to gpt-oss: Analyzing the Architectural Advances

And How They Stack Up Against Qwen3

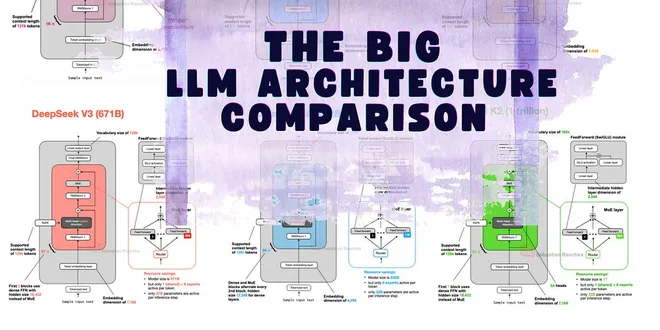

The Big LLM Architecture Comparison

From DeepSeek-V3 to Kimi K2: A Look At Modern LLM Architecture Design

LLM Research Papers: The 2025 List (January to June)

A topic-organized collection of 200+ LLM research papers from 2025

Understanding and Coding the KV Cache in LLMs from Scratch

KV caches are one of the most critical techniques for efficient inference in LLMs in production.

Coding LLMs from the Ground Up: A Complete Course

Why build LLMs from scratch? It's probably the best and most efficient way to learn how LLMs really work. Plus, many readers have told me they had a lot of fun doing it.
