Dropout

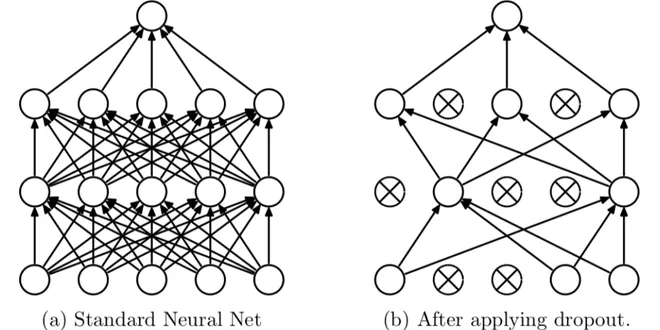

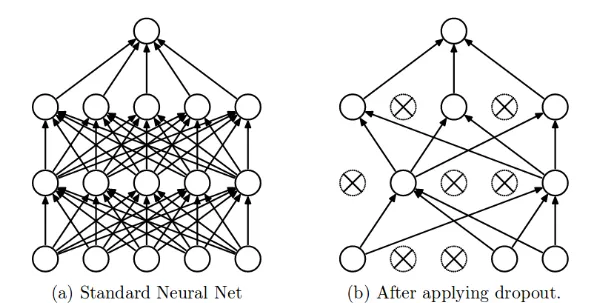

Dropout is a regularization technique used in neural networks to prevent overfitting. During each training iteration it randomly “drops out” a subset of neurons by setting their outputs to zero, so the network cannot rely too heavily on any single neuron and is pushed to learn more robust features. A dropout rate, usually written p, sets the probability that each neuron is dropped; at inference time dropout is disabled and all neurons are used. Although dropout was once the default choice for regularization, it now competes with techniques such as batch normalization. It nonetheless remains a simple and effective way to improve generalization in many deep learning applications.
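The mechanism described above can be sketched in a few lines of plain Python. This is a minimal illustration, not any library's implementation: the function name `dropout`, its `training` flag, and the `rng` parameter are hypothetical, and it assumes the common "inverted dropout" convention in which surviving activations are rescaled by 1/(1 - p) during training so that nothing needs to change at inference time.

```python
import random


def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout on a flat list of activations (illustrative sketch).

    Each element is zeroed independently with probability p; survivors are
    scaled by 1 / (1 - p) so the expected value of each output is unchanged.
    """
    if not 0.0 <= p < 1.0:
        # Restricting p to [0, 1) is a choice made for this sketch.
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference time dropout is a no-op.
        return list(values)
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]


# Usage: the same activations in training mode and in inference mode.
rng = random.Random(0)  # seeded only to make the run repeatable
activations = [1.0] * 10
print(dropout(activations, p=0.3, rng=rng))         # some zeros, rest rescaled
print(dropout(activations, p=0.3, training=False))  # unchanged copy
```

Rescaling during training rather than at inference keeps the inference path identical to a network without dropout, which is why this variant is the one usually implemented.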

Dropout

During training, randomly zeroes some of the elements of the input tensor with probability p using samples from a Bernoulli distribution. Each channel will be zeroed out independently on every forward...

📚 Read more at PyTorch documentation
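The Bernoulli formulation in the excerpt above can be written out as a short expectation argument. It assumes, beyond what the truncated excerpt shows, that surviving elements are rescaled by 1/(1 - p) during training; the symbols $x_k$, $m_k$, and $y_k$ are introduced here for illustration only.

$$
m_k \sim \mathrm{Bernoulli}(1 - p), \qquad
y_k = \frac{m_k \, x_k}{1 - p}, \qquad
\mathbb{E}[y_k] = \frac{(1 - p)\, x_k}{1 - p} = x_k
$$

Under that assumption each output matches its input in expectation, so the layer can be switched off at evaluation time without any compensating rescale.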

Dropout is Drop-Dead Easy to Implement

We’ve all heard of dropout. Historically it’s one of the most famous ways of regularizing a neural network, though nowadays it’s fallen somewhat out of favor and has been replaced by batch…

📚 Read more at Towards Data Science

An Intuitive Explanation to Dropout

In this article, we will explore the intuition behind dropout, how it is used in neural networks, and how to implement it in Keras.

📚 Read more at Towards Data Science

Dropout3d

Randomly zero out entire channels (a channel is a 3D feature map, e.g., the $j$-th channel of the $i$-th sample in the batched input is a 3D tensor $\text{input}[i, j]$...

📚 Read more at PyTorch documentation

Dropout2d

Randomly zero out entire channels (a channel is a 2D feature map, e.g., the $j$-th channel of the $i$-th sample in the batched input is a 2D tensor $\text{input}[i, j]$...

📚 Read more at PyTorch documentation
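To make the difference between element-wise and channel-wise dropout concrete, here is a minimal plain-Python sketch of the channel-wise idea. It is not the PyTorch implementation: the function name `channel_dropout` and the nested-list layout (`batch[i][j]` is the 2D feature map of channel `j` in sample `i`) are assumptions made for illustration, as is the 1/(1 - p) rescaling of kept channels.

```python
import random


def channel_dropout(batch, p=0.5, training=True, rng=random):
    """Zero out whole 2D feature maps with probability p (illustrative sketch).

    batch[i][j] is a list of rows holding channel j of sample i. One random
    draw is made per (sample, channel) pair, so a channel is either kept
    entirely (and rescaled by 1 / (1 - p)) or zeroed entirely.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # Inference mode: return an unmodified deep copy.
        return [[[row[:] for row in ch] for ch in sample] for sample in batch]
    scale = 1.0 / (1.0 - p)
    out = []
    for sample in batch:
        new_sample = []
        for channel in sample:
            # A single decision covers every element of this feature map.
            factor = scale if rng.random() >= p else 0.0
            new_sample.append([[v * factor for v in row] for row in channel])
        out.append(new_sample)
    return out


# Usage: 2 samples, 3 channels each, every channel a 2x2 map of ones.
batch = [[[[1.0, 1.0], [1.0, 1.0]] for _ in range(3)] for _ in range(2)]
dropped = channel_dropout(batch, p=0.5, rng=random.Random(0))
for i, sample in enumerate(dropped):
    for j, channel in enumerate(sample):
        print(i, j, channel)
```

Dropping whole feature maps is typically motivated by the strong correlation between neighbouring values in a convolutional feature map: zeroing isolated elements removes little information when adjacent values carry nearly the same signal.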

Multi-Sample Dropout in Keras

Dropout is an efficient regularization instrument for avoiding overfitting of deep neural networks. It works very simply, by randomly discarding a portion of neurons during training; as a result, a…

📚 Read more at Towards Data Science

AlphaDropout

Applies Alpha Dropout over the input. Alpha Dropout is a type of Dropout that maintains the self-normalizing property. For an input with zero mean and unit standard deviation, the output of Alpha Drop...

📚 Read more at PyTorch documentation

Dropout in Neural Networks

Dropout layers have been the go-to method to reduce the overfitting of neural networks. It is the underworld king of regularisation in the modern era of deep learning. In this era of deep learning, a...

📚 Read more at Towards Data Science

Dropout

Let’s think briefly about what we expect from a good predictive model. We want it to perform well on unseen data. Classical generalization theory suggests that to close the gap between train and test p...

📚 Read more at Dive into Deep Learning Book

torch.nn.functional.dropout

During training, randomly zeroes some of the elements of the input tensor with probability p using samples from a Bernoulli distribution. See Dropout for details. p (float) – probability of an eleme...

📚 Read more at PyTorch documentation

Unveiling the Dropout Layer: An Essential Tool for Enhancing Neural Networks

The dropout layer is a layer used in the construction of neural networks to prevent overfitting. In this process, individual nodes are excluded in various training runs using a probability, as if…

📚 Read more at Towards Data Science

FeatureAlphaDropout

Randomly masks out entire channels (a channel is a feature map, e.g. the $j$-th channel of the $i$-th sample in the batch input is a tensor $\text{input}[i, j]$) o...

📚 Read more at PyTorch documentation