Data Science & Developer Roadmaps with Chat & Free Learning Resources

Adversarial Training

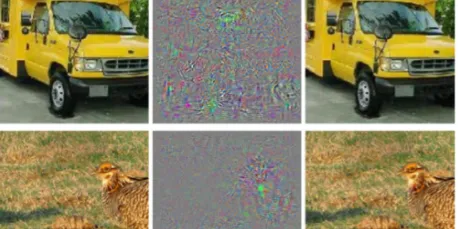

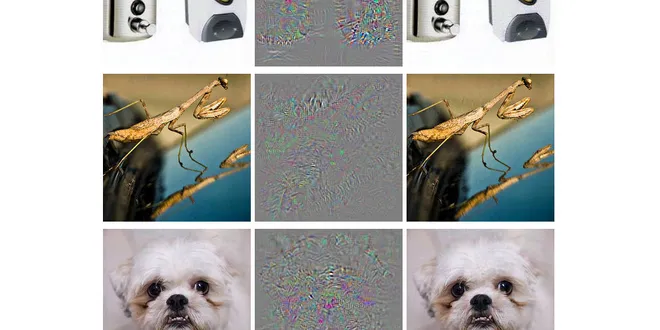

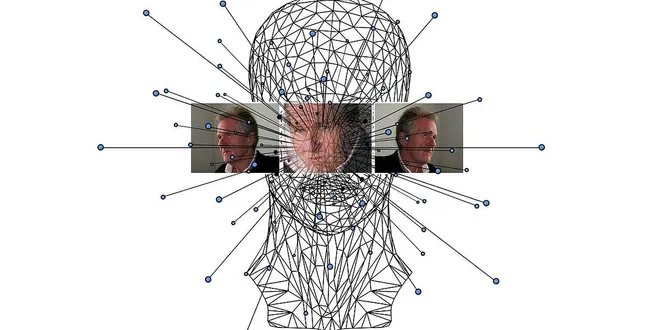

Adversarial training is a machine learning technique for making models more robust to adversarial examples: inputs intentionally designed to deceive the model into making incorrect predictions. The method incorporates adversarial examples into the training process so that the model learns from these deceptive inputs. There are two primary approaches: retraining the model on previously identified adversarial examples, or integrating perturbations directly into the training data. Either way, the aim is to improve the model's resilience and its generalization to perturbed inputs, making it less susceptible to various types of adversarial attacks.
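The second approach can be sketched in a few lines. What follows is a minimal illustration, not a reference implementation: it assumes a logistic-regression model, a synthetic two-class dataset, and FGSM-style perturbations bounded by `eps`. All function names (`fgsm`, `train`, `accuracy_under_attack`, `make_blobs`) and hyperparameter values are hypothetical choices made for this example.

```python
import math
import random


def sigmoid(z):
    # Clamp the logit so math.exp cannot overflow.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    # For cross-entropy loss on this model, dLoss/dx_i = (p - y) * w_i.
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=30, lr=0.1, seed=0):
    """Plain SGD; when eps > 0, also train on a perturbed copy of each example."""
    rng = random.Random(seed)
    data = list(data)  # copy so the caller's list is not reordered
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            examples = [x]
            if eps > 0:
                # The perturbation is crafted against the current weights.
                examples.append(fgsm(w, b, x, y, eps))
            for xe in examples:
                err = predict(w, b, xe) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xe)]
                b -= lr * err
    return w, b


def accuracy_under_attack(w, b, data, eps):
    """Accuracy after perturbing every input with FGSM (eps=0 means clean)."""
    hits = 0
    for x, y in data:
        x_adv = fgsm(w, b, x, y, eps)
        hits += int((predict(w, b, x_adv) >= 0.5) == (y == 1))
    return hits / len(data)


def make_blobs(n, seed=0):
    """Synthetic data (an assumption of this sketch): two Gaussian clusters."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        center = 1.0 if y == 1 else -1.0
        data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], y))
    return data


train_set = make_blobs(200, seed=1)
test_set = make_blobs(200, seed=2)
for name, train_eps in [("standard", 0.0), ("adversarial", 0.3)]:
    w, b = train(train_set, eps=train_eps)
    clean = accuracy_under_attack(w, b, test_set, 0.0)
    attacked = accuracy_under_attack(w, b, test_set, 0.3)
    print(f"{name}: clean={clean:.3f} attacked={attacked:.3f}")
```

The loop prints clean and attacked accuracy for a standard model and an adversarially trained one. On real tasks the size of any robustness gain, and any cost in clean accuracy, depends on the model, the data, and the choice of `eps`.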

Everything you need to know about Adversarial Training in NLP

Adversarial training is a fairly recent but very exciting field in Machine Learning. Since Adversarial Examples were first introduced by Christian Szegedy[1] back in 2013, they have brought to light…

📚 Read more at Analytics Vidhya

Adversarial Examples

An adversarial example is an instance with small, intentional feature perturbations that cause a machine learning model to make a false prediction. I recommend reading the chapter about Counterfactual...

📚 Read more at Christophm Interpretable Machine Learning Book

Adversarial Examples — Rethinking the Definition

Adversarial examples are a large obstacle for a variety of machine learning systems to overcome. Their existence shows the tendency of models to rely on unreliable features to maximize performance…

📚 Read more at Towards Data Science

Adversarial Attacks in Textual Deep Neural Networks

Adversarial examples aim to cause the target model to make a prediction mistake. They may degrade a model's performance either intentionally or unintentionally. For example, we may have a typo when…

📚 Read more at Towards AI

A Practical Guide To Adversarial Robustness

Machine learning models have been shown to be vulnerable to adversarial attacks, which consist of perturbations added to inputs at test time, designed to fool the model, that are often…

📚 Read more at Towards Data Science

Adversarial Machine Learning: A Deep Dive

This morning, I suddenly wondered: if we are using Machine Learning models at such a huge scale, how are the vulnerabilities in the models themselves checked? A little bit of searching and I found th...

📚 Read more at Towards AI

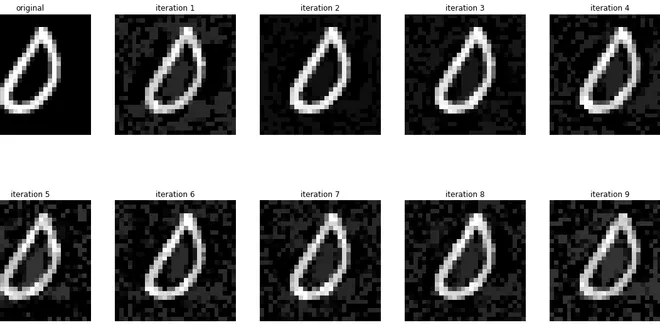

Does Iterative Adversarial Training Repel White-box Adversarial Attack

A quantitative and qualitative exploration of how well it guards against white-box generation of adversarial examples. Machine learning is prone to adversarial examples: targeted input data that are…

📚 Read more at Level Up Coding

FreeLB: A Generic Adversarial Training method for Text

In 2013, Szegedy et al. published “Intriguing properties of neural networks”. One of the big takeaways of this paper is that models can be fooled by adversarial examples. These are examples that…

📚 Read more at Towards Data Science

Adversarial Machine Learning

Deploying machine learning in real systems requires robustness and reliability. Although many notions of robustness and reliability exist, the topic of adversarial robustness is of…

📚 Read more at Analytics Vidhya

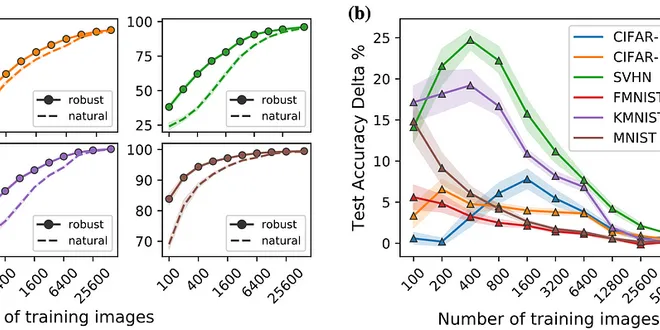

Adversarially-Trained Deep Nets Transfer Better

We discovered a novel way to use adversarially-trained deep neural networks (DNNs) in the context of transfer learning to quickly achieve higher accuracy on image classification tasks — even when only...

📚 Read more at Towards AI

Creating Adversarial Examples for Neural Networks with JAX

First, let’s see some definitions. What are adversarial examples? Simply put, adversarial examples are inputs to a neural network that are optimized to fool the algorithm, i.e., result in…

📚 Read more at Towards Data Science

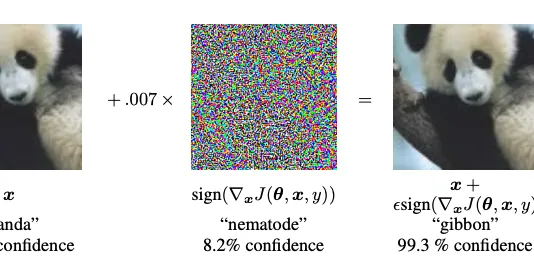

Adversarial example using FGSM

This tutorial creates an adversarial example using the Fast Gradient Sign Method (FGSM) attack as described in Explaining and Harnessing Adversarial Examples by Goodfellow et al. This was one of th...

📚 Read more at TensorFlow Tutorials
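
For reference, the FGSM attack from Goodfellow et al. amounts to a single gradient-sign step on the input. The symbols below follow the paper's usual notation and do not appear in the excerpt above: $x$ is the original input, $y$ its true label, $\theta$ the model parameters, $J$ the training loss, and $\epsilon$ a small constant that sets the size of the perturbation.

$$
x_{\text{adv}} = x + \epsilon \cdot \operatorname{sign}\left(\nabla_x J(\theta, x, y)\right)
$$

Because every coordinate moves by at most $\epsilon$, the perturbation satisfies $\lVert x_{\text{adv}} - x \rVert_\infty \le \epsilon$, which is why a small $\epsilon$ can leave an image looking unchanged to a human while still changing the model's prediction.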