Data Science & Developer Roadmaps with Chat & Free Learning Resources

Bagging

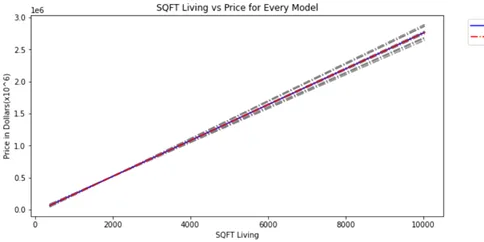

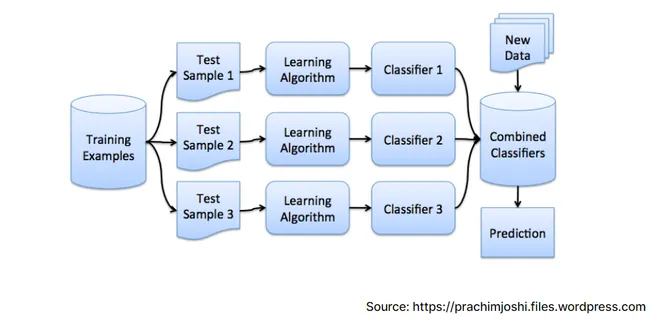

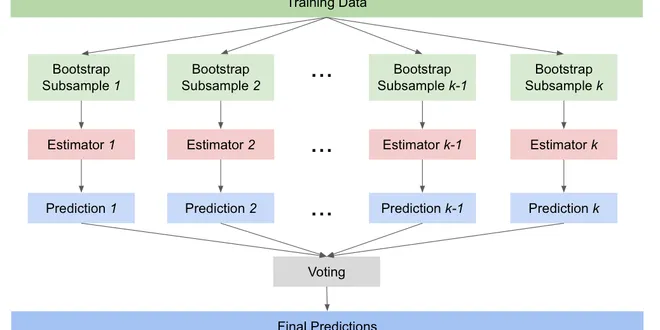

Bagging, short for Bootstrap Aggregating, is an ensemble learning technique that improves the performance of machine learning models, particularly high-variance models such as decision trees. The core idea is to train multiple models on different versions of the training data, generated through a process called bootstrapping: sampling the original dataset with replacement to create several bootstrap samples, typically each the same size as the original. The models' predictions are then aggregated, by averaging for regression or by majority vote for classification. Because averaging reduces variance, bagging reduces overfitting and improves the accuracy and robustness of the final model. It is widely used for both classification and regression tasks.
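To make the bootstrap-then-aggregate idea concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a reference implementation: the helper names (`bootstrap_sample`, `fit_nearest_neighbor`, `fit_bagged`), the synthetic data (y = 2x plus noise), and the choice of a 1-nearest-neighbor regressor as the high-variance base learner are all made up for this example.

```python
import random
import statistics


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def fit_nearest_neighbor(sample):
    """Return a 1-nearest-neighbor regressor (a high-variance base learner)."""
    def predict(x):
        # Pick the training point whose x is closest to the query.
        _, nearest_y = min(sample, key=lambda point: abs(point[0] - x))
        return nearest_y
    return predict


def fit_bagged(data, n_models, rng):
    """Fit one base learner per bootstrap sample and average their predictions."""
    models = [fit_nearest_neighbor(bootstrap_sample(data, rng))
              for _ in range(n_models)]

    def predict(x):
        return statistics.fmean([model(x) for model in models])
    return predict


def mean_squared_error(predict, xs, truth):
    """Mean squared error of predict against the noise-free target."""
    return statistics.fmean([(predict(x) - truth(x)) ** 2 for x in xs])


def truth(x):
    # Hypothetical noise-free target, assumed for this example only.
    return 2.0 * x


rng = random.Random(0)
# Noisy training data: y = 2x plus Gaussian noise.
train = [(i / 50, truth(i / 50) + rng.gauss(0.0, 0.5)) for i in range(50)]
# Test points lie between the training x values.
test_xs = [(i + 0.5) / 50 for i in range(49)]

single = fit_nearest_neighbor(train)
bagged = fit_bagged(train, n_models=50, rng=rng)

print("single model MSE:", mean_squared_error(single, test_xs, truth))
print("bagged model MSE:", mean_squared_error(bagged, test_xs, truth))
```

Each bootstrap sample omits some training points and repeats others, so the individual models disagree. Averaging them smooths out much of the noise that a single 1-nearest-neighbor model would copy directly, so on data like this the bagged error will usually, though not always, be the lower of the two. For classification, the averaging step would be replaced by a majority vote.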

Bagging on Low Variance Models

Bagging (also known as bootstrap aggregation) is a technique in which we take multiple samples repeatedly with replacement according to uniform probability distribution and fit a model on it. It…

📚 Read more at Towards Data Science

Bagging, Boosting, and Gradient Boosting

Bagging is the aggregation of machine learning models trained on bootstrap samples (Bootstrap AGGregatING). What are bootstrap samples? These are almost independent and identically distributed (iid)…

📚 Read more at Towards Data Science

Why Bagging Works

In this post I deep dive on bagging or bootstrap aggregating. The focus is on building intuition for the underlying mechanics so that you better understand why this technique is so powerful. Bagging…

📚 Read more at Towards Data Science

Simplified Approach to understand Bagging (Bootstrap Aggregation) and implementation without…

One of the first ensemble methods is called Bagging, which is mostly used for regression problems in machine learning. The name ‘Bagging’ is a combination of two words, i.e. Bootstrap and…

📚 Read more at Analytics Vidhya

Ensemble Learning — Bagging and Boosting

Bagging and Boosting are similar in that they are both ensemble techniques, where a set of weak learners are combined to create a strong learner that obtains better performance than a single one…

📚 Read more at Becoming Human: Artificial Intelligence Magazine

Develop a Bagging Ensemble with Different Data Transformations

Last Updated on April 27, 2021. Bootstrap aggregation, or bagging, is an ensemble where each model is trained on a different sample of the training dataset. The idea of bagging can be generalized to ot...

📚 Read more at Machine Learning Mastery

Bagging and Random Forest for Imbalanced Classification

Last Updated on January 5, 2021. Bagging is an ensemble algorithm that fits multiple models on different subsets of a training dataset, then combines the predictions from all models. Random forest is a...

📚 Read more at Machine Learning Mastery

Bagging v Boosting : The H2O Package

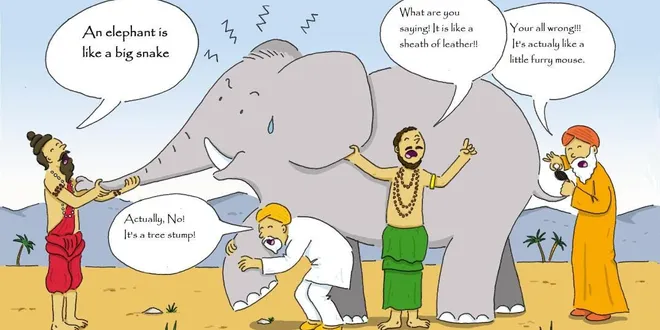

Before we dive deep into the complexities of Bagging and Boosting, we need to question the need for such complicated processes. Earlier, we’ve seen how the Decision Tree algorithm works and how easily…

📚 Read more at Analytics Vidhya

Ensemble Methods Explained in Plain English: Bagging

In this article, I will go over a popular homogeneous model ensemble method: bagging. Homogeneous ensembles combine a large number of base estimators or weak learners of the same algorithm. The…

📚 Read more at Towards AI

How to Develop a Bagging Ensemble with Python

Last Updated on April 27, 2021. Bagging is an ensemble machine learning algorithm that combines the predictions from many decision trees. It is also easy to implement given that it has few key hyperpar...

📚 Read more at Machine Learning Mastery

Ensemble Models: Baggings vs. Boosting

What’s the difference between bagging and boosting? Bagging and Boosting are two of the most common ensemble techniques. Boosting models can perform better than bagging models if the hyperparameters…

📚 Read more at Analytics Vidhya

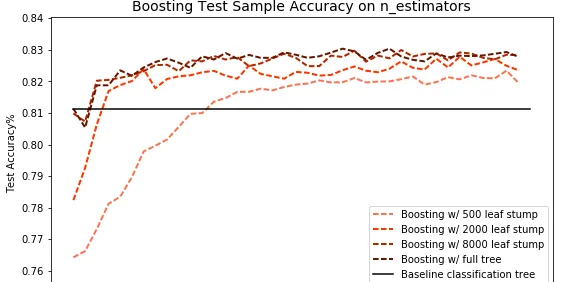

Using Bagging and Boosting to Improve Classification Tree Accuracy

Bagging and boosting are two techniques that can be used to improve the accuracy of Classification & Regression Trees (CART). In this post, I’ll start with my single 90+ point wine classification…

📚 Read more at Towards Data Science