Data Science & Developer Roadmaps with Chat & Free Learning Resources

Learning-Rate-Schedule

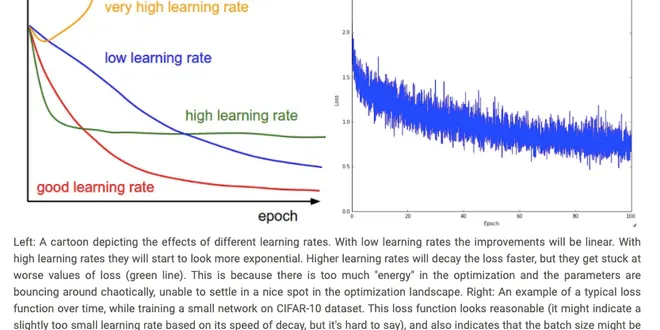

A learning rate schedule is a technique for training machine learning and deep learning models that adjusts the learning rate over the course of training. The learning rate determines how large a step the model takes when it updates its parameters from the gradients computed during training. If it is set too high, the model may overshoot optimal values; if it is set too low, training can converge slowly or get stuck in suboptimal solutions. With a schedule, practitioners can adjust the learning rate dynamically, which can improve model performance and training efficiency. Common strategies include warmup and cosine schedules.
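As a rough illustration of the warmup and cosine strategies mentioned above, here is a minimal sketch in plain Python. The function name `warmup_cosine_lr` and all default values (`base_lr`, `warmup_steps`, `min_lr`) are assumptions chosen for illustration, not taken from any of the articles listed here.

```python
import math


def warmup_cosine_lr(step, total_steps, base_lr=0.1, warmup_steps=100, min_lr=0.0):
    """Return the learning rate for a given step (hypothetical helper).

    Phase 1: linear warmup from base_lr / warmup_steps up to base_lr.
    Phase 2: cosine decay from base_lr down to min_lr.
    """
    if step < warmup_steps:
        # Linear ramp: reaches base_lr on the last warmup step.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    # Half-cosine curve between base_lr and min_lr.
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

Calling the function once per optimizer step and assigning the result to the optimizer's learning rate is one way to use it; deep learning frameworks also ship their own scheduler classes that serve the same purpose.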

Learning Rate Schedulers

In my previous Medium article, I talked about the crucial role that the learning rate plays in training Machine Learning and Deep Learning models. In the article, I l...

📚 Read more at Towards AI

Learning Rate Scheduling

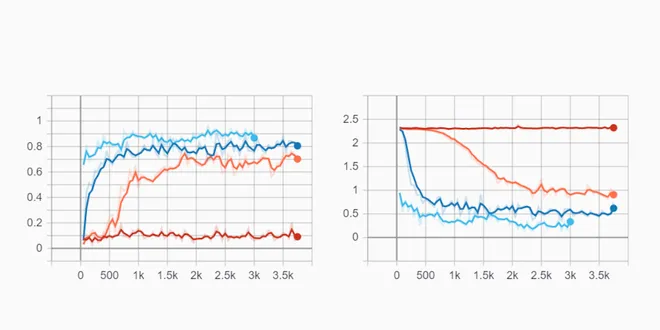

So far we primarily focused on optimization algorithms for how to update the weight vectors rather than on the rate at which they are being updated. Nonetheless, adjusting the learning rate is often j...

📚 Read more at Dive into Deep Learning Book

Using Learning Rate Schedule in PyTorch Training

Last Updated on April 8, 2023. Training a neural network or large deep learning model is a difficult optimization task. The classical algorithm to train neural networks is called stochastic gradient de...

📚 Read more at MachineLearningMastery.com

The Best Learning Rate Schedules

Practical and powerful tips for setting the learning rate.

📚 Read more at Towards Data Science

Cyclical Learning Rates

Learning rate influences the training time and model efficiency. Learning rate depends on the loss function landscape, which depends on the model architecture and dataset. To converge the model…

📚 Read more at Analytics Vidhya
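For readers who want a concrete picture of a cyclical learning rate, the following is a minimal sketch of the triangular policy usually associated with Leslie Smith's work. It is an illustration under stated assumptions, not code from the linked article: the function name `triangular_clr` and the default values for `base_lr`, `max_lr`, and `step_size` are hypothetical.

```python
import math


def triangular_clr(iteration, base_lr=0.001, max_lr=0.006, step_size=2000):
    """Triangular cyclical learning rate (hypothetical helper).

    The rate climbs linearly from base_lr to max_lr over step_size
    iterations, then falls back to base_lr over the next step_size.
    """
    # Index of the current cycle, starting at 1.
    cycle = math.floor(1 + iteration / (2 * step_size))
    # Distance from the peak of the current cycle, in [0, 1].
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)
```

With the defaults above, the rate starts at `base_lr`, peaks at `max_lr` on iteration 2000, and returns to `base_lr` on iteration 4000, after which the cycle repeats.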

Using Learning Rate Schedules for Deep Learning Models in Python with Keras

Last Updated on August 6, 2022. Training a neural network or large deep learning model is a difficult optimization task. The classical algorithm to train neural networks is called stochastic gradient d...

📚 Read more at Machine Learning Mastery

The Learning Rate Finder

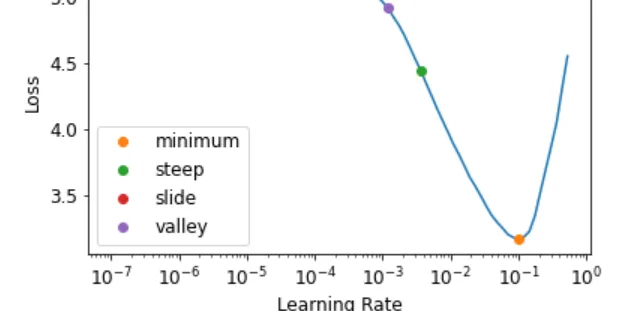

Learning rate is a very important hyper-parameter as it controls the rate or speed at which the model learns. How do we find a perfect learning rate that is neither too high nor too low? Leslie Smith…

📚 Read more at Analytics Vidhya
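One common way to search for a workable learning rate is a range test: sweep the rate exponentially from a very small value to a large one, take one update per value, and record the loss. The sketch below shows the idea on a toy one-dimensional problem. It is an assumption-laden illustration rather than the linked article's method; `lr_sweep`, `range_test`, and the toy loss `w * w` are all hypothetical names and choices.

```python
def lr_sweep(lr_min=1e-5, lr_max=1.0, num_steps=100):
    """Exponentially spaced learning rates from lr_min to lr_max."""
    ratio = lr_max / lr_min
    return [lr_min * ratio ** (i / (num_steps - 1)) for i in range(num_steps)]


def range_test(loss_fn, grad_fn, w0, lrs):
    """Take one gradient step per learning rate and record (lr, loss) pairs."""
    w = w0
    history = []
    for lr in lrs:
        # Record the loss before the update made with this rate.
        history.append((lr, loss_fn(w)))
        w = w - lr * grad_fn(w)
    return history


# Toy problem (assumption): loss(w) = w^2, so grad(w) = 2w.
lrs = lr_sweep(lr_min=1e-4, lr_max=10.0, num_steps=50)
history = range_test(lambda w: w * w, lambda w: 2.0 * w, w0=1.0, lrs=lrs)
```

Plotting the recorded loss against the learning rate on a log axis typically shows a region where the loss falls quickly before it blows up; a rate somewhat below the blow-up point is a common starting choice.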

Adaptive - and Cyclical Learning Rates using PyTorch

The Learning Rate (LR) is one of the key parameters to tune in your neural net. SGD optimizers with adaptive learning rates have been popular for quite some time now: Adam, Adamax and its older…

📚 Read more at Towards Data Science

How to decide on learning rate

Among all the hyper-parameters used in machine learning algorithms, the learning rate is probably the very first one you learn about. Most likely it is also the first one that you start playing with…

📚 Read more at Towards Data Science

Why Using Learning Rate Schedulers In NNs May Be a Waste of Time

Hint: Batch size is the key, and it might not be what you think! TL;DR: instead of decreasing th...

📚 Read more at Towards Data Science

Frequently asked questions on Learning Rate

This article aims to address common questions about the learning rate, also known as step size, that my students frequently ask. So, I find it useful to gather them in the form of questions…

📚 Read more at Towards Data Science

Learning Rate Schedule in Practice: an example with Keras and TensorFlow 2.0

One of the painful things about training a neural network is the sheer number of hyperparameters we have to deal with. Among them, the most important parameter is the learning rate. If…

📚 Read more at Towards Data Science