Data Science & Developer Roadmaps with Chat & Free Learning Resources

apache-spark

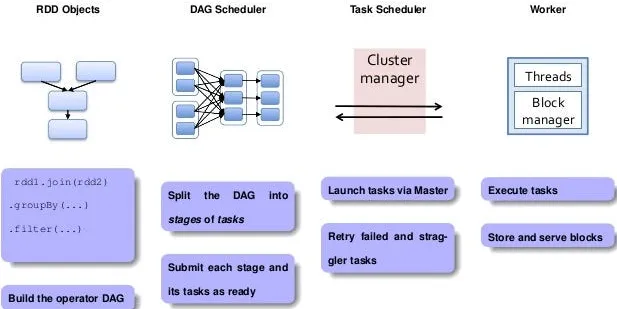

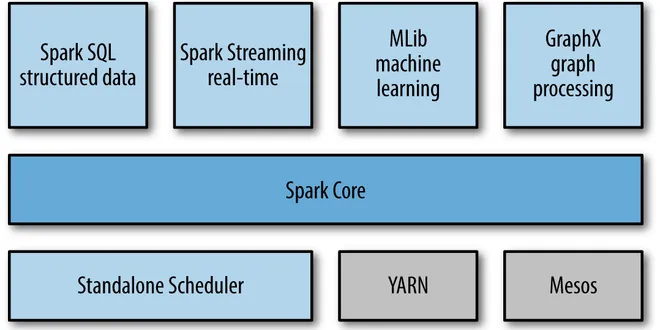

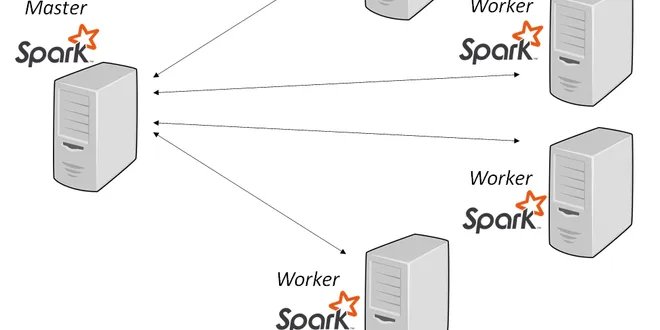

Apache Spark is an open-source, distributed computing framework designed for large-scale data processing. It handles very large datasets by splitting the work into tasks and distributing them across multiple computers, which makes it well suited to big data applications and machine learning. Spark supports several processing styles, including batch, real-time stream, and interactive processing, so developers can analyze and transform data with a single engine. Its high-level APIs (available in Scala, Java, Python, and R) hide much of the complexity of distributed computing, and because Spark can keep intermediate data in memory, it often processes data considerably faster than disk-based approaches such as Hadoop MapReduce. Originally developed at UC Berkeley's AMPLab, Spark has become a cornerstone of the big data ecosystem and is widely adopted by leading tech companies.
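To make the "split into tasks, distribute, then combine" idea concrete, here is a minimal pure-Python sketch of the partition → map → reduce pattern that Spark jobs follow. It does not use Spark's API: the `partitions` data and the function names are hypothetical, and a thread pool on one machine stands in for executors on a cluster. In PySpark the same job would typically be written with `flatMap`, `map`, and `reduceByKey` on an RDD.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical input: each inner list stands in for one partition of a dataset
# that Spark would spread across executors on different machines.
partitions = [
    ["spark is fast", "spark is distributed"],
    ["mapreduce writes to disk", "spark keeps data in memory"],
]


def map_partition(lines):
    """Map phase: count words locally within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def word_count(parts):
    """Run the map phase on every partition in parallel, then merge (reduce) the partial counts."""
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(map_partition, parts))
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


result = word_count(partitions)
print(result["spark"])  # prints 3
```

Counting locally before merging mirrors why `reduceByKey` is usually preferred over grouping raw records: each partition sends only its small partial result to the merge step.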

Apache Spark — Fast and Furious.

Apache Spark is a data processing framework that can quickly perform processing tasks on very large data sets, and can also distribute data processing tasks across multiple computers, either on its…

📚 Read more at Analytics Vidhya

Apache Spark Primer

Apache Spark is an open-source, fast, distributed cluster-computing framework for large-scale data processing. Spark is an execution engine that runs not only on Hadoop YARN but also on Apache Mesos…

📚 Read more at Analytics Vidhya

Apache Spark: A Conceptual Orientation

Apache Spark, once part of the Hadoop ecosystem, is a powerful open-source, general-purpose distributed data-processing engine that provides real-time stream processing, interactive processing, graph…

📚 Read more at Towards Data Science

Apache Spark Performance Boosting

Apache Spark is a widely used distributed data processing platform, specialized for big data applications. It has become the de facto standard for processing big data. By its distributed and…

📚 Read more at Towards Data Science
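A recurring theme in Spark performance tuning is how evenly data is spread across partitions, because one oversized partition can leave a single task running long after the others finish. The sketch below is a pure-Python illustration of hash partitioning and data skew, not Spark code: `partition_for`, `hash_partition`, `skew_ratio`, and the sample records are all hypothetical, and `zlib.crc32` is used only because it is deterministic across runs (Spark uses its own hash functions).

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Choose a partition for a key: a stable hash modulo the partition count."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def hash_partition(records, num_partitions):
    """Group (key, value) records into partitions, as a shuffle would."""
    parts = defaultdict(list)
    for key, value in records:
        parts[partition_for(key, num_partitions)].append((key, value))
    return [parts[i] for i in range(num_partitions)]


def skew_ratio(parts):
    """Largest partition size divided by the mean size (1.0 means perfectly even)."""
    sizes = [len(p) for p in parts]
    mean = sum(sizes) / len(sizes)
    return max(sizes) / mean if mean else 0.0


# Hypothetical, deliberately skewed data: one "hot" key accounts for 90 of 100 records.
records = [("user_1", i) for i in range(90)] + [(f"user_{i}", i) for i in range(2, 12)]
parts = hash_partition(records, num_partitions=4)
print([len(p) for p in parts])
print(round(skew_ratio(parts), 2))
```

Because every record with the same key lands in the same partition, adding partitions does not fix this kind of skew on its own; the hot key still ends up in a single task.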

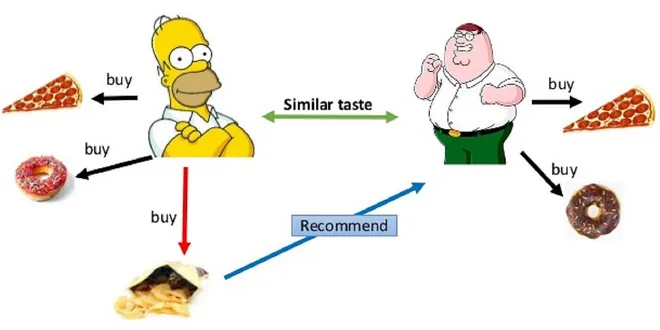

Introduction to Apache Spark and Implicit Collaborative Filtering in PySpark

Apache Spark is an open-source, fast, distributed computing framework. It provides APIs for programming clusters of machines with parallelism and fault tolerance [3]. It was originally developed at UC…

📚 Read more at Analytics Vidhya
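Implicit collaborative filtering works with signals such as clicks or play counts instead of explicit ratings. In the formulation by Hu, Koren, and Volinsky, which Spark's ALS follows when `implicitPrefs=True` is set on `pyspark.ml.recommendation.ALS`, each raw count r becomes a binary preference p = 1 if r > 0 (else 0) and a confidence c = 1 + alpha * r. The pure-Python sketch below shows only that transformation and the resulting confidence-weighted error; it does not run ALS itself, and the `interactions` data and helper names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical implicit-feedback data: (user, item) -> number of plays/clicks.
interactions: Dict[Tuple[str, str], float] = {
    ("alice", "song_a"): 5.0,
    ("alice", "song_b"): 0.0,
    ("bob", "song_a"): 1.0,
}


def preference(r: float) -> int:
    """Binary preference: 1 if the user interacted with the item at all."""
    return 1 if r > 0 else 0


def confidence(r: float, alpha: float = 1.0) -> float:
    """Confidence in that preference grows linearly with the amount of interaction."""
    return 1.0 + alpha * r


def weighted_loss(pred: Dict[Tuple[str, str], float], alpha: float = 1.0) -> float:
    """Confidence-weighted squared error between predictions and preferences.

    The full objective sums over all user-item pairs (unobserved pairs count as r = 0)
    and adds a regularization term; both are simplified away here.
    """
    return sum(
        confidence(r, alpha) * (preference(r) - pred[key]) ** 2
        for key, r in interactions.items()
    )


print(weighted_loss({key: 0.5 for key in interactions}))  # prints 2.25
```

Heavily used items (alice's five plays of `song_a`) contribute most to the loss, so the factorization works hardest to predict them correctly; `alpha` controls how strongly counts translate into confidence.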

A Beginner’s Guide to Apache Spark and Python

Apache Spark is an open source framework that has been making waves since its inception at UC Berkeley’s AMPLab in 2009; at its core it is a big data distributed processing engine that can scale at…

📚 Read more at Better Programming

Basics of Apache Spark Configuration Settings

Apache Spark is one of the most popular open-source distributed computing platforms for in-memory batch and stream processing. Though it promises to process millions of records very fast in a…

📚 Read more at Towards Data Science
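Spark configuration settings are key/value pairs such as `spark.executor.memory`, `spark.executor.cores`, and `spark.sql.shuffle.partitions` (whose default is 200). They can be placed in `spark-defaults.conf`, set with `SparkSession.builder.config(key, value)`, or passed to `spark-submit` as `--conf key=value`. The small sketch below builds that last form from a Python dict; the values and the `app.py` script name are illustrative assumptions, not tuning recommendations.

```python
from typing import Dict, List

# Illustrative values only; appropriate settings depend on the cluster and the workload.
settings: Dict[str, str] = {
    "spark.executor.memory": "4g",
    "spark.executor.cores": "4",
    "spark.sql.shuffle.partitions": "200",
}


def to_submit_args(conf: Dict[str, str]) -> List[str]:
    """Turn a settings dict into `--conf key=value` pairs for spark-submit, sorted by key."""
    args: List[str] = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


# "app.py" is a hypothetical application script.
print(" ".join(["spark-submit", *to_submit_args(settings), "app.py"]))
```

Keeping settings in one dict makes it easy to version them alongside the job and to reuse the same values in `SparkSession.builder.config` calls.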

Beginners Guide to Apache Pyspark

Apache Spark is an open-source analytics engine and cluster-computing framework that boosts your data processing performance. As its developers put it, Spark is a lightning-fast unified analytics engine. Spark…

📚 Read more at Towards Data Science

Creating an Apache Spark Standalone Cluster on Windows

Apache Spark is a powerful, fast, and cost-efficient tool for Big Data problems, with components such as Spark Streaming, Spark SQL, and Spark MLlib. This makes Spark something like the Swiss Army knife of…

📚 Read more at Analytics Vidhya

Apache Spark: Optimization Techniques

Apache Spark is a well-known big data processing engine on the market today. It supports many use cases, from real-time processing (Spark Streaming) to graph processing (GraphX). As an…

📚 Read more at Analytics Vidhya
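One optimization often discussed in this context is the broadcast join: when one side of a join is small, Spark can copy it to every executor and join each partition of the large table locally, avoiding a shuffle of the large table. In PySpark this can be requested with the `pyspark.sql.functions.broadcast` hint, and Spark applies it automatically below `spark.sql.autoBroadcastJoinThreshold`. The pure-Python sketch below only illustrates the idea; the tables and helper names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical data: a large table of (country_id, order_id) split into partitions...
orders_partitions: List[List[Tuple[int, str]]] = [
    [(1, "order_a"), (2, "order_b")],
    [(1, "order_c"), (3, "order_d")],
]
# ...and a small lookup table, cheap enough to copy to every worker.
countries: Dict[int, str] = {1: "DE", 2: "FR"}


def join_partition(partition, lookup):
    """Inner-join one partition against the broadcast lookup table, entirely locally.

    No records of the large table move between partitions, so no shuffle is needed.
    """
    return [(order_id, cid, lookup[cid]) for cid, order_id in partition if cid in lookup]


def broadcast_join(partitions, lookup):
    """Join every partition; on a cluster each call would run as a separate task."""
    result = []
    for part in partitions:
        result.extend(join_partition(part, lookup))
    return result


print(broadcast_join(orders_partitions, countries))
```

The trade-off is memory: every executor must hold a full copy of the small table, which is why Spark only broadcasts automatically below a size threshold.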

Big Data? Meet Apache Spark!

Learn how to use Apache Spark with Python and PySpark code examples. Apache Spark is a powerful and very popular open-source framework for distributed computing, designed…

📚 Read more at Python in Plain English

Machine Learning in Apache Spark for Beginners — Healthcare Data Analysis

Apache Spark is a cluster computing framework designed for fast and efficient computation. It can handle millions of data points with a relatively low amount of computing power. Apache Spark is built…

📚 Read more at Towards Data Science