Data Science & Developer Roadmaps with Chat & Free Learning Resources

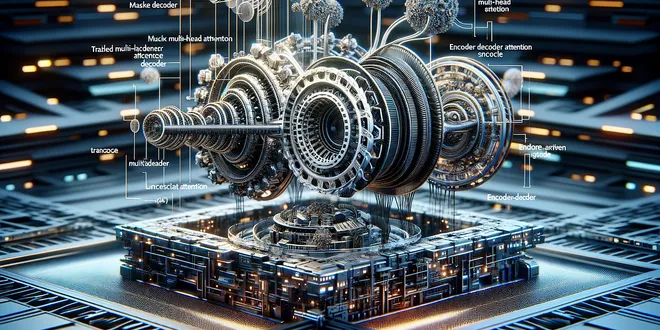

decoder

A decoder is a crucial component of many machine learning models, particularly sequence-to-sequence (seq2seq) architectures. It transforms encoded information back into a sequence of outputs. It usually generates one token at a time and feeds each prediction back in as input for the next step. In classic recurrent seq2seq models, the encoded information is a single fixed-length context vector; transformer decoders instead use cross-attention over the full sequence of encoder outputs. This process is essential in applications such as machine translation, where the decoder generates words in the target language based on the encoded source-language input. Decoders can be built from recurrent neural networks (RNNs) or transformers. Because generation continues until an end-of-sequence token is produced, the output length does not have to match the input length.
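The generation step can be illustrated with a minimal pure-Python sketch of greedy, autoregressive decoding. At each step the decoder scores every vocabulary item and the highest-scoring token is appended. Decoding stops at an end-of-sequence token or a length limit. All names here (`greedy_decode`, `toy_scores`, `BOS`, `EOS`) are hypothetical. `toy_scores` is a hard-coded stand-in for a trained decoder and, unlike a real one, ignores the encoded context.

```python
from typing import Callable, List, Sequence

BOS, EOS = 0, 1  # hypothetical special-token ids


def greedy_decode(
    context: Sequence[float],
    next_token_scores: Callable[[Sequence[float], List[int]], List[float]],
    max_len: int = 10,
) -> List[int]:
    """Generate tokens one at a time, feeding each prediction back in."""
    tokens = [BOS]
    for _ in range(max_len):
        scores = next_token_scores(context, tokens)
        # Greedy choice: take the highest-scoring token id.
        next_id = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_id)
        if next_id == EOS:
            break
    return tokens[1:]  # drop the BOS marker


def toy_scores(context: Sequence[float], prefix: List[int]) -> List[float]:
    """Hypothetical stand-in for a trained decoder over a 5-token vocabulary.

    It always emits tokens 2, 3, 4 and then EOS; a real decoder would
    condition these scores on the encoder's context as well as the prefix.
    """
    vocab_size = 5
    step = len(prefix) - 1  # number of tokens generated so far
    target = [2, 3, 4, EOS][min(step, 3)]
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(greedy_decode([0.1, -0.3, 0.7], toy_scores))  # [2, 3, 4, 1]
```

Greedy decoding is the simplest strategy. Beam search and sampling-based methods replace the single `max` choice with something less myopic.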

TransformerDecoder

TransformerDecoder is a stack of N decoder layers. Parameters: decoder_layer – an instance of the TransformerDecoderLayer() class (required); num_layers – the number of sub-decoder-layers in the decoder (required)...

📚 Read more at PyTorch documentation
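Below is a minimal PyTorch sketch of the usage described above. It assumes PyTorch 1.9 or later, for `batch_first`. The sizes are arbitrary, and `memory` is random data standing in for encoder output. The causal mask is built by hand with `torch.triu`, which matches the mask that `nn.Transformer.generate_square_subsequent_mask` produces in current releases.

```python
import torch
import torch.nn as nn

d_model, nhead, num_layers = 32, 4, 2
batch, src_len, tgt_len = 3, 7, 5

# One layer acts as a template; TransformerDecoder stacks num_layers independent copies of it.
decoder_layer = nn.TransformerDecoderLayer(
    d_model=d_model, nhead=nhead, dim_feedforward=64, batch_first=True
)
decoder = nn.TransformerDecoder(decoder_layer, num_layers=num_layers)

memory = torch.randn(batch, src_len, d_model)  # stand-in for the encoder output
tgt = torch.randn(batch, tgt_len, d_model)     # stand-in for embedded target tokens

# Causal mask: -inf above the diagonal, so position i attends only to positions <= i.
tgt_mask = torch.triu(torch.full((tgt_len, tgt_len), float("-inf")), diagonal=1)

out = decoder(tgt, memory, tgt_mask=tgt_mask)
print(out.shape)  # torch.Size([3, 5, 32])
```

Without `tgt_mask`, each target position could attend to later positions. During teacher-forced training, that would leak the answer.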

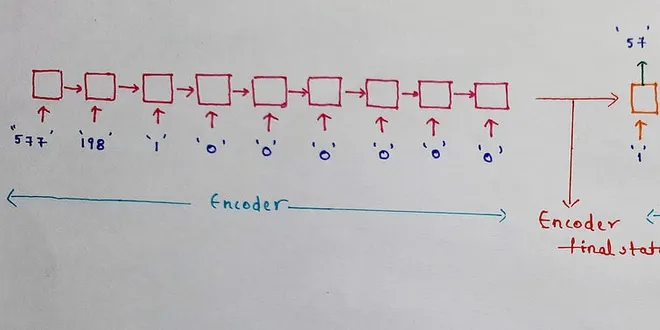

What is an encoder decoder model?

In this post, we introduce the encoder-decoder structure, in some cases known as the Sequence to Sequence (Seq2Seq) model. For a better understanding of the structure of this model, previous knowledge on…

📚 Read more at Towards Data Science

LLMs and Transformers from Scratch: the Decoder

As always, the code is available on our GitHub. One Big While Loop: after describing the inner workings of the encoder in the transformer architecture in our previous article, we shall see the next segme...

📚 Read more at Towards Data Science
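The distinctive ingredient of a decoder's self-attention is the causal (look-ahead) mask. Below is a from-scratch, pure-Python sketch of masked scaled dot-product attention; it is not the linked article's code. It uses a single head with no learned projections, and the function names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention in which query i only sees keys 0..i."""
    d_k = len(q[0])
    out = []
    for i, q_i in enumerate(q):
        # Future positions j > i are never scored, which is equivalent to
        # adding -inf to their scores before the softmax.
        scores = [sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = causal_self_attention(x, x, x)

# Changing the last token leaves the earlier outputs untouched.
x_changed = x[:2] + [[5.0, -5.0]]
y_changed = causal_self_attention(x_changed, x_changed, x_changed)
print(y[:2] == y_changed[:2])  # True
```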

TransformerDecoderLayer

TransformerDecoderLayer is made up of self-attn, multi-head-attn and feedforward network. This standard decoder layer is based on the paper “Attention Is All You Need”. Ashish Vaswani, Noam Shazeer, N...

📚 Read more at PyTorch documentation
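The three sub-blocks named above can be inspected on a PyTorch layer. This sketch relies on the attribute names `self_attn`, `multihead_attn`, `linear1`, and `linear2`. These come from PyTorch's implementation of `TransformerDecoderLayer` rather than from its documented parameter list, so treat them as an assumption that may change between versions.

```python
import torch
import torch.nn as nn

layer = nn.TransformerDecoderLayer(
    d_model=16, nhead=2, dim_feedforward=32, dropout=0.0, batch_first=True
)

# The three sub-blocks, as attributes of the layer (implementation detail, see above):
print(type(layer.self_attn).__name__)       # MultiheadAttention: self-attention over the target
print(type(layer.multihead_attn).__name__)  # MultiheadAttention: cross-attention over encoder memory
print(layer.linear1, layer.linear2)         # the two linear maps of the feedforward network

tgt = torch.randn(2, 4, 16)     # (batch, target length, d_model)
memory = torch.randn(2, 6, 16)  # (batch, source length, d_model)
out = layer(tgt, memory)
print(out.shape)  # torch.Size([2, 4, 16]), the same shape as tgt
```

The layer applies no causal mask unless one is passed as `tgt_mask`.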

Encoder-Decoder Long Short-Term Memory Networks

Last Updated on August 14, 2019. Gentle introduction to the Encoder-Decoder LSTMs for sequence-to-sequence prediction with example Python code. The Encoder-Decoder LSTM is a recurrent neural network de...

📚 Read more at Machine Learning Mastery
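Below is a minimal encoder-decoder LSTM sketch written in PyTorch; it is not taken from the linked articles. The class name `Seq2SeqLSTM` and all sizes are hypothetical. The key design choice is that the encoder's final hidden and cell states become the decoder's initial state. As a result, the 10-step input and the 6-step output do not need the same length.

```python
import torch
import torch.nn as nn


class Seq2SeqLSTM(nn.Module):
    """Hypothetical minimal encoder-decoder: the encoder's final (h, c) seeds the decoder."""

    def __init__(self, in_dim: int, out_dim: int, hidden: int = 32):
        super().__init__()
        self.encoder = nn.LSTM(in_dim, hidden, batch_first=True)
        self.decoder = nn.LSTM(out_dim, hidden, batch_first=True)
        self.head = nn.Linear(hidden, out_dim)

    def forward(self, src: torch.Tensor, tgt_in: torch.Tensor) -> torch.Tensor:
        # Encode: keep only the final hidden and cell states as a fixed-length summary.
        _, (h, c) = self.encoder(src)
        # Decode with teacher forcing: tgt_in holds the target sequence shifted right.
        dec_out, _ = self.decoder(tgt_in, (h, c))
        return self.head(dec_out)


model = Seq2SeqLSTM(in_dim=3, out_dim=2)
src = torch.randn(4, 10, 3)    # 4 sequences of 10 steps, 3 features each
tgt_in = torch.randn(4, 6, 2)  # decoder inputs for 6 output steps
pred = model(src, tgt_in)
print(pred.shape)  # torch.Size([4, 6, 2])
```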

Encoder-Decoder Seq2Seq for Machine Translation

In so-called seq2seq problems like machine translation (as discussed in Section 10.5), where inputs and outputs both consist of variable-length unaligned sequences, we generally rely on encoder-decod...

📚 Read more at Dive into Deep Learning Book
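During training, encoder-decoder models for machine translation are commonly fed the target sequence shifted right by one position (teacher forcing). At each step the decoder sees the previous correct token and predicts the next one. Below is a pure-Python sketch of that input/label construction. The `<bos>`/`<eos>` tokens and the function name are hypothetical, and the French target sentence is only an illustration.

```python
from typing import List, Tuple

BOS, EOS = "<bos>", "<eos>"  # hypothetical special tokens


def make_decoder_pair(target: List[str]) -> Tuple[List[str], List[str]]:
    """Return (decoder input, decoder label) for teacher-forced training."""
    dec_input = [BOS] + target  # shifted right by one position
    dec_label = target + [EOS]  # what the decoder should predict at each step
    return dec_input, dec_label


dec_in, dec_out = make_decoder_pair(["ils", "regardent", "."])
for x, y in zip(dec_in, dec_out):
    print(f"{x!r:>12} -> {y!r}")
```

At inference time the true previous token is not available, so the decoder's own prediction is fed back in instead.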

How to Develop an Encoder-Decoder Model for Sequence-to-Sequence Prediction in Keras

Last Updated on August 27, 2020. The encoder-decoder model provides a pattern for using recurrent neural networks to address challenging sequence-to-sequence prediction problems such as machine transla...

📚 Read more at Machine Learning Mastery

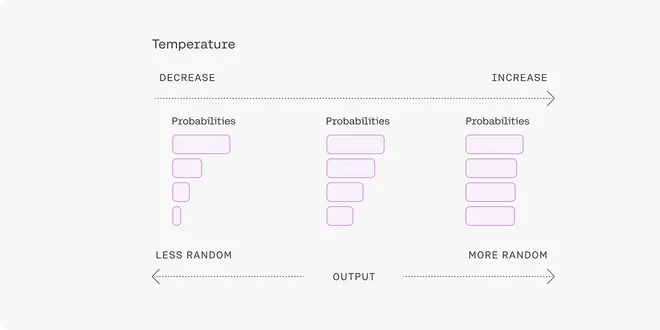

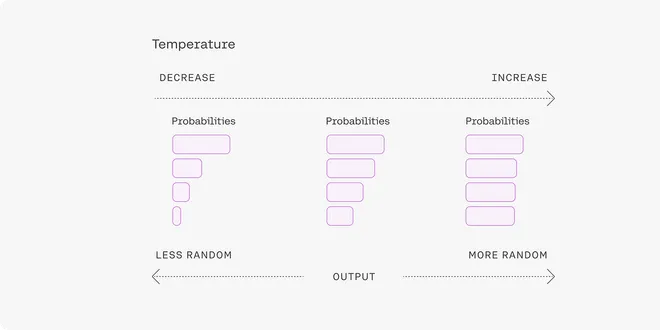

Methods for Decoding Transformers

During text generation tasks, the crucial step of decoding bridges the gap between a model’s internal vector representation and the final human-readable text output. The selection of decoding strategi...

📚 Read more at Python in Plain English (also published in Level Up Coding)
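Beam search is one common decoding strategy; others include greedy decoding, top-k and nucleus sampling, and temperature scaling. The linked article's exact selection of methods is not reproduced here. This pure-Python sketch keeps the `beam_width` best partial sequences ranked by summed log-probability. The probability table in `toy_model` is invented purely to show a case where greedy decoding (`beam_width=1`) misses the higher-probability sequence.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "<eos>"


def beam_search(
    next_log_probs: Callable[[Tuple[str, ...]], Dict[str, float]],
    beam_width: int = 2,
    max_len: int = 5,
) -> List[Tuple[Tuple[str, ...], float]]:
    """Keep the beam_width highest-scoring partial sequences at every step."""
    beams = [((), 0.0)]  # (tokens so far, summed log-probability)
    finished = []
    for _ in range(max_len):
        candidates = [
            (seq + (tok,), score + lp)
            for seq, score in beams
            for tok, lp in next_log_probs(seq).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            # Sequences that just emitted EOS are set aside; the rest keep growing.
            (finished if seq[-1] == EOS else beams).append((seq, score))
        if not beams:
            break
    return sorted(finished + beams, key=lambda c: c[1], reverse=True)


# Hypothetical next-token probabilities; any prefix not listed ends with EOS.
TABLE = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.5, "y": 0.5},
    ("b",): {"x": 0.9, "y": 0.1},
}


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    probs = TABLE.get(prefix, {EOS: 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


for width in (1, 2):
    seq, score = beam_search(toy_model, beam_width=width)[0]
    print(width, seq, round(math.exp(score), 2))
# 1 ('a', 'x', '<eos>') 0.3
# 2 ('b', 'x', '<eos>') 0.36
```

Greedy decoding commits to `a` (0.6) and ends with probability 0.3. A beam of width 2 keeps `b` alive and finds `b x` with probability 0.36.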

Encoder-Decoder Model for Multistep time series forecasting using Pytorch

Encoder-decoder models have provided state-of-the-art results in sequence-to-sequence NLP tasks such as language translation. Multistep time-series forecasting can also be treated as a seq2seq…

📚 Read more at Towards Data Science
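Treating forecasting as seq2seq mainly changes how training examples are built. Each encoder input is a window of past values, and each target is the next `horizon` values. Below is a pure-Python sketch of that windowing, with a hypothetical function name and toy data.

```python
from typing import List, Sequence, Tuple


def make_seq2seq_windows(
    series: Sequence[float], input_len: int, horizon: int
) -> List[Tuple[List[float], List[float]]]:
    """Slide over the series, pairing each input window with the next `horizon` values."""
    pairs = []
    for start in range(len(series) - input_len - horizon + 1):
        enc_in = list(series[start : start + input_len])
        target = list(series[start + input_len : start + input_len + horizon])
        pairs.append((enc_in, target))
    return pairs


series = [10, 11, 12, 13, 14, 15, 16]
for enc_in, target in make_seq2seq_windows(series, input_len=3, horizon=2):
    print(enc_in, "->", target)
# [10, 11, 12] -> [13, 14]
# [11, 12, 13] -> [14, 15]
# [12, 13, 14] -> [15, 16]
```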

Understand sequence to sequence models in a more intuitive way.

Encoder-decoder models are used in machine translation and conversational chatbots. They are also used in image captioning (given an image, briefly describe it) and video captioning (given a video file…

📚 Read more at Analytics Vidhya

TransformerEncoder

TransformerEncoder is a stack of N encoder layers. Users can build the BERT (https://arxiv.org/abs/1810.04805) model with corresponding parameters. Parameters: encoder_layer – an instance of the TransformerEncod...

📚 Read more at PyTorch documentation
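Below is a minimal PyTorch sketch of an encoder stack with a padding mask, assuming PyTorch 1.9 or later. The sizes are arbitrary and far smaller than BERT's, and `src` is random data standing in for embedded tokens. `True` entries in `src_key_padding_mask` mark padding positions that attention ignores. The output can serve as the `memory` argument of a `TransformerDecoder`, with the same mask passed there as `memory_key_padding_mask`.

```python
import torch
import torch.nn as nn

d_model = 32
encoder_layer = nn.TransformerEncoderLayer(
    d_model=d_model, nhead=4, dim_feedforward=64, dropout=0.0, batch_first=True
)
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)

src = torch.randn(2, 6, d_model)  # two embedded sequences, padded to length 6
# True marks padding; the second sequence has only 4 real tokens.
src_key_padding_mask = torch.tensor([
    [False] * 6,
    [False] * 4 + [True] * 2,
])

memory = encoder(src, src_key_padding_mask=src_key_padding_mask)
print(memory.shape)  # torch.Size([2, 6, 32])
```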