Data Science & Developer Roadmaps with Chat & Free Learning Resources

Exploding Gradient Problem

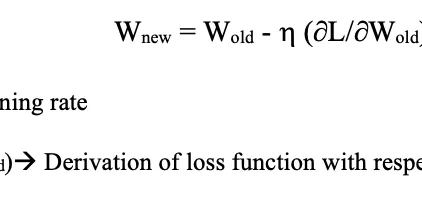

The exploding gradient problem is a significant challenge encountered during the training of deep neural networks. It occurs when large error gradients accumulate, leading to excessively large updates to the model’s weights. This instability can prevent the model from effectively learning from the training data, resulting in poor performance. The problem is particularly prevalent in deep architectures, where the gradients can grow exponentially as they propagate back through the layers. Understanding and addressing the exploding gradient problem is crucial for developing robust neural network models, ensuring stable training, and achieving better predictive accuracy.
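The exponential growth described above can be illustrated with a toy model. The following is a minimal sketch, not a real training loop: it assumes a chain of scalar linear layers that all share one weight, so backpropagation multiplies the gradient by that weight once per layer. The function names `backprop_gradient_norms` and `clip_by_norm` are hypothetical helpers introduced for illustration. The second helper shows norm-based gradient clipping, a mitigation covered by one of the articles listed below.

```python
import math


def backprop_gradient_norms(weight, depth, upstream=1.0):
    """Gradient magnitude after each layer of a chain of scalar layers y = weight * x.

    Toy assumption: every layer has the same scalar weight and no activation.
    """
    norms = []
    grad = upstream
    for _ in range(depth):
        grad *= weight  # chain rule: each layer scales the gradient by its weight
        norms.append(abs(grad))
    return norms


def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


# A weight above 1 makes the gradient grow with depth; below 1 makes it shrink.
exploding = backprop_gradient_norms(weight=1.5, depth=50)
shrinking = backprop_gradient_norms(weight=0.5, depth=50)
print(f"weight=1.5, 50 layers: {exploding[-1]:.3e}")
print(f"weight=0.5, 50 layers: {shrinking[-1]:.3e}")

# Clipping keeps the direction of the gradient but bounds its size.
clipped = clip_by_norm(exploding[-2:], max_norm=1.0)
print("clipped norm:", math.sqrt(sum(g * g for g in clipped)))
```

In a real network the per-layer factor is a Jacobian matrix rather than a scalar, but the same multiplicative effect applies. Deep learning frameworks typically ship their own clipping utilities, which would be used instead of a hand-written helper.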

Vanishing and Exploding Gradient Problems

One of the problems with training very deep neural networks is vanishing and exploding gradients (i.e., when training a very deep neural network, sometimes derivatives become very, very small…

📚 Read more at Analytics Vidhya

Exploding And Vanishing Gradient Problem: Math Behind The Truth

Hello Stardust! Today we’ll see the mathematical reason behind the exploding and vanishing gradient problem, but first let’s understand the problem in a nutshell. “Usually, when we train a Deep model using…

📚 Read more at Becoming Human: Artificial Intelligence Magazine

How to Avoid Exploding Gradients With Gradient Clipping

Last updated on August 28, 2020. Training a neural network can become unstable given the choice of error function, learning rate, or even the scale of the target variable. Large updates to weights duri...

📚 Read more at Machine Learning Mastery

A Gentle Introduction to Exploding Gradients in Neural Networks

Last updated on August 14, 2019. Exploding gradients are a problem where large error gradients accumulate and result in very large updates to neural network model weights during training. This has the ...

📚 Read more at Machine Learning Mastery

Vanishing and Exploding Gradients

In this blog, I will explain how a sigmoid activation can have both vanishing and exploding gradient problems. Vanishing and exploding gradients are among the biggest problems that the neural network…

📚 Read more at Level Up Coding

Vanishing & Exploding Gradient Problem: Neural Networks 101

What are Vanishing & Exploding Gradients? In one of my previous posts, we explained how neural networks learn through the backpropagation algorithm. The main idea is that we start on the output layer and ...

📚 Read more at Towards Data Science

The Vanishing/Exploding Gradient Problem in Deep Neural Networks

A difficulty that we are faced with when training deep Neural Networks is that of vanishing or exploding gradients. For a long period of time, this obstacle was a major barrier for training large…

📚 Read more at Towards Data Science

What Are Gradients, and Why Do They Explode?

Gradients are arguably the most important fundamental concept in machine learning. In this post we will explore the concept of gradients, what makes them vanish and explode, and how to rein them in. W...

📚 Read more at Towards Data Science

The Vanishing Gradient Problem

The problem: as more layers using certain activation functions are added to neural networks, the gradients of the loss function approach zero, making the network hard to train. Why: The sigmoid…

📚 Read more at Towards Data Science

The Problem of Vanishing Gradients

Vanishing gradients occur while training deep neural networks using gradient-based optimization methods. They occur due to the nature of the backpropagation algorithm that is used to train the neural…

📚 Read more at Towards Data Science

Gradient Descent Problems and Solutions in Neural Networks

Gradient problems are obstacles that prevent neural networks from training. They usually appear in artificial neural networks that use gradient-based methods and backpropagation. But…

📚 Read more at Analytics Vidhya

Gradient Boosting from Theory to Practice (Part 1)

Gradient boosting is a widely used machine learning technique that is based on a combination of boosting and gradient descent. Boosting is an ensemble method that combines multiple weak learners (or ...

📚 Read more at Towards Data Science