Adam Optimizer in Machine Learning

Unlocking the Magic of Adam: The Math Behind Deep Learning’s Favorite Optimizer

Understanding the statistical mechanics: how moments and bias correction drive optimization. At the heart of every deep learning model lies a simple goal: minimizing error. We measure t...

📚 Read more at Towards AI

Implementation of Adam Optimizer: From Scratch

If you’ve ever spent any time in the world of machine learning (ML), you’ve probably heard of the Adam Optimizer. It’s like the MrBeast of optimization algorithms — everybody knows it, everybody uses ...

📚 Read more at Towards AI

Optimisation Algorithm — Adaptive Moment Estimation(Adam)

If you have ever used any deep learning package, you have almost certainly used Adam as the optimiser. I remember there was a period of time when I had the notion that whenever you try to optimise…

📚 Read more at Towards Data Science

Adam — latest trends in deep learning optimization.

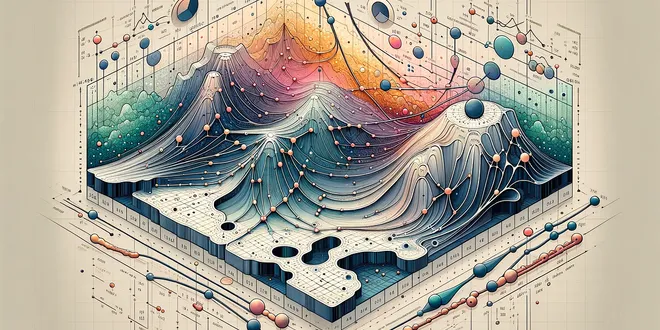

Adam [1] is an adaptive learning rate optimization algorithm that’s been designed specifically for training deep neural networks. First published in 2014, Adam was presented at a very prestigious…

📚 Read more at Towards Data Science

Why Should Adam Optimizer Not Be the Default Learning Algorithm?

An increasing share of deep learning practitioners is training their models with adaptive gradient methods due to their rapid training time. Adam, in particular, has become the default algorithm used ...

📚 Read more at Towards AI

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning

Last Updated on January 13, 2021. The choice of optimization algorithm for your deep learning model can mean the difference between good results in minutes, hours, and days. The Adam optimization algor...

📚 Read more at Machine Learning Mastery

How to implement an Adam Optimizer from Scratch

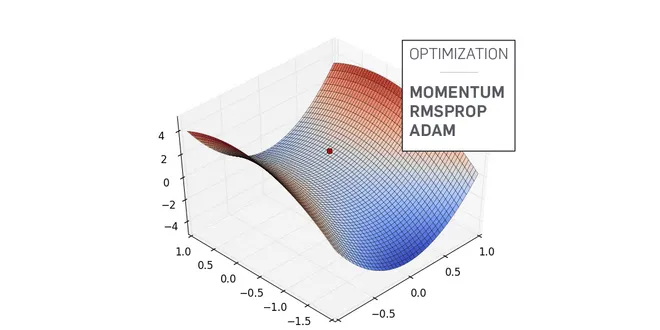

Adam is an algorithm that optimizes stochastic objective functions based on adaptive estimates of moments. The update rule of Adam is a combination of momentum and the RMSProp optimizer. The rules are…

📚 Read more at Towards Data Science
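
As a rough illustration of the idea in the entry above (a momentum-style first moment combined with an RMSProp-style second moment, plus bias correction), here is a minimal pure-Python sketch. It is not taken from any of the linked articles. The function names `adam_step` and `minimize_quadratic` are hypothetical, the hyperparameter defaults are the commonly cited ones, and the toy objective f(x) = (x - 3)^2 is an assumption chosen only to make the example runnable.

```python
import math


def adam_step(params, grads, m, v, t,
              lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to lists of floats.

    t is the 1-based step count. Returns (new_params, new_m, new_v).
    """
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # First moment: exponential moving average of gradients (momentum part).
        m_i = beta1 * m_i + (1 - beta1) * g
        # Second moment: moving average of squared gradients (RMSProp part).
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction offsets the zero initialisation of m and v.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


def minimize_quadratic(steps=2000, lr=0.01, start=5.0):
    """Toy usage (assumed objective): minimise f(x) = (x - 3)^2 from `start`."""
    x, m, v = [start], [0.0], [0.0]
    for t in range(1, steps + 1):
        grads = [2.0 * (x[0] - 3.0)]  # analytic gradient of (x - 3)^2
        x, m, v = adam_step(x, grads, m, v, t, lr=lr)
    return x[0]
```

Calling `minimize_quadratic()` should move `x` from 5.0 toward the minimiser at 3.0. On the very first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly `lr` regardless of the gradient's scale.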

The Math behind Adam Optimizer

Why is Adam the most popular optimizer in Deep Learning? Let’s understand it by diving into its math and recreating the algorithm. If you...

📚 Read more at Towards Data Science

The Math Behind Nadam Optimizer

In our previous discussion on the Adam optimizer, we explored how Adam has transformed the optimization landscape in machine learning with its adept handling of adaptive learning rates. Known for its…

📚 Read more at Towards Data Science

Multiclass Classification Neural Network using Adam Optimizer

I wanted to see the difference between the Adam optimizer and the gradient descent optimizer in a more hands-on way, so I decided to implement it. For this, I have taken the iris dataset and…

📚 Read more at Towards Data Science

The New ‘Adam-mini’ Optimizer Is Here To Cause A Breakthrough In AI

A deep dive into how optimizers work, their developmental history, and how the 'Adam-mini' optimizer enhances LLM training like never…

📚 Read more at Level Up Coding

Optimizers for machine learning

In this blog we are going to learn about optimizers, which are the most important part of machine learning. I try to explain each and every concept of optimizers in simple terms and with visualization so…

📚 Read more at Analytics Vidhya