Adaptive Gradient Algorithm (AdaGrad)

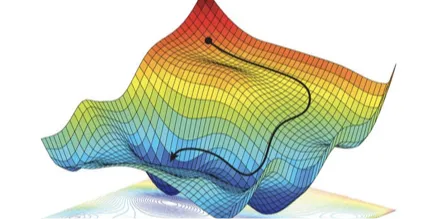

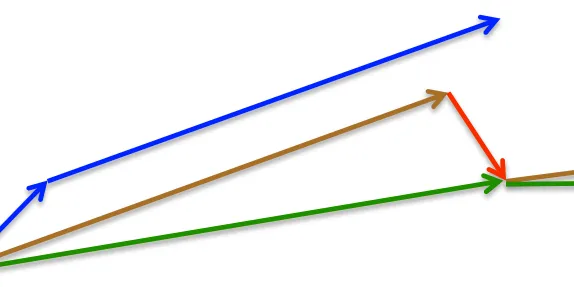

The Adaptive Gradient Algorithm, commonly known as AdaGrad, is an optimization technique that extends gradient descent. Unlike traditional gradient descent, which applies a uniform learning rate across all parameters, AdaGrad adapts the learning rate for each parameter based on its historical gradients: each step is divided by the square root of the sum of that parameter's squared past gradients. Parameters that receive frequent or large updates therefore get smaller effective learning rates, while those with infrequent updates keep larger ones. This adaptive approach helps AdaGrad navigate optimization landscapes whose curvature varies across dimensions, and it is particularly useful when gradients are sparse. On such problems it can speed up convergence, although the accumulated sum only grows, so the effective learning rate keeps shrinking as training proceeds.
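The per-parameter scaling described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `adagrad`, the test objective f(x, y) = x² + 10y², and the values of `lr`, `eps`, and `n_steps` are all assumptions chosen for the example.

```python
import numpy as np

def adagrad(grad_fn, x0, lr=0.5, eps=1e-8, n_steps=200):
    """Minimise a function using AdaGrad's per-parameter step sizes.

    grad_fn, lr, eps and n_steps are illustrative choices, not tuned values.
    """
    x = np.asarray(x0, dtype=float).copy()
    accum = np.zeros_like(x)  # running sum of squared gradients, one entry per parameter
    for _ in range(n_steps):
        g = grad_fn(x)
        accum += g ** 2                        # history only ever grows
        x -= lr * g / (np.sqrt(accum) + eps)   # larger history -> smaller step
    return x

# Hypothetical objective with very different curvature per dimension:
# f(x, y) = x^2 + 10 * y^2, whose gradient is (2x, 20y).
def grad_f(p):
    return np.array([2.0 * p[0], 20.0 * p[1]])

x_min = adagrad(grad_f, x0=[1.0, 1.0])
print(x_min)
```

Because each coordinate is divided by its own gradient history, the steeper `y` direction and the flatter `x` direction take steps of comparable size here, which a single shared learning rate would not do.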

Adagrad

Implements Adagrad algorithm. For further details regarding the algorithm we refer to Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. params (iterable) – iterable of p...

📚 Read more at PyTorch documentation

Gradient Descent With AdaGrad From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery

Gradient Descent With Adadelta from Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery

Gradient Descent Optimization With AMSGrad From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery

Gradient Descent Optimization With AdaMax From Scratch

Last Updated on September 25, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitatio...

📚 Read more at Machine Learning Mastery

Gradient Descent Algorithm Explained

Gradient Descent is a machine learning algorithm that operates iteratively to find the optimal values for its parameters. It takes into account a user-defined learning rate and initial parameter…

📚 Read more at Towards AI

Gradient Descent Algorithm

Every machine learning algorithm needs some optimization when it is implemented. This optimization is performed at the core of machine learning algorithms. The Gradient Descent algorithm is one of…

📚 Read more at Analytics Vidhya

Gradient Descent With RMSProp from Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery

Gradient Descent:

Gradient Descent is an iterative optimization algorithm that produces a new point in each iteration based on the gradient at the current point and a learning rate that we initialise at the beginning. Gradient is the vector…

📚 Read more at Analytics Vidhya

Gradient descent algorithms and adaptive learning rate adjustment methods

Here is a quick, concise summary for reference. For a more detailed explanation, please read: http://ruder.io/optimizing-gradient-descent/. Vanilla gradient descent, aka batch gradient descent, computes…

📚 Read more at Towards Data Science

Gradient Descent

Gradient Descent is a first-order iterative optimization algorithm, where optimization, often in Machine Learning, refers to minimizing a cost function J(w) parameterized by the predictive model's…

📚 Read more at Towards Data Science

The Gradient Descent Algorithm

Image by Anja from Pixabay. The What, Why, and Hows of the Gradient Descent Algorithm. Author(s): Pratik Shukla. “The cure for boredom is curiosity. There is no cure for curiosity.” (Dorothy Parker) The ...

📚 Read more at Towards AI