Adversarial Training

Everything you need to know about Adversarial Training in NLP

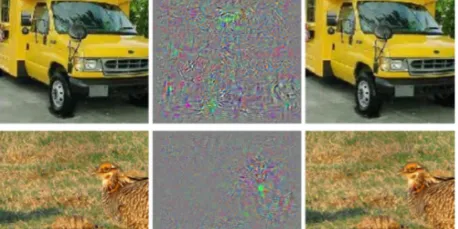

Adversarial training is a fairly recent and very exciting field in machine learning. Since adversarial examples were first introduced by Christian Szegedy[1] in 2013, they have brought to light…

📚 Read more at Analytics Vidhya