Apache Airflow for ML Pipelines

Operationalization of ML Pipelines on Apache Mesos and Hadoop using Airflow

An architecture for orchestrating machine learning pipelines in production on Apache Mesos and Hadoop using Airflow

📚 Read more at Towards Data Science

End-to-end Machine Learning pipeline from scratch with Docker and Apache Airflow

This post describes the implementation of a sample Machine Learning pipeline on Apache Airflow with Docker, covering all the steps required to set up a working local environment from scratch. Let us…

📚 Read more at Towards Data Science

5 Steps to Build Efficient Data Pipelines with Apache Airflow

Uncovering best practices to optimise big data pipelines. Airflow is an open-source workflow orchestration tool. Although used extensively to build dat...

📚 Read more at Towards Data Science

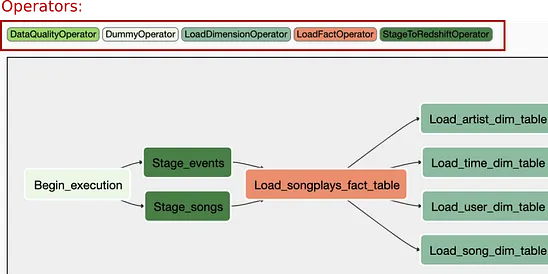

Build Data Pipelines with Apache Airflow

The beginner's guide to Apache Airflow. This is a tutorial on how to build ETL data pipelines using Airflow.

📚 Read more at Towards Data Science

Twitter Data Pipeline using Apache Airflow

Apache Airflow is a workflow scheduler and, in essence, a Python framework that can run any type of task executable from Python, e.g. sending an email, running a Spark job…

📚 Read more at Towards Data Science

5 essential tips when using Apache Airflow to build an ETL pipeline for a database hosted on…

Apache Airflow is one of the best workflow management systems (WMS) that provides data engineers with a friendly platform to automate, monitor, and maintain their complex data pipelines. Started at…

📚 Read more at Towards Data Science

Apache Airflow for containerized data-pipelines

You have probably heard about Apache Airflow before, or you’re using it to schedule your data pipelines right now. And your approach, depending on what you’re going to run, is to use an operator for…

📚 Read more at Towards Data Science

How to build a data extraction pipeline with Apache Airflow

Data extraction pipelines can be hard to build and manage, so it’s a good idea to use a tool that can help you with these tasks. Apache Airflow is a popular open-source workflow management platform…

📚 Read more at Towards Data Science

Is Airflow the Right Choice for Machine Learning Too?

A look at the differences between ETL and machine learning tasks. Apache Airflow is an open source platform that can be used to author, monitor, and schedule data ...

📚 Read more at Better Programming

Apache Airflow — A New Way To Write DAGs

A guide on how to build a data pipeline framework on Apache Airflow to better scale your data infrastructure.

📚 Read more at Towards Data Science

How I Built CI/CD For Data Pipelines in Apache Airflow on AWS

Apache Airflow is a commonly used platform for building data engineering workloads. There are so many ways to deploy Airflow that it’s hard to provide one simple answer on how to build a continuous…

📚 Read more at Towards Data Science

Starting with Apache Airflow to automate a PostgreSQL database on Amazon RDS

For some time now, Apache Airflow has been an important open source tool for building pipelines and automating tasks in the world of data engineering with languages such as Python, from ETL processes…

📚 Read more at Analytics Vidhya