Apache Airflow

Moving fast with Airflow

Simple Guide To Get Started With Apache Airflow. What is Airflow? Apache Airflow is an open-source platform for orchestrating complex workflows and data pipelines. It all...

📚 Read more at The Pythoneers

Getting started with Apache Airflow

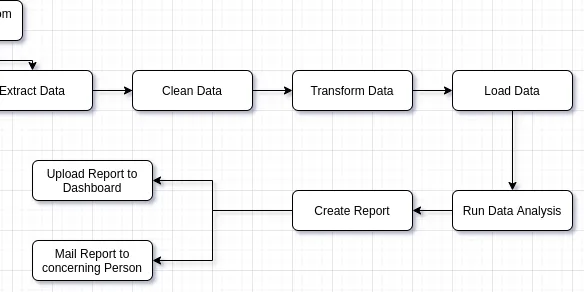

In this post, I am going to discuss Apache Airflow, a workflow management system developed by Airbnb. Earlier I had discussed writing basic ETL pipelines in Bonobo. Bonobo is cool for writing ETL…

📚 Read more at Towards Data Science

Airflow for Beginners

Apache Airflow is a platform to programmatically author, schedule, and monitor workflows. It is one of the best workflow management systems. Airflow was originally developed by Airbnb to manage its…

📚 Read more at Analytics Vidhya

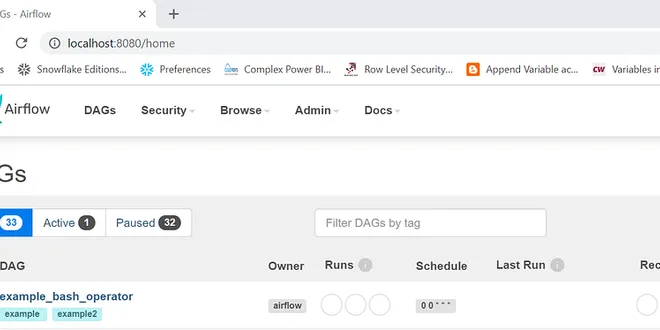

Data Engineering: Installing Apache Airflow on Windows 10 without Docker

Apache Airflow is an open-source platform to author, schedule, and monitor workflows. Airflow helps you create workflows using the Python programming language, and these workflows can be scheduled and…

📚 Read more at Analytics Vidhya
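The entries above stress that Airflow workflows are written in Python and then scheduled. As a rough illustration of interval-based scheduling, the sketch below computes which fixed-length data intervals are already due, assuming the simplified rule that a run covering `[start, end)` becomes due once `end` has passed. The helper `due_intervals` is hypothetical and is not part of Airflow's API; in real Airflow the schedule is configured on the DAG and handled by the scheduler and its timetables.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def due_intervals(
    start: datetime, interval: timedelta, now: datetime
) -> List[Tuple[datetime, datetime]]:
    """Return every [interval_start, interval_end) window that has fully elapsed.

    Simplified assumption (not Airflow's implementation): a run for a window
    becomes due only once `now` has reached the end of that window.
    """
    intervals = []
    current = start
    # Walk forward one interval at a time until the next window is still open.
    while current + interval <= now:
        intervals.append((current, current + interval))
        current += interval
    return intervals


# Usage: a daily schedule starting 2024-01-01, checked at noon on 2024-01-03.
for window in due_intervals(datetime(2024, 1, 1), timedelta(days=1), datetime(2024, 1, 3, 12)):
    print(window)
```

Under this model the window for 2024-01-03 is not yet due at noon that day, because its end (midnight on 2024-01-04) has not passed.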

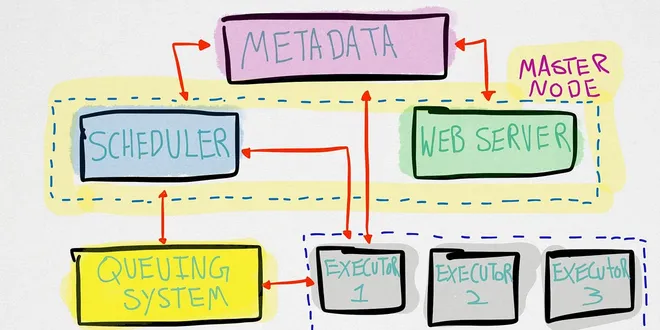

A quick primer on Apache Airflow

Airflow is a platform used to author, schedule, and monitor workflows. It’s essentially a queuing system that runs on a metadata database and a scheduler that runs tasks. Workflows are written as…

📚 Read more at Towards Data Science
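The primer's description of Airflow as a queuing system backed by a metadata database, with a scheduler that runs tasks, can be modelled in miniature. In this hypothetical sketch a plain dict stands in for the metadata database, a `deque` stands in for the task queue, and `run_workflow` is an invented name rather than anything Airflow exposes. The state strings borrow Airflow's vocabulary (`scheduled`, `queued`, `running`, `success`, `failed`, `upstream_failed`), but the transitions are simplified and the workflow is assumed to be acyclic.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def run_workflow(
    tasks: Dict[str, Callable[[], None]], upstream: Dict[str, List[str]]
) -> Dict[str, str]:
    # Stand-in for the metadata database: task id -> current state.
    state = {task_id: "scheduled" for task_id in tasks}
    queue: Deque[str] = deque()

    while True:
        # "Scheduler" pass: queue every task whose upstream tasks all succeeded.
        for task_id, s in state.items():
            deps = upstream.get(task_id, [])
            if s == "scheduled" and all(state[d] == "success" for d in deps):
                state[task_id] = "queued"
                queue.append(task_id)
        if not queue:
            break  # nothing runnable is left
        # "Worker" pass: pop queued tasks, run them, record the outcome.
        while queue:
            task_id = queue.popleft()
            state[task_id] = "running"
            try:
                tasks[task_id]()
                state[task_id] = "success"
            except Exception:
                state[task_id] = "failed"

    # In an acyclic workflow, anything still scheduled was blocked by a failure.
    for task_id, s in state.items():
        if s == "scheduled":
            state[task_id] = "upstream_failed"
    return state


# Usage: a three-step extract -> transform -> load chain.
print(run_workflow(
    {"extract": lambda: None, "transform": lambda: None, "load": lambda: None},
    {"transform": ["extract"], "load": ["transform"]},
))
```

Keeping task state in a separate store, rather than inside the tasks, is what allows the scheduler and the workers to be separate processes in a real deployment; this in-process version only mimics that split.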

Apache Airflow — Part 1

Every programmer loves automating their stuff. Learning and using any automation tool is fun for us. A few months ago, I came across a wonderful open source project called apache-airflow. I have…

📚 Read more at Analytics Vidhya

Apache Airflow

Airflow was born out of Airbnb’s problem of dealing with large amounts of data that were being used in a variety of jobs. To speed up the end-to-end process, Airflow was created to quickly author…

📚 Read more at Towards Data Science

A Complete Introduction to Apache Airflow

Airflow is a tool for automating and scheduling tasks and workflows. If you want to work efficiently as a data scientist, data analyst, or data engineer, it is essential to have a tool that can…

📚 Read more at Towards Data Science

Twitter Data Pipeline using Apache Airflow

Apache Airflow is a workflow scheduler and, in essence, a Python framework that can run any type of task Python can execute, e.g. sending an email, running a Spark job…

📚 Read more at Towards Data Science

5 essential tips when using Apache Airflow to build an ETL pipeline for a database hosted on…

Apache Airflow is one of the best workflow management systems (WMS) that provides data engineers with a friendly platform to automate, monitor, and maintain their complex data pipelines. Started at…

📚 Read more at Towards Data Science

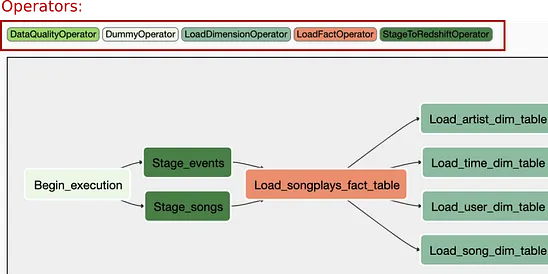

Setting Up Airflow Using Celery Executors in Docker

Apache Airflow is an open-source tool for orchestrating complex computational workflows and data processing pipelines. An Airflow workflow is designed as a directed acyclic graph (DAG). That means…

📚 Read more at Level Up Coding
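The DAG framing in the Celery-executor entry means each task runs only after its upstream tasks, and cycles are not allowed. In Airflow itself, dependencies are typically declared with the bitshift operators (for example `extract >> transform`). The sketch below is not Airflow code: it shows one standard way, Kahn's topological sort, to turn such edges into a valid execution order and to reject a graph that contains a cycle. The function name `topological_order` and the task names are hypothetical.

```python
from collections import deque
from typing import Dict, List


def topological_order(edges: Dict[str, List[str]]) -> List[str]:
    """Order tasks so every upstream task precedes its downstream tasks.

    `edges` maps each task to the tasks directly downstream of it.
    Raises ValueError if the graph has a cycle (i.e. it is not a DAG).
    """
    # Collect every task, including ones that only appear as downstream.
    nodes = set(edges)
    for downstream in edges.values():
        nodes.update(downstream)

    # Count incoming edges (number of unfinished upstream tasks).
    indegree = {n: 0 for n in nodes}
    for downstream in edges.values():
        for d in downstream:
            indegree[d] += 1

    # Start from tasks with no upstream; sort for a deterministic order.
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    order: List[str] = []
    while ready:
        n = ready.popleft()
        order.append(n)
        for d in sorted(edges.get(n, [])):
            indegree[d] -= 1
            if indegree[d] == 0:
                ready.append(d)

    # Tasks left over are stuck behind a cycle.
    if len(order) != len(nodes):
        raise ValueError("cycle detected: graph is not a DAG")
    return order


# Usage: a fan-out / fan-in pipeline.
print(topological_order({
    "extract": ["transform_a", "transform_b"],
    "transform_a": ["load"],
    "transform_b": ["load"],
}))
```

Tasks whose upstream dependencies are all complete at the same moment (`transform_a` and `transform_b` once `extract` finishes) are exactly the ones a distributed executor such as Celery could run in parallel.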

Here Is What I Learned Using Apache Airflow over 6 Years

Apache Airflow has undoubtedly been the most popular open-source project for data engineering for years. It gained popularity at the right time, with The Rise of the Data Engineer. Today, I want to share my journ...

📚 Read more at Towards Data Science