Attention Mechanism

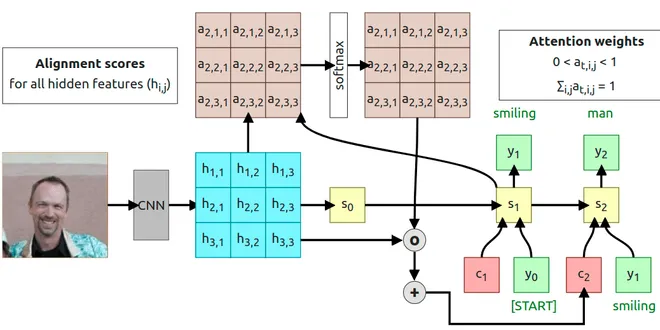

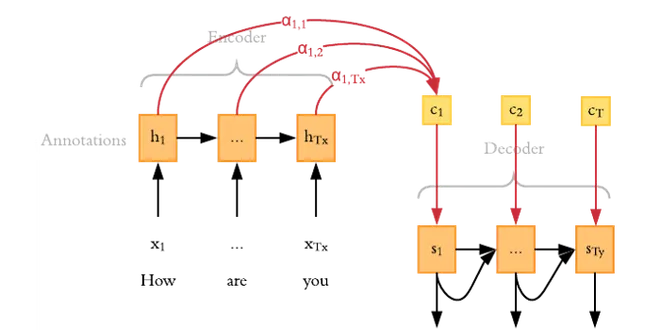

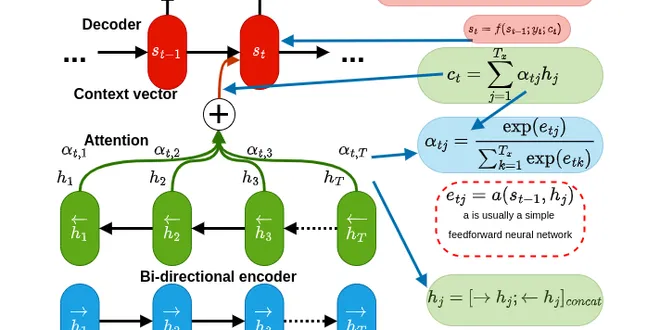

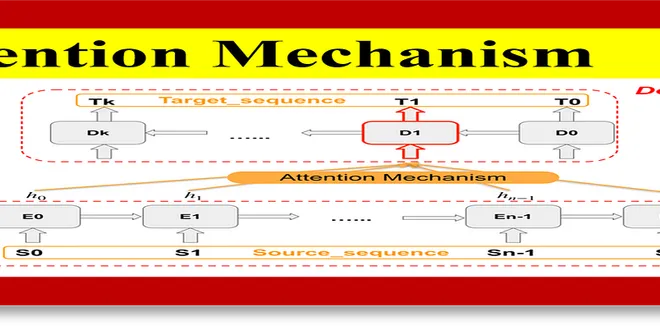

The attention mechanism is a transformative concept in machine learning, particularly in natural language processing (NLP). It addresses the difficulty of learning long-range dependencies in sequences, which traditional sequence models such as recurrent networks often struggle with because information must travel along long signal paths between distant positions. By letting a model focus on the most relevant parts of its input, attention improves performance on tasks such as machine translation, image captioning, and dialogue generation. The model weighs the importance of each input element dynamically, separately for every output it produces, which leads to more accurate and context-aware results; the Transformer architecture is built around this idea.
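
A minimal sketch of the core idea in Python: a query is scored against the key of each input element, a softmax turns the scores into weights that sum to 1, and the output is the weighted average of the values. The dot-product scoring function and the toy vectors are illustrative assumptions; in a real model the queries, keys, and values are produced by learned layers.

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values):
    """Dot-product attention for a single query.

    Scores each key against the query, normalizes the scores with softmax,
    and returns the weighted average of the values together with the weights.
    """
    weights = softmax([dot(query, k) for k in keys])
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical toy data: three input elements, each with a 2-d key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [2.0, 0.0]  # aligns with the first and third keys, not the second

output, weights = attend(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The second element gets a small but non-zero weight: attention is a soft selection, so every input still contributes a little to the output.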

Attention Mechanism

An introduction to the attention mechanism with an example, covering self-attention, the idea of queries, keys, and values, and multi-head attention. Self-attention concept: At the hea...

📚 Read more at Towards AI
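
The query/key/value idea and multi-head attention mentioned in the teaser above can be sketched in plain Python. This assumes the scaled dot-product formulation used in the Transformer; the random matrices stand in for learned projection weights, and every helper name here (`self_attention`, `multi_head_attention`, `random_matrix`) is hypothetical rather than taken from the linked article.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax, so each query's weights over the keys sum to 1."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """One head: every position of x attends to every position of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    scores = [[s / math.sqrt(d_k) for s in row] for row in scores]  # scaling
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


def multi_head_attention(x, heads, w_o):
    """Run each head with its own projections, concatenate, project back."""
    outputs = [self_attention(x, *h)[0] for h in heads]
    concat = [[val for o in outputs for val in o[i]] for i in range(len(x))]
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, n_heads = 3, 4, 2
d_head = d_model // n_heads
x = random_matrix(seq_len, d_model, rng)  # hypothetical token embeddings
# Each head gets its own (W_q, W_k, W_v) triple.
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(n_heads)]
w_o = random_matrix(n_heads * d_head, d_model, rng)

out = multi_head_attention(x, heads, w_o)
print(len(out), len(out[0]))  # 3 4
```

Because each head has its own projections, different heads can learn to attend to different relationships; the output projection `w_o` maps the concatenated heads back to the model dimension, so the output has the same shape as the input.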

The Attention Mechanism from Scratch

Last Updated on October 19, 2022. The attention mechanism was introduced to improve the performance of the encoder-decoder model for machine translation. The idea behind the attention mechanism was to ...

📚 Read more at Machine Learning Mastery
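
For the encoder-decoder setting described above, one well-known formulation is the additive attention of Bahdanau et al., which scores each encoder hidden state against the decoder state with a small feed-forward layer and builds a context vector from the weighted encoder states. The sketch below assumes that formulation; the parameter values are hypothetical stand-ins for learned weights, and the function names are illustrative only.

```python
import math


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores):
    mx = max(scores)
    exps = [math.exp(s - mx) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(decoder_state, encoder_states, w_a, u_a, v_a):
    """Additive (Bahdanau-style) attention for one decoder step.

    score_j = v_a . tanh(W_a s + U_a h_j); the context vector is the
    softmax-weighted sum of the encoder hidden states h_j.
    """
    ws = matvec(w_a, decoder_state)  # identical for every source position
    scores = []
    for h in encoder_states:
        uh = matvec(u_a, h)
        hidden = [math.tanh(a + b) for a, b in zip(ws, uh)]
        scores.append(sum(x * y for x, y in zip(v_a, hidden)))
    alphas = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(a * h[i] for a, h in zip(alphas, encoder_states))
               for i in range(dim)]
    return context, alphas


# Hypothetical values; in a real model W_a, U_a, v_a are learned.
encoder_states = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5]]  # one vector per source word
decoder_state = [1.0, 0.0]
w_a = [[1.0, 0.0], [0.0, 1.0]]
u_a = [[1.0, 0.0], [0.0, 1.0]]
v_a = [1.0, -1.0]

context, alphas = additive_attention(decoder_state, encoder_states, w_a, u_a, v_a)
print([round(a, 3) for a in alphas])
print([round(c, 3) for c in context])
```

The decoder recomputes `alphas` and `context` at every output step, so each target word can draw on a different mix of source words instead of a single fixed-length summary of the sentence.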

Introduction to Attention Mechanism

The attention mechanism is one of the most important inventions in machine learning. At the time of writing (2021), it is used to achieve impressive results in almost every field of ML, and today I want to…

📚 Read more at Towards Data Science

Attention Mechanisms and Transformers

The optic nerve of a primate’s visual system receives massive sensory input, far exceeding what the brain can fully process. Fortunately, not all stimuli are created equal. Focalization and concentrat...

📚 Read more at Dive into Deep Learning Book

Understanding Attention Mechanism: Natural Language Processing

The attention mechanism is one of the recent advancements in deep learning, especially for natural language processing tasks such as machine translation, image captioning, and dialogue generation. It is a…

📚 Read more at Analytics Vidhya

Rethinking Thinking: How Do Attention Mechanisms Actually Work?

The brain, the mathematics, and DL: research frontiers in 2022. Fig. 1. Attention mechanisms' main categories. Photo by author. Table of contents: 1. Introduction: attention in the human brain 2. At...

📚 Read more at Towards Data Science

Deep Dive into Attention Mechanisms: Python Code Included

Attention mechanisms are a game-changing technique in deep learning, allowing models to selectively focus on specific parts of input data. In this blog post, we’ll provide a comprehensive introduction...

📚 Read more at Python in Plain English

Attention Mechanism: A quick intuition

In this article, we will try to understand the basic intuition behind the attention mechanism and why it came into the picture. We aim to understand how encoder-decoder models work and how attention helps…

📚 Read more at Analytics Vidhya

The Luong Attention Mechanism

Last Updated on October 17, 2022. Luong attention sought to introduce several improvements over the Bahdanau model for neural machine translation, notably by introducing two new classes of attentio...

📚 Read more at Machine Learning Mastery
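
Luong et al. also compared several alignment score functions between the current decoder state h_t and each source state h_s: dot (h_t · h_s), general (h_t · W_a h_s), and concat (v_a · tanh(W_a [h_t; h_s])). The sketch below computes attention weights over all source positions with each of the three; the vectors and parameter matrices are hypothetical, and the function names are illustrative rather than from the linked article.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(m, v):
    return [dot(row, v) for row in m]


def softmax(scores):
    mx = max(scores)
    exps = [math.exp(s - mx) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def score_dot(h_t, h_s):
    return dot(h_t, h_s)


def score_general(h_t, h_s, w_a):
    return dot(h_t, matvec(w_a, h_s))


def score_concat(h_t, h_s, w_a, v_a):
    # h_t + h_s concatenates the two Python lists into one vector [h_t; h_s]
    hidden = [math.tanh(x) for x in matvec(w_a, h_t + h_s)]
    return dot(v_a, hidden)


def align(h_t, source_states, score):
    """Attention weights of decoder state h_t over every source position."""
    return softmax([score(h_t, h_s) for h_s in source_states])


# Hypothetical toy vectors and parameters (learned in a real model).
h_t = [1.0, 0.5]
source_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w_general = [[1.0, 0.0], [0.0, 3.0]]
w_concat = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]
v_concat = [1.0, 1.0]

for name, fn in [
    ("dot", score_dot),
    ("general", lambda t, s: score_general(t, s, w_general)),
    ("concat", lambda t, s: score_concat(t, s, w_concat, v_concat)),
]:
    print(name, [round(a, 3) for a in align(h_t, source_states, fn)])
```

With these toy values, the plain dot score favors the first source position, while the learned matrix in the general score shifts the weight toward the second one; this is the extra flexibility the parameterized scores buy at the cost of more parameters.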

Attention Mechanism in a Large Language Model: Unlocking the Power of Context

Member-only story by Punyakeerthi BL, published in Python in Plain English. Learn ho...

📚 Read more at Python in Plain English
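
In decoder-only large language models such as the GPT family, self-attention is typically causal: each token may attend only to itself and earlier tokens, so the model cannot look at the tokens it is being trained to predict. The teaser above does not say which variant the article covers, so this is a general sketch, assuming a precomputed matrix of raw scores; the function name is hypothetical.

```python
import math


def causal_attention_weights(scores):
    """Apply a causal mask, then softmax each row.

    scores[i][j] is the raw score of query position i against key position j.
    Positions j > i (the "future") are set to -inf so they get zero weight.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        masked = [scores[i][j] if j <= i else -math.inf for j in range(n)]
        mx = max(masked)  # always finite, because j == i is never masked
        exps = [math.exp(s - mx) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical raw scores for a 3-token sequence.
scores = [
    [0.0, 1.0, 2.0],
    [1.0, 1.0, 1.0],
    [2.0, 0.0, 1.0],
]
for row in causal_attention_weights(scores):
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, so its row is all weight on position 0; the upper triangle of the weight matrix is always zero, which is what lets the model be trained on every position of a sequence in parallel.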

Smart Composer with Attention Mechanism

A detailed description of the attention mechanism is in my previous blog; it covers in detail how attention works. When we think about the English word “Attention”, we know that it means…

📚 Read more at Analytics Vidhya

The Transformer Attention Mechanism

Last Updated on October 23, 2022. Before the introduction of the Transformer model, attention for neural machine translation was implemented in RNN-based encoder-decoder architectures. The T...

📚 Read more at Machine Learning Mastery
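
For reference, the Transformer (Vaswani et al., 2017) uses scaled dot-product attention, where Q, K, and V are matrices of queries, keys, and values, d_k is the key dimension, and h heads with their own projection matrices are combined by an output projection W^O:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
$$

$$
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O},
\qquad
\mathrm{head}_i = \mathrm{Attention}(Q W_i^{Q},\, K W_i^{K},\, V W_i^{V})
$$

The authors motivate the 1/√d_k factor by noting that for large d_k the dot products grow large in magnitude and push the softmax into regions with very small gradients. In the encoder-decoder attention layers, Q comes from the decoder while K and V come from the encoder output, so the encoder-decoder attention of the earlier RNN models is kept, but without recurrence.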