Backpropagation

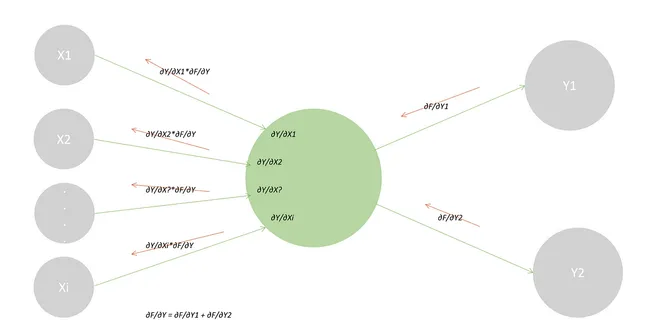

Backpropagation is the fundamental algorithm for training artificial neural networks. It adjusts the network’s weights based on the error between its predictions and the actual outcomes. Each training step has two phases: forward propagation, where inputs pass through the network to produce outputs, and backward propagation, where the error is computed and its gradient is propagated back through the network, layer by layer, so that each weight can be updated. Repeating this process over the training data improves the model’s accuracy, which makes backpropagation essential for building effective machine learning models.
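The two phases can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the network shape (1 input, 2 sigmoid hidden units, 1 linear output), the squared-error loss, the learning rate, and every name below (`train_step`, `params`, `lr`) are assumptions chosen for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_step(params, x, y, lr=0.1):
    """One forward pass, one backward pass, one weight update.

    Hypothetical network: 1 input -> 2 sigmoid hidden units -> 1 linear output,
    trained with the squared-error loss 0.5 * (y_hat - y) ** 2.
    """
    w1, b1, w2, b2 = params["w1"], params["b1"], params["w2"], params["b2"]

    # Forward propagation: input -> hidden activations -> prediction.
    h = [sigmoid(w1[j] * x + b1[j]) for j in range(2)]
    y_hat = sum(w2[j] * h[j] for j in range(2)) + b2
    loss = 0.5 * (y_hat - y) ** 2

    # Backward propagation: start at the output error and apply the chain rule.
    d_out = y_hat - y                              # dL/dy_hat
    grad_w2 = [d_out * h[j] for j in range(2)]     # dL/dw2[j]
    grad_b2 = d_out                                # dL/db2
    # Error reaching each hidden unit, scaled by the sigmoid derivative h * (1 - h).
    d_hidden = [d_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(2)]
    grad_w1 = [d_hidden[j] * x for j in range(2)]  # dL/dw1[j]
    grad_b1 = d_hidden                             # dL/db1[j]

    # Gradient-descent update: move each weight against its gradient.
    for j in range(2):
        w1[j] -= lr * grad_w1[j]
        b1[j] -= lr * grad_b1[j]
        w2[j] -= lr * grad_w2[j]
    params["b2"] = b2 - lr * grad_b2
    return loss


random.seed(0)
params = {
    "w1": [random.uniform(-1, 1) for _ in range(2)],
    "b1": [0.0, 0.0],
    "w2": [random.uniform(-1, 1) for _ in range(2)],
    "b2": 0.0,
}

# Repeat the forward/backward cycle on a single made-up training example.
losses = [train_step(params, x=1.0, y=0.5) for _ in range(100)]
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

The loss recorded at each step should shrink as the cycle repeats, which is the "iterative improvement" described above. Real networks apply the same idea with vectors and matrices, usually through an automatic differentiation library.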

Backpropagation

From mystery to mastery: Decoding the engine behind Neural Networks. The term backpropagation, short for “b...

📚 Read more at Towards AI

Layman’s Introduction to Backpropagation

Backpropagation is the process of tuning a neural network’s weights to improve prediction accuracy. There are two directions in which information flows in a neural network. Backpropagation is done…

📚 Read more at Towards Data Science

Backpropagation

Topics: chain rule refresher, applying the chain rule, saving work with memoization, code example. The goals of backpropagation are straightforward: adjust each weight in the network in proportion...

📚 Read more at Machine Learning Glossary

Backpropagation for people who are afraid of math

Backpropagation is one of the most important concepts in machine learning. There are many online resources that explain the intuition behind this algorithm (IMO the best of these is the…

📚 Read more at Towards Data Science

Backpropagation: Intuition and Explanation

Backpropagation is a popular algorithm used to train neural networks. In this article, we will go over the motivation for backpropagation and then derive an equation for how to update a weight in the…

📚 Read more at Towards Data Science

Understanding Backpropagation Algorithm

The backpropagation algorithm is probably the most fundamental building block in a neural network. It was first introduced in the 1960s and popularized more than two decades later (1986) by Rumelhart, Hinton and…

📚 Read more at Towards Data Science

Backpropagation Through Time

If you completed the exercises in Section 9.5, you would have seen that gradient clipping is vital to prevent the occasional massive gradients from destabilizing training. We hinted that the explodin...
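As a rough illustration of the gradient clipping mentioned in this excerpt, the sketch below rescales a gradient whenever its L2 norm exceeds a threshold. It is not taken from the linked book: the function name `clip_by_global_norm`, the flat-list representation of gradients, and the threshold value are assumptions made for the example.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale a flat list of gradient values so their L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        # Small gradients pass through unchanged.
        return list(grads), total_norm
    # Shrink every component by the same factor, so the direction is preserved.
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


# A made-up "exploding" gradient with norm 5, clipped to norm 1.
clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
norm_after = math.sqrt(sum(g * g for g in clipped))
print(norm_before, clipped, norm_after)
```

Because all components are scaled by one factor, clipping limits the step size without changing the direction of the update.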

📚 Read more at Dive into Deep Learning Book

Backpropagation in Neural Networks

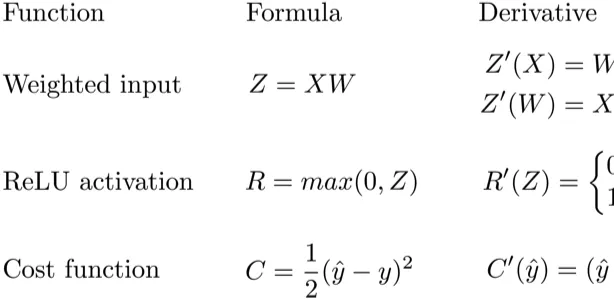

Backpropagation in deep neural networks from scratch, with math and Python code. Equations are derived with the chain rule.

📚 Read more at Towards Data Science

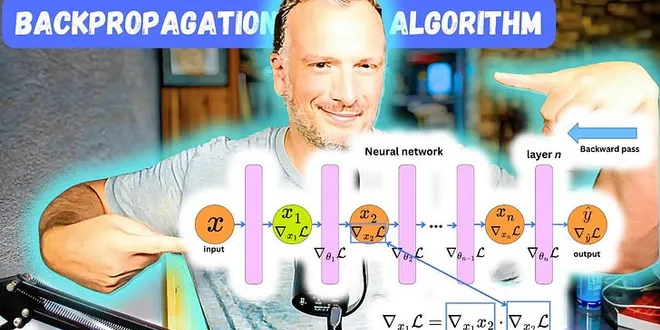

The Backpropagation Algorithm!

Deep Learning - Neural Networks

📚 Read more at The AiEdge Newsletter

Yes, you should listen to Andrej Karpathy, and understand Backpropagation

The backprop mechanism propagates the loss gradient in the reverse direction, from output to input, so that gradient descent can use it to update the weights when training machine learning models.
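The reverse, output-to-input direction can be made concrete with a two-weight chain. The sketch below is an assumption-laden toy, not code from the linked article: the model `y_hat = w2 * tanh(w1 * x)`, the squared-error loss, the numeric values, and the helper names are all hypothetical. It compares the chain-rule gradients against central finite differences, then takes one gradient-descent step.

```python
import math


def forward(x, w1, w2):
    """Hypothetical chain: x -> a = tanh(w1 * x) -> y_hat = w2 * a."""
    a = math.tanh(w1 * x)
    y_hat = w2 * a
    return a, y_hat


def loss_fn(x, y, w1, w2):
    _, y_hat = forward(x, w1, w2)
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, w1, w2):
    """Walk from the loss back toward the input, one chain-rule factor at a time."""
    a, y_hat = forward(x, w1, w2)
    d_y_hat = y_hat - y          # dL/dy_hat: the error at the output
    d_w2 = d_y_hat * a           # dL/dw2
    d_a = d_y_hat * w2           # error passed back to the hidden activation
    d_z = d_a * (1.0 - a * a)    # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    d_w1 = d_z * x               # dL/dw1
    return d_w1, d_w2


x, y, w1, w2 = 0.5, 1.0, 0.8, -0.3
d_w1, d_w2 = backward(x, y, w1, w2)

# Central finite differences as an independent check on the backward pass.
eps = 1e-6
num_w1 = (loss_fn(x, y, w1 + eps, w2) - loss_fn(x, y, w1 - eps, w2)) / (2 * eps)
num_w2 = (loss_fn(x, y, w1, w2 + eps) - loss_fn(x, y, w1, w2 - eps)) / (2 * eps)

# One gradient-descent step using the backpropagated gradients.
lr = 0.1
loss_before = loss_fn(x, y, w1, w2)
loss_after = loss_fn(x, y, w1 - lr * d_w1, w2 - lr * d_w2)
print(d_w1, num_w1, d_w2, num_w2, loss_before, loss_after)
```

Backpropagation only supplies the gradients; gradient descent is the separate rule that decides how far to move each weight once those gradients are known.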

📚 Read more at Towards Data Science

Backpropagation Unveiled: The Engine Behind Neural Network Learning

At a high level, backpropagation works by computing the gradients of the loss function with respect to the weights of the network. The loss function is a measure of how far off the network’s output is...

📚 Read more at Python in Plain English

A Gentle Introduction to Backpropagation Through Time

Last updated on August 14, 2020. Backpropagation Through Time, or BPTT, is the training algorithm used to update weights in recurrent neural networks like LSTMs. To effectively frame sequence predictio...

📚 Read more at Machine Learning Mastery