Batch Size Effects

What Is the Effect of Batch Size on Model Learning?

And why does it matter? Batch size is one of the most crucial hyperparameters in machine learning. It is the hyperparameter that specifies how many samples must be processed before the internal model ...

📚 Read more at Towards AI
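In mini-batch gradient descent, the parameters are updated once per batch, so the batch size determines how many updates happen in each pass over the data. The sketch below shows this on a toy one-parameter linear model fit with mean squared error. `minibatch_sgd` and the toy data are hypothetical and exist only for illustration. They are not code from the article.

```python
import random

def minibatch_sgd(xs, ys, batch_size, lr=0.1, epochs=1, seed=0):
    """Fit y ~ w * x with mini-batch gradient descent on mean squared error.

    Returns the learned weight and the number of parameter updates made.
    """
    rng = random.Random(seed)
    indices = list(range(len(xs)))
    w = 0.0
    updates = 0
    for _ in range(epochs):
        rng.shuffle(indices)
        # Walk the shuffled data in chunks of `batch_size`; the last chunk may be smaller.
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) over this batch with respect to w.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad  # exactly one parameter update per batch
            updates += 1
    return w, updates

xs = [i / 100 for i in range(100)]
ys = [3.0 * x for x in xs]  # noise-free data with true slope 3
for bs in (1, 10, 100):
    w, n_updates = minibatch_sgd(xs, ys, batch_size=bs, epochs=5)
    print(bs, n_updates, round(w, 3))
```

The run uses the same learning rate and number of epochs for every batch size. Under those settings, batch size 1 makes 500 updates and ends close to the true slope. A single full batch makes only 5 updates and stops well short of it. This is a toy comparison. In practice, larger batches are often paired with a larger learning rate or more epochs.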

Batch Effects

What Are Batch Effects And How To Deal With Them

📚 Read more at Towards Data Science
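A simple way to handle an additive batch effect, shown here only as an illustration, is to center each measurement on the mean of its own batch. The sketch makes two assumptions. First, the measurements were processed in labeled batches. Second, the batch shifts every sample in it by the same amount. `center_per_batch` is a hypothetical helper. Established methods such as ComBat model batch effects more carefully.

```python
from statistics import mean

def center_per_batch(values, batches):
    """Subtract each batch's mean from the measurements in that batch.

    values[i] is a measurement and batches[i] is the label of the batch it was processed in.
    """
    batch_means = {
        label: mean(v for v, b in zip(values, batches) if b == label)
        for label in set(batches)
    }
    return [v - batch_means[b] for v, b in zip(values, batches)]

# Made-up example: batch "B" reads 5 units higher than batch "A" across the board.
values = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
batches = ["A", "A", "A", "B", "B", "B"]
print(center_per_batch(values, batches))
```

Centering helps only when each batch contains a similar mix of the conditions being compared. The condition of interest can be confounded with batch, for example when all treated samples were run in batch B. In that case, subtracting batch means removes the real signal along with the batch effect.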

Batch effects are everywhere! Deflategate edition

In my opinion, batch effects are the biggest challenge faced by genomics research, especially in precision medicine. As we point out in this review, they are everywhere among high-throughput experime...

📚 Read more at Simply Statistics

Effect of Batch Size on Training Process and results by Gradient Accumulation

In this experiment, we investigate the effect of batch size and gradient accumulation on training and test accuracy. We investigate the batch size in the context of image classification, taking MNIST...

📚 Read more at Analytics Vidhya
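Gradient accumulation simulates a large batch by summing gradients over several smaller micro-batches before making one parameter update. When the loss is a mean over samples, each micro-batch's gradient can be divided by the number of accumulation steps. The sum then reproduces the full-batch gradient exactly. The sketch below checks this on a toy linear model. `mse_grad` and `accumulated_grad` are illustrative names and are not the article's code.

```python
def mse_grad(w, xs, ys):
    """Gradient of the mean squared error mean((w*x - y)^2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate gradients over equal-size micro-batches, dividing each by the
    number of accumulation steps so the total matches the full-batch mean."""
    assert len(xs) % micro_batch_size == 0, "micro-batches must be equal size"
    steps = len(xs) // micro_batch_size
    total = 0.0
    for k in range(steps):
        chunk = slice(k * micro_batch_size, (k + 1) * micro_batch_size)
        total += mse_grad(w, xs[chunk], ys[chunk]) / steps
    return total

xs = [0.1 * i for i in range(32)]
ys = [2.0 * x + 1.0 for x in xs]
print(mse_grad(0.5, xs, ys), accumulated_grad(0.5, xs, ys, micro_batch_size=8))
```

In PyTorch, the same effect comes from a three-step pattern:

1. Call `backward()` on each micro-batch loss divided by the number of steps.
2. Let the gradients accumulate across micro-batches.
3. Call the optimizer's `step()` and `zero_grad()` only after the last micro-batch.

The equivalence also assumes equal-size micro-batches and no layers whose output depends on batch statistics. Batch Normalization, for instance, still computes its statistics per micro-batch, so accumulation does not fully reproduce a large batch there.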

A definitive guide to effect size

As a data scientist, you will most likely come across the effect size while working on some kind of A/B testing. A possible scenario is that the company wants to make a change to the product (be it a...

📚 Read more at Towards Data Science
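Suppose an A/B test compares the mean of a metric between two groups. One widely used effect size for that setting is Cohen's d: the difference in means divided by the pooled standard deviation. The sketch below uses made-up numbers, and `cohens_d` is a hypothetical helper. The guide may cover other effect-size measures as well.

```python
import math
from statistics import mean, variance

def cohens_d(control, variant):
    """Cohen's d for two independent samples, using the pooled sample standard deviation."""
    n1, n2 = len(control), len(variant)
    pooled_var = ((n1 - 1) * variance(control) + (n2 - 1) * variance(variant)) / (n1 + n2 - 2)
    return (mean(variant) - mean(control)) / math.sqrt(pooled_var)

control = [1.0, 2.0, 3.0, 4.0, 5.0]
variant = [2.0, 3.0, 4.0, 5.0, 6.0]
print(round(cohens_d(control, variant), 3))
```

The sign shows the direction: positive means the variant's mean is higher. The magnitude is in units of standard deviations, which makes effects on different metrics comparable.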

Why Batch Sizes in Machine Learning Are Often Powers of Two: A Deep Dive

In the world of machine learning and deep learning, you’ll often encounter batch sizes that are powers of two: 2, 4, 8, 16, 32, 64, and so on. This isn’t just a coincidence or an...

📚 Read more at Towards AI
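You may want to follow the power-of-two convention after finding a largest workable batch size some other way, for example with a memory search. A bit trick rounds that size down to the nearest power of two. The helpers below are hypothetical. Whether a power of two is actually faster depends on the hardware and framework, which is the question the article examines.

```python
def is_power_of_two(n: int) -> bool:
    """True for 1, 2, 4, 8, ...: a positive power of two has exactly one bit set."""
    return n > 0 and n & (n - 1) == 0

def round_down_to_power_of_two(n: int) -> int:
    """Largest power of two that is <= n (n must be positive)."""
    if n < 1:
        raise ValueError("batch size must be positive")
    return 1 << (n.bit_length() - 1)

print(round_down_to_power_of_two(189), is_power_of_two(48))
```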

Odds Ratio and Effect Size

In statistics, an effect size is a number measuring the strength of the relationship between two variables in a statistical population. Logistic regression is one of the most common binary…

📚 Read more at Analytics Vidhya
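For a binary outcome and a binary exposure, the odds ratio can be computed directly from a 2×2 table of counts. A logistic regression with that exposure as its only predictor recovers the same quantity, because its coefficient is the log odds ratio. The sketch below checks this with made-up counts. `odds_ratio` and `fit_logistic_binary_predictor` are hypothetical helpers. The regression is fit with plain Newton's method rather than a statistics library.

```python
import math

def odds_ratio(exposed_events, exposed_non_events, unexposed_events, unexposed_non_events):
    """Odds of the event among the exposed divided by the odds among the unexposed."""
    return (exposed_events / exposed_non_events) / (unexposed_events / unexposed_non_events)

def fit_logistic_binary_predictor(table, iters=25):
    """Fit logit P(event) = b0 + b1 * x, x in {0, 1}, by Newton's method on grouped counts.

    table maps x -> (events, non_events).
    """
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, (events, non_events) in table.items():
            n = events + non_events
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            resid = events - n * p        # gradient contribution of this group
            weight = n * p * (1.0 - p)    # information (negative Hessian) contribution
            g0 += resid
            g1 += resid * x
            h00 += weight
            h01 += weight * x
            h11 += weight * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: (b0, b1) += H^-1 g, with H the 2x2 information matrix.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

table = {1: (30, 70), 0: (10, 90)}  # made-up counts: x=1 exposed, x=0 unexposed
b0, b1 = fit_logistic_binary_predictor(table)
print(odds_ratio(30, 70, 10, 90), math.exp(b1))
```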

A batch too large: finding the batch size that fits on GPUs

A simple function to identify the batch size for your PyTorch model that can fill the GPU memory. I am sure many of you had the following pa...

📚 Read more at Towards Data Science
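One possible search strategy, not necessarily the article's function, has two phases. It first doubles the batch size until a trial fails. It then binary-searches between the last size that fit and the first that did not. With PyTorch, a real `fits` check would run a forward and backward pass on a dummy batch of that size and catch the out-of-memory error (recent versions raise `torch.cuda.OutOfMemoryError`). Here that check is replaced by a hypothetical linear memory model so the sketch runs without a GPU. `find_max_batch_size` and `simulated_fits` are illustrative names.

```python
def find_max_batch_size(fits, start=1, limit=2**16):
    """Largest batch size b <= limit with fits(b) True.

    Assumes fits() is monotone: if a size fits, every smaller size fits too.
    """
    if not fits(start):
        raise RuntimeError("even the starting batch size does not fit")
    lo = start   # largest size known to fit
    hi = None    # smallest size known not to fit
    candidate = start * 2
    while candidate <= limit:          # phase 1: doubling
        if fits(candidate):
            lo = candidate
            candidate *= 2
        else:
            hi = candidate
            break
    if hi is None:
        hi = limit + 1                 # treat anything past the limit as not fitting
    while hi - lo > 1:                 # phase 2: binary search in (lo, hi)
        mid = (lo + hi) // 2
        if fits(mid):
            lo = mid
        else:
            hi = mid
    return lo

def simulated_fits(batch_size, model_mb=1000, per_sample_mb=37, budget_mb=8000):
    """Hypothetical memory model standing in for a real forward/backward trial run."""
    return model_mb + per_sample_mb * batch_size <= budget_mb

print(find_max_batch_size(simulated_fits))
```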

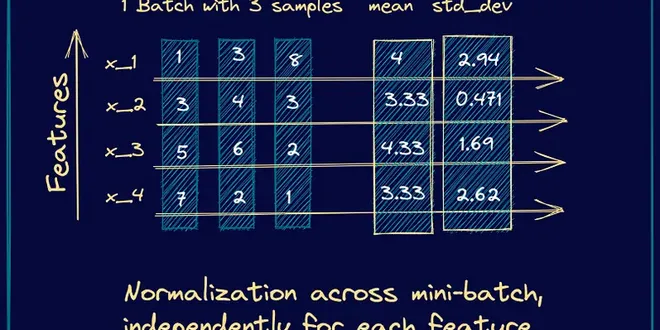

SyncBatchNorm

Applies Batch Normalization over an N-dimensional input (a mini-batch of [N-2]D inputs with an additional channel dimension) as described in the paper Batch Normalization: Accelerating Deep Network Traini...

📚 Read more at PyTorch documentation
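When training is spread over several processes, each process sees only part of the mini-batch. SyncBatchNorm normalizes with statistics computed over the whole mini-batch across processes. The plain-Python sketch below illustrates the idea. Each simulated device contributes its count, sum, and sum of squares, and a stand-in for an all-reduce adds them up. The device lists and helper names are assumptions for illustration, not PyTorch internals. In PyTorch itself, `torch.nn.SyncBatchNorm.convert_sync_batchnorm` replaces a model's BatchNorm layers with SyncBatchNorm.

```python
def local_stats(batch):
    """Partial sums one device can compute from its own slice of the mini-batch."""
    return len(batch), sum(batch), sum(x * x for x in batch)

def all_reduce_sum(per_device):
    """Stand-in for a collective all-reduce: element-wise sum across devices."""
    return tuple(sum(parts) for parts in zip(*per_device))

def global_mean_var(device_batches):
    """Mean and biased variance of one channel over the whole distributed mini-batch."""
    count, total, total_sq = all_reduce_sum([local_stats(b) for b in device_batches])
    mean = total / count
    var = total_sq / count - mean * mean  # E[x^2] - E[x]^2
    return mean, var

device_batches = [[1.0, 2.0, 3.0, 4.0], [10.0, 11.0, 12.0, 13.0]]
print(global_mean_var(device_batches))                 # statistics over all devices
print([global_mean_var([b]) for b in device_batches])  # each device's statistics alone
```

Each device's local variance here is only 1.25, far from the global 21.5. Per-device statistics can therefore differ sharply from full-batch ones when device batches are small or unrepresentative. The sketch uses the sum-of-squares formula for brevity, but that formula can lose precision. Production implementations may combine per-device means and variances instead.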

How to Control the Stability of Training Neural Networks With the Batch Size

Last Updated on August 28, 2020

Neural networks are trained using gradient descent, where the estimate of the error used to update the weights is calculated based on a subset of the training dataset. T...

📚 Read more at Machine Learning Mastery
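Treat each training example's gradient as a random draw. The mini-batch gradient is then an average of B draws, so its variance shrinks roughly in proportion to 1/B. The sketch below measures this with synthetic scalar "gradients". That is an assumption for illustration, since real gradients are vectors. `minibatch_estimate_variance` is a hypothetical helper.

```python
import random
from statistics import pvariance

def minibatch_estimate_variance(per_sample_grads, batch_size, trials=2000, seed=0):
    """Empirical variance of the mini-batch mean gradient over random batches."""
    rng = random.Random(seed)
    estimates = [
        sum(rng.sample(per_sample_grads, batch_size)) / batch_size
        for _ in range(trials)
    ]
    return pvariance(estimates)

rng = random.Random(42)
# Synthetic per-sample gradients with mean 1 and standard deviation 2 (variance 4).
per_sample_grads = [rng.gauss(1.0, 2.0) for _ in range(10_000)]
for bs in (1, 8, 64):
    print(bs, round(minibatch_estimate_variance(per_sample_grads, bs), 4))
```

The per-sample variance is about 4, so the printed variances should land near 4, 0.5, and 0.0625. Smaller batches give noisier weight updates. Larger batches give smoother updates but fewer of them per epoch.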

Implementing a batch size finder in Fastai: how to get a 4x speedup with better generalization!

Batch size finder implemented in Fastai using an OpenAI paper. With a correct batch size, training can be 4 times faster while still having the same or even better accuracy.

📚 Read more at Towards Data Science
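The snippet does not name the OpenAI paper. Assume it is McCandlish et al.'s "An Empirical Model of Large-Batch Training". That paper proposes the gradient noise scale B_simple = tr(Σ) / |G|² as a guide to batch size. Here G is the true gradient and Σ is the per-example gradient covariance. The expected squared norm of a batch-B gradient is |G|² + tr(Σ)/B. Measuring that norm at two batch sizes therefore gives unbiased estimates of both terms. The function below sketches that estimator under these assumptions and is not the Fastai implementation.

```python
def simple_noise_scale(g_small_sq, g_big_sq, b_small, b_big):
    """Estimate B_simple = tr(Sigma) / |G|^2 from squared gradient norms at two batch sizes.

    g_small_sq, g_big_sq: |G_B|^2 measured with batch sizes b_small < b_big.
    """
    # Unbiased estimate of the true squared gradient norm |G|^2.
    grad_sq = (b_big * g_big_sq - b_small * g_small_sq) / (b_big - b_small)
    # Unbiased estimate of tr(Sigma), the total per-example gradient variance.
    trace_sigma = (g_small_sq - g_big_sq) / (1 / b_small - 1 / b_big)
    return trace_sigma / grad_sq

# Synthetic check: plug in the expected norms E|G_B|^2 = |G|^2 + tr(Sigma) / B.
true_grad_sq, true_trace = 4.0, 40.0
g8 = true_grad_sq + true_trace / 8
g80 = true_grad_sq + true_trace / 80
print(simple_noise_scale(g8, g80, 8, 80))
```

The inputs are built from known values (|G|² = 4, tr(Σ) = 40), so the estimate comes out at about 10. Real gradient norms are noisy from step to step. In practice they would be averaged over many steps before taking the ratio.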

Why does Batch Normalization work?

Batch Normalization is a widely used technique for faster and more stable training of deep neural networks. While the reason for the effectiveness of BatchNorm is said ...

📚 Read more at Towards AI
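The training-time computation of Batch Normalization for a single feature has three steps:

1. Subtract the mini-batch mean.
2. Divide by the mini-batch standard deviation, with a small eps added for stability.
3. Apply a learnable scale gamma and shift beta.

The sketch below writes this out with hypothetical names. It omits the running averages that real layers keep for use at inference time.

```python
import math

def batch_norm_forward(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale by gamma and shift by beta.

    batch: list of floats, one value of this feature per example in the mini-batch.
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased variance over the batch
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

print(batch_norm_forward([2.0, 4.0, 6.0, 8.0]))
```

With the default gamma = 1 and beta = 0, the output has mean 0 and variance just under 1, because of eps. The learned gamma and beta let the network undo the normalization where that helps.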