Data & ML Pipelines Integration

Integrating Data CI/CD Pipeline to Machine Learning (ML) Applications

A step-by-step guide to building data CI/CD in production ML systems on serverless architecture. Kuriko Iwai 14 m...

📚 Read more at Level Up Coding

Pipelines in Spark ML

Chaining multiple ML stages in a line. If you are a machine learning enthusiast, you might have encountered various ML stages, such as assembling, encoding, and indexing, whic...

📚 Read more at The Pythoneers

Interactive Pipeline and Composite Estimators for your end-to-end ML model

A data science model development pipeline involves various components including data injection, data preprocessing, feature engineering, feature scaling, and modeling. A data scientist needs to write…...

📚 Read more at Towards Data Science

Big-Data Pipelines with SparkML

Pipelines are a simple way to keep your data preprocessing and modeling code organized. Specifically, a pipeline bundles preprocessing and modeling steps so you can use the whole bundle as if it were…...

📚 Read more at Towards AI

Diving Into Data Pipelines — Foundations of Data Engineering

A data pipeline is a set of rules that stimulates and transforms data from multiple sources to a destination where new values can be obtained. In the most simplistic form, pipelines may extract only…

📚 Read more at Towards AI

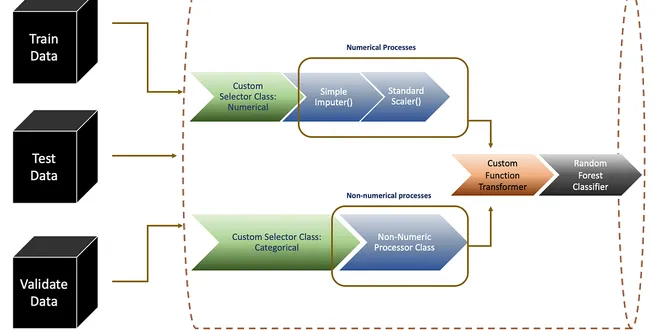

Pipelines

In this tutorial, you will learn how to use **pipelines** to clean up your modeling code. **Pipelines** are a simple way to keep your data preprocessing and modeling code organized. Speci...

📚 Read more at Kaggle Learn Courses

Strategy to Data Pipeline Integration, Business Intelligence Project

The main task of data integration is to secure the flow of data between different systems (for example an ERP system and a CRM system), each system dealing with the data with whatever business logic…

📚 Read more at Towards Data Science

Building Machine Learning Pipelines

ML pipelines automate workflows. But, what does that mean? In a crux, they help develop the sequential flow of data from one estimator/transformer to the next till it reaches the final prediction…

📚 Read more at Towards Data Science

Why You Need a Data Pipeline

A data pipeline is a set of steps that data follows in a series of processes. It helps us make data clearer and less prone to faults in Data Science and Machine Learning. Sometimes these steps are…

📚 Read more at Python in Plain English

Automating Data CI/CD for Scalable MLOps Pipelines

A step-by-step guide to achieving continuous data integration and delivery in production ML systems. Kuriko Iwai 16 min read · Just ...

📚 Read more at Towards AI