Evolving Architectures: NEAT

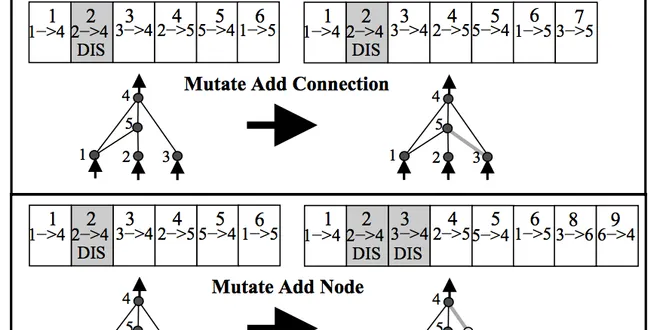

Evolving architectures with NEAT (NeuroEvolution of Augmenting Topologies) is an influential approach in artificial intelligence that combines genetic algorithms with neural network design. NEAT evolves both the weights and the topology of a network. It starts from minimal structures (inputs wired directly to outputs) and adds nodes and connections only as they prove useful, which keeps the search space small early in evolution. Each gene carries a historical marking (an innovation number) recording when its structure first appeared, so genes from two differently shaped parents can be lined up during crossover without expensive topology analysis. NEAT also groups genomes into species, so that new structural innovations have time to optimize their weights before competing with the whole population. NEAT itself uses a direct encoding, with one gene per node or connection; its successor HyperNEAT adds an indirect encoding that lets it represent large networks with regular, repeating connectivity patterns.
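The mechanics described above (complexification, innovation numbers, aligned crossover, and the distance used for speciation) can be sketched in Python. This is a minimal illustration under simplifying assumptions, not the reference NEAT implementation: genomes hold only connection genes (node genes are implied by node ids), innovation numbers are tracked globally per (in, out) pair rather than per generation, the rule for inheriting disabled genes is omitted, and `ConnectionGene`, `InnovationTracker`, `add_node`, `crossover`, and `compatibility` are hypothetical names. The distance coefficients are illustrative defaults.

```python
import random
from dataclasses import dataclass, replace


@dataclass
class ConnectionGene:
    in_node: int
    out_node: int
    weight: float
    enabled: bool
    innovation: int  # historical marking: when this connection first appeared


class InnovationTracker:
    """Assigns one global innovation number per distinct (in_node, out_node) pair."""

    def __init__(self):
        self.next_id = 0
        self.seen = {}

    def get(self, in_node, out_node):
        key = (in_node, out_node)
        if key not in self.seen:
            self.seen[key] = self.next_id
            self.next_id += 1
        return self.seen[key]


def add_node(genome, tracker, new_node_id, rng):
    """Complexify: split an enabled connection A->B into A->new->B, in place."""
    old = rng.choice([g for g in genome if g.enabled])
    old.enabled = False
    # Incoming link gets weight 1.0 and the outgoing link keeps the old weight,
    # so the new node barely changes the network's behavior at first.
    genome.append(ConnectionGene(old.in_node, new_node_id, 1.0, True,
                                 tracker.get(old.in_node, new_node_id)))
    genome.append(ConnectionGene(new_node_id, old.out_node, old.weight, True,
                                 tracker.get(new_node_id, old.out_node)))


def crossover(fitter, other, rng):
    """Line genes up by innovation number: matching genes are inherited at random,
    disjoint and excess genes are taken from the fitter parent."""
    other_by_innovation = {g.innovation: g for g in other}
    child = []
    for gene in fitter:
        match = other_by_innovation.get(gene.innovation)
        chosen = gene if match is None else rng.choice([gene, match])
        child.append(replace(chosen))  # copy so the parents stay untouched
    return child


def compatibility(a, b, c1=1.0, c2=1.0, c3=0.4):
    """Speciation distance: c1*E/N + c2*D/N + c3*(mean weight difference of matching genes)."""
    ga = {g.innovation: g for g in a}
    gb = {g.innovation: g for g in b}
    cutoff = min(max(ga), max(gb))  # genes beyond this innovation number are "excess"
    non_matching = set(ga) ^ set(gb)
    excess = sum(1 for i in non_matching if i > cutoff)
    disjoint = len(non_matching) - excess
    matching = set(ga) & set(gb)
    mean_w_diff = sum(abs(ga[i].weight - gb[i].weight) for i in matching) / max(len(matching), 1)
    n = max(len(a), len(b))
    return c1 * excess / n + c2 * disjoint / n + c3 * mean_w_diff


# Usage: a minimal two-input genome, one structural mutation, then crossover
# (the mutant is treated as the fitter parent).
rng = random.Random(0)
tracker = InnovationTracker()
parent = [ConnectionGene(0, 2, 0.5, True, tracker.get(0, 2)),
          ConnectionGene(1, 2, -0.3, True, tracker.get(1, 2))]
mutant = [replace(g) for g in parent]
add_node(mutant, tracker, new_node_id=3, rng=rng)
child = crossover(mutant, parent, rng)
print([g.innovation for g in child])  # [0, 1, 2, 3]
print(compatibility(mutant, parent))  # 0.5: two excess genes out of N = 4
```

Because innovation numbers, not positions, decide which genes correspond, crossover never has to compare graph structures directly; that is the design choice historical markings buy.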

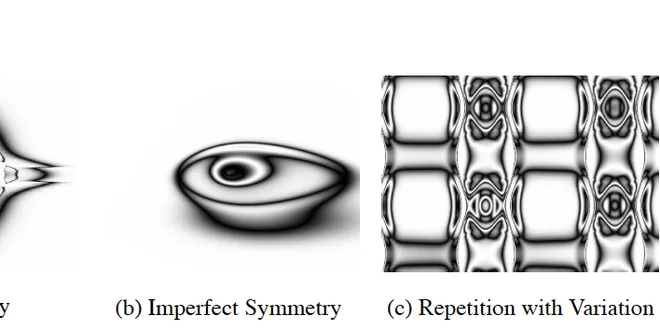

HyperNEAT: Powerful, Indirect Neural Network Evolution

Last week, I wrote an article about NEAT (NeuroEvolution of Augmenting Topologies) and we discussed a lot of the cool things that surrounded the algorithm. We also briefly touched upon how this older…

📚 Read more at Towards Data Science
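In HyperNEAT, NEAT evolves a small compositional pattern-producing network (CPPN) that is queried with the coordinates of pairs of neurons in a fixed "substrate"; its output becomes the weight of the connection between them. The sketch below illustrates only that querying step. `toy_cppn` is a hand-written, hypothetical stand-in for an evolved CPPN, and `build_substrate_weights`, `row`, and the `0.2` expression threshold are illustrative choices; the weight rescaling used in full HyperNEAT implementations is omitted.

```python
import math
from itertools import product


def toy_cppn(x1, y1, x2, y2):
    """Hand-written stand-in for an evolved CPPN: a Gaussian of the horizontal offset
    (favoring local connections) times a cosine of the vertical offset."""
    return math.exp(-4.0 * (x2 - x1) ** 2) * math.cos(math.pi * (y2 - y1))


def build_substrate_weights(sources, targets, cppn, threshold=0.2):
    """Query the CPPN once per (source, target) pair of substrate coordinates.
    Only outputs whose magnitude exceeds the threshold become connections."""
    weights = {}
    for (i, src), (j, dst) in product(enumerate(sources), enumerate(targets)):
        w = cppn(*src, *dst)
        if abs(w) > threshold:
            weights[(i, j)] = w
    return weights


def row(n, y):
    """n evenly spaced nodes on the line at height y, with x in [-1, 1]."""
    return [(-1.0 + 2.0 * k / (n - 1), y) for k in range(n)]


# The same four-argument function wires a 3-to-3 and an 11-to-11 layer pair.
small = build_substrate_weights(row(3, -1.0), row(3, 1.0), toy_cppn)
large = build_substrate_weights(row(11, -1.0), row(11, 1.0), toy_cppn)
print(sorted(small))  # [(0, 0), (1, 1), (2, 2)]
```

This is what makes the encoding indirect: the genome (the CPPN) stays the same size while the network it describes can be made denser, and in this toy case the larger substrate gets a local, banded connectivity pattern from the same function.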

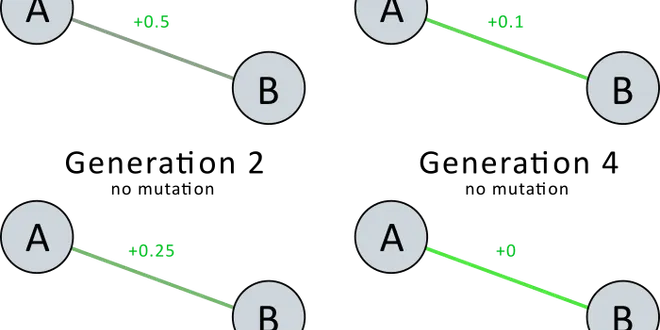

NEAT with Acceleration

While discussing with several other users in the Learn AI Together Discord server (https://discord.gg/learnaitogether), one of us came up with an idea: averaging weight mutations over several generati...

📚 Read more at Towards AI
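The excerpt is cut off before the details, so the following is only one possible reading of "averaging weight mutations over several generations", not the method from the linked article. It assumes each connection carries a hypothetical `velocity`, an exponential moving average of its recent mutations, which is added on top of the fresh Gaussian perturbation, much like momentum in gradient descent. `accelerated_mutation`, `sigma`, and `beta` are illustrative names and values.

```python
import random


def accelerated_mutation(weight, velocity, rng, sigma=0.1, beta=0.9):
    """Assumed variant: Gaussian weight mutation plus an exponential moving
    average of past mutations.

    `velocity` is inherited along with the weight, so a direction that kept
    surviving selection over several generations continues to be applied.
    """
    noise = rng.gauss(0.0, sigma)
    velocity = beta * velocity + (1.0 - beta) * noise
    return weight + noise + velocity, velocity


# Usage: with sigma=0 there is no fresh noise, so only the carried-over
# average moves the weight.
w, v = accelerated_mutation(1.0, 0.5, random.Random(0), sigma=0.0)
print(round(w, 2), round(v, 2))  # 1.45 0.45
```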

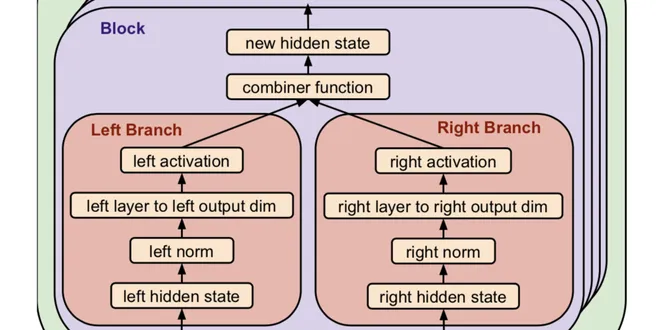

The Evolved Transformer — Enhancing Transformer with Neural Architecture Search

Neural architecture search (NAS) is the process of algorithmically searching for new designs of neural networks. Though researchers have developed sophisticated architectures over the years, the…

📚 Read more at Towards Data Science
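NAS treats architecture design as a search problem: propose a candidate, score it, and use the scores to guide the next proposals. As a generic illustration (not the search procedure used for the Evolved Transformer), here is a minimal evolutionary search over a toy, hypothetical search space. In practice `proxy_score` would train each candidate briefly and return validation accuracy; here it is a made-up function with a known optimum so the loop runs instantly. All names and sizes are assumptions.

```python
import random

# Hypothetical search space: each architecture is one choice per key.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6, 8],
    "hidden_size": [128, 256, 512],
    "activation": ["relu", "gelu", "swish"],
}


def proxy_score(arch):
    """Made-up stand-in for 'train briefly, return validation accuracy'; best is (6, 256, gelu)."""
    return (-abs(arch["num_layers"] - 6)
            - abs(arch["hidden_size"] - 256) / 128
            + (1.0 if arch["activation"] == "gelu" else 0.0))


def random_architecture(rng):
    return {key: rng.choice(options) for key, options in SEARCH_SPACE.items()}


def mutate(arch, rng):
    """Resample one randomly chosen design decision."""
    child = dict(arch)
    key = rng.choice(list(SEARCH_SPACE))
    child[key] = rng.choice(SEARCH_SPACE[key])
    return child


def evolve(generations, population_size=20, tournament_size=5, seed=0):
    """Steady-state evolution: tournament-select a parent, add its mutant, drop the worst."""
    rng = random.Random(seed)
    population = [random_architecture(rng) for _ in range(population_size)]
    for _ in range(generations):
        parent = max(rng.sample(population, tournament_size), key=proxy_score)
        population.append(mutate(parent, rng))
        population.remove(min(population, key=proxy_score))
    return max(population, key=proxy_score)


best = evolve(generations=200)
print(best, proxy_score(best))
```

Since the worst candidate is removed each step, the best score in the population never decreases; the expensive part in real NAS is entirely inside the scoring function.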

Evolutionary Architecture: Supporting Constant Change

Through continuous improvement, technology adoption, and not being complacent. The first principle of an evolutionary architecture is to enable incremental change in an architecture over time — Though...

📚 Read more at Better Programming

Architectures — Part 1

In this lecture, we look into the early deep architectures, starting from LeNet, through AlexNet, all the way to GoogLeNet.

📚 Read more at Towards Data Science

Evolving Deep Neural Networks

Deep learning architectures are getting harder to design, but evolutionary algorithms may help us overcome this. This review presents important recent research on this topic.

📚 Read more at Towards Data Science

How do we teach a machine to program itself? — NEAT learning.

In this post, I will try to explain a method of machine learning called Evolving Neural Networks through Augmenting Topologies (NEAT). I love to learn. It is really exciting to open up a book or a…

📚 Read more at Towards Data Science

The Architecture and Implementation of LeNet-5

LeNet-5 is one of the earliest convolutional neural network architectures. Proposed by Yann LeCun, it is a 7-layer network trained on the MNIST dataset.

📚 Read more at Towards AI
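The 7 layers can be traced with the standard output-size formula for convolution and pooling windows, `(W - K + 2P) // S + 1`. The sketch assumes the layer sizes of the original LeNet-5 design: a 32x32 input (larger than MNIST's 28x28 images), 5x5 convolutions, and 2x2 subsampling with stride 2. It tracks only tensor shapes, not C3's partial connectivity, and `LENET5_LAYERS` and `lenet5_shapes` are illustrative names.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window: (W - K + 2P) // S + 1."""
    return (size - kernel + 2 * padding) // stride + 1


# (name, channels or units after the layer, kernel, stride); kernel None = fully connected
LENET5_LAYERS = [
    ("C1", 6, 5, 1),             # convolution, 6 feature maps
    ("S2", 6, 2, 2),             # subsampling (pooling)
    ("C3", 16, 5, 1),            # convolution, 16 feature maps
    ("S4", 16, 2, 2),            # subsampling
    ("C5", 120, 5, 1),           # 5x5 convolution over a 5x5 input, so the output is 1x1
    ("F6", 84, None, None),      # fully connected
    ("OUTPUT", 10, None, None),  # one unit per digit class
]


def lenet5_shapes(input_size=32):
    size, shapes = input_size, []
    for name, channels, kernel, stride in LENET5_LAYERS:
        if kernel is not None:
            size = conv_output_size(size, kernel, stride)
            shapes.append((name, channels, size, size))
        else:
            shapes.append((name, channels))
    return shapes


for shape in lenet5_shapes():
    print(shape)
# ('C1', 6, 28, 28) ... ('C5', 120, 1, 1), ('F6', 84), ('OUTPUT', 10)
```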

Architectures — Part 2

In this lecture, we explore deeper architectures such as Inception V2 and V3 and explain the concept of exponential feature reuse.

📚 Read more at Towards Data Science

Architectures — Part 4

In this lecture, we look at different ideas for developing ResNets further, in particular how to combine them with other architectures.

📚 Read more at Towards Data Science

Neuroevolution — evolving Artificial Neural Network topology from scratch

This article shows how to build and train artificial neural networks with the NEAT algorithm. It considers the weaknesses of current gradient-descent-based training methods and shows a way to improve on them.

📚 Read more at Becoming Human: Artificial Intelligence Magazine

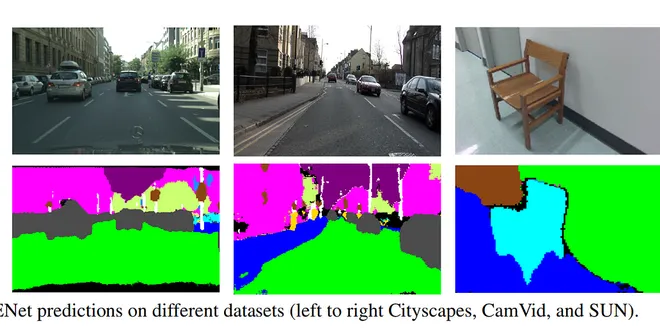

ENet — A Deep Neural Architecture for Real-Time Semantic Segmentation

This is a summary of the paper ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation by Adam Paszke et al. (https://arxiv.org/abs/1606.02147). ENet (Efficient Neural…

📚 Read more at Towards Data Science