Extract, Transform, Load

A Friendly Introduction to ETL (Extract, Transform, Load) Process in Data Engineering with Python

What is ETL (Extract, Transform, and Load)? ETL stands for Extract, Transform, and Load. It’s a three-step process in data engineering that helps move data from its source to a target system. Let’s bre...

📚 Read more at Python in Plain English
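
To make the three steps named in the entry above concrete, here is a minimal sketch using only the Python standard library. It is not taken from the linked article: the sample CSV text, the `payments` table, and the function names `extract`, `transform`, and `load` are all hypothetical and chosen purely for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read a file, API, or database.
RAW_CSV = "name,amount\nalice,10\nbob,not_a_number\ncarol,32\n"


def extract(text):
    """Extract: read rows from a CSV source (an in-memory string here)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: title-case names and drop rows whose amount is not an integer."""
    cleaned = []
    for row in rows:
        try:
            amount = int(row["amount"])
        except ValueError:
            continue  # skip malformed rows instead of failing the whole run
        cleaned.append((row["name"].title(), amount))
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into a target table (hypothetical schema)."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


# Run the three steps in order against an in-memory SQLite target.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
total = conn.execute("SELECT SUM(amount) FROM payments").fetchone()[0]
print(total)
```

Keeping each step as its own function means the transform logic can be tested without touching the source or the target system.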

Transforms

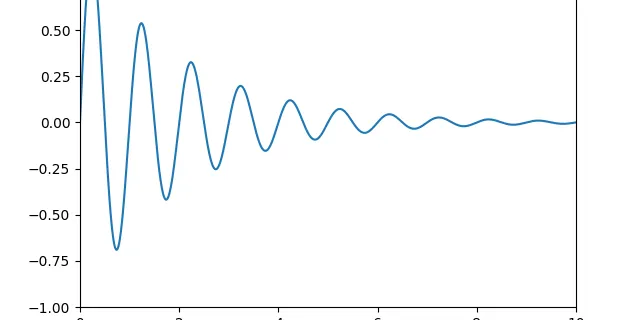

Data does not always come in its final processed form that is required for training machine learning algorithms. We use transforms to perform some manipulation of the data and make it suita...

📚 Read more at PyTorch Tutorials

Transformations Tutorial

Like any graphics packages, Matplotlib is built on top of a transformation framework to easily move between coordinate systems, the userland data coordinate system, the axes c...

📚 Read more at Matplotlib Tutorials

What is Data Extraction? A Python Guide to Real-World Datasets

Data extraction involves pulling data from different sources and converting it into a useful format for further processing or analysis. It is the first step of the Extract-Transform-Load pipeline…

📚 Read more at Towards Data Science

Build The World’s Simplest ETL (Extract, Transform, Load) Pipeline in Ruby With Kiba

You can always roll your own, but a number of packages exist to make writing ETL’s clean, modular and testable. ETL stands for “extract, transform, load”, but unless you come from a data mining…

📚 Read more at Towards Data Science

Data Warehouse Transformation Code Smells

There is a strange paradigm in Data Engineering when it comes to transformation code. While we increasingly hold extract and load (“EL”) programming to production software standards, transform code…

📚 Read more at Towards Data Science

ETL Using Luigi

In computing, extract, transform, load (ETL) is the general procedure of copying data from one or more sources into a destination system which represents the data differently from the source(s) or…

📚 Read more at Analytics Vidhya

Transitioning from ETL to ELT

ETL (Extract-Transform-Load) and ELT (Extract-Load-Transform) are two terms commonly used in the realm of Data Engineering and more specifically in the context of data ingestion and transformation. Wh...

📚 Read more at Towards Data Science

Transformer

A transformer model. User is able to modify the attributes as needed. The architecture is based on the paper “Attention Is All You Need”. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Ll...

📚 Read more at PyTorch documentation

Building an ELT Pipeline in Python and Snowflake

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two processes used for integrating and transforming data, but they have different approaches. Think of it like cooking a meal —…

📚 Read more at Towards Data Science

A new contender for ETL in AWS?

ETL — or Extract, Transform, Load — is a common pattern for processing incoming data. It allows efficient use of resources by bunching the “transform” into a single bulk operation, often making it…

📚 Read more at Towards Data Science

Google Generative AI Transformations

Large language models (LLMs) can extract information and generate information, but they can also transform it, making extract, transform, and load (ETL) a potentially different effort entirely. I’ll…

📚 Read more at Towards Data Science