LIME

Understanding LIME

Local Interpretable Model-agnostic Explanations (LIME) is a Python project developed by Ribeiro et al. [1] to interpret the predictions of any supervised Machine Learning (ML) model. Most ML…

📚 Read more at Towards Data Science
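The core idea (perturb the instance, query the black box, weight samples by proximity, fit a simple weighted model) can be sketched in a few lines of NumPy. This is an illustrative simplification, not the `lime` package's implementation, which for example can discretize continuous features and by default fits a ridge regression. The function `lime_tabular_sketch`, the Gaussian sampling, the kernel-width heuristic, and the toy `black_box` model are all assumptions made for this example.

```python
import numpy as np


def lime_tabular_sketch(predict_fn, x, scale, num_samples=5000, kernel_width=None, seed=0):
    """Explain predict_fn at x with a proximity-weighted linear surrogate (hypothetical helper).

    predict_fn: maps an (n, d) array to n scores (e.g. one class probability).
    x: the (d,) instance to explain.
    scale: per-feature standard deviations used to draw perturbations.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # heuristic width in scaled units (an assumption)
    # 1. Sample perturbations around x (Gaussian noise, scaled per feature).
    z = x + rng.normal(size=(num_samples, d)) * scale
    # 2. Query the black box on every perturbed point.
    y = np.asarray(predict_fn(z), dtype=float)
    # 3. Weight samples by proximity to x with an exponential kernel.
    dist = np.linalg.norm((z - x) / scale, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 4. Weighted least squares for y ≈ b + (z - x) @ coef.
    A = np.hstack([np.ones((num_samples, 1)), z - x])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[0], beta[1:]  # intercept, local effect of each feature


def black_box(z):
    # Hypothetical model: uses features 0 and 1, ignores feature 2.
    return 3.0 * z[:, 0] - 2.0 * z[:, 1]


x0 = np.array([1.0, 2.0, 5.0])
intercept, coef = lime_tabular_sketch(black_box, x0, scale=np.ones(3))
print(intercept, coef)
```

Because this toy model is exactly linear, the surrogate recovers its coefficients (3, -2, 0), and the intercept equals the model's output at `x0` (-1). For a nonlinear model, the coefficients describe only the behavior near `x0`.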

What’s Wrong with LIME

Local Interpretable Model-agnostic Explanations (LIME) is a popular Python package for explaining individual predictions of text classifiers or of classifiers that act on tables (NumPy arrays…

📚 Read more at Towards Data Science
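For text, a common way to perturb an instance is to delete words. The sketch below assumes a binary keep/drop mask per token, a cosine-style proximity kernel with an illustrative width, and a hypothetical lexicon-based classifier (`toy_sentiment`). It fits a weighted linear model on the masks so that each word receives a weight. Unlike the `lime` package's text explainer, it treats every token position as a separate feature, so repeated words are not merged.

```python
import numpy as np


def explain_text_sketch(classifier_fn, text, num_samples=2000, seed=0):
    """Return (word, weight) pairs, largest |weight| first (hypothetical helper).

    classifier_fn: maps a list of strings to one score per string.
    """
    rng = np.random.default_rng(seed)
    words = text.split()
    d = len(words)
    # Binary masks: 1 keeps a word, 0 removes it. Row 0 is the unperturbed text.
    masks = rng.integers(0, 2, size=(num_samples, d))
    masks[0] = 1
    docs = [" ".join(w for w, keep in zip(words, m) if keep) for m in masks]
    y = np.asarray(classifier_fn(docs), dtype=float)
    # Cosine similarity between a mask with k ones and the all-ones mask is sqrt(k / d).
    dist = 1.0 - np.sqrt(masks.sum(axis=1) / d)
    w = np.exp(-(dist ** 2) / 0.25 ** 2)  # kernel width 0.25 is an illustrative choice
    A = np.hstack([np.ones((num_samples, 1)), masks])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return sorted(zip(words, beta[1:]), key=lambda p: -abs(p[1]))


LEXICON = {"great": 2.0, "boring": -1.5}  # hypothetical word weights


def toy_sentiment(docs):
    # Hypothetical classifier: sums the lexicon weights of the words present.
    return [sum(LEXICON.get(w, 0.0) for w in doc.split()) for doc in docs]


result = explain_text_sketch(toy_sentiment, "the plot was great but the ending was boring")
print(result[:2])
```

Since the toy classifier is additive in word presence, "great" and "boring" receive weights of about 2.0 and -1.5 and every other word gets roughly zero; a real classifier would produce less clean-cut weights.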

LIME Light: Illuminating Machine Learning Models in Plain English

Machine learning models have made significant advancements in various domains, from healthcare to finance and natural language processing. However, the predictions generated by these models are often ...

📚 Read more at The Pythoneers

A Deep Dive on LIME for Local Interpretations

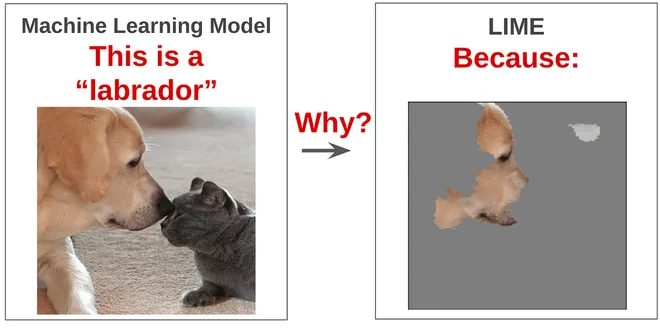

LIME is the OG of XAI methods. It allows us to understand how machine learning models work. Specifically, it can help us understand how individual predictions are made (i.e., local interpretations).

📚 Read more at Towards Data Science
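A local interpretation can differ from one instance to the next, even for the same model. This minimal sketch assumes a hypothetical black box `interaction_model(z) = z0 * z1` and a small Gaussian neighborhood. Near (1, 5) the fitted local slopes come out close to (5, 1), while near (4, -1) they come out close to (-1, 4), matching the partial derivatives (x1, x0) of the product at each point.

```python
import numpy as np


def local_linear_explanation(f, x, scale=0.1, num_samples=4000, seed=0):
    """Coefficients of a proximity-weighted linear fit to f around x (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    delta = rng.normal(scale=scale, size=(num_samples, x.size))
    y = f(x + delta)
    w = np.exp(-np.sum(delta ** 2, axis=1) / (2 * scale ** 2))  # closer samples count more
    A = np.hstack([np.ones((num_samples, 1)), delta])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[1:]


def interaction_model(z):
    # Hypothetical black box whose feature effects depend on where you look.
    return z[:, 0] * z[:, 1]


print(local_linear_explanation(interaction_model, np.array([1.0, 5.0])))   # close to [5, 1]
print(local_linear_explanation(interaction_model, np.array([4.0, -1.0])))  # close to [-1, 4]
```

No single global coefficient per feature describes this model; the explanation is only valid around the instance being explained.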

Local Surrogate (LIME)

Local surrogate models are interpretable models that are used to explain individual predictions of black box machine learning models. Local interpretable model-agnostic explanations (LIME) [50] is a pap...

📚 Read more at Christophm Interpretable Machine Learning Book
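For reference, the optimization problem behind a LIME local surrogate, as formulated in the original LIME paper by Ribeiro et al., is shown below. Here f is the black-box model, g an interpretable model from a class G (for example, sparse linear models), Ω(g) a complexity penalty (for example, the number of nonzero weights), z a perturbed sample with interpretable representation z', π_x a proximity kernel around x with distance D and width σ.

```latex
\xi(x) = \underset{g \in G}{\arg\min}\; \mathcal{L}(f, g, \pi_x) + \Omega(g)

\mathcal{L}(f, g, \pi_x) = \sum_{z, z' \in \mathcal{Z}} \pi_x(z)\,\bigl(f(z) - g(z')\bigr)^2,
\qquad \pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
```

The weighted squared loss rewards agreement with f mainly near x, which is what makes the surrogate local rather than global.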

ML Model Interpretability — LIME

LIME is short for Local Interpretable Model-Agnostic Explanations. Each part of the name reflects something that we desire in explanations. Local refers to local fidelity, i.e., “around” the instance bei...

📚 Read more at Analytics Vidhya
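Local fidelity can be checked numerically. One option, sketched here as an assumption rather than as any particular library's metric, is the weighted R² of the linear surrogate over the perturbed samples. For a hypothetical black box sin(z0) explained at 0, a narrow neighborhood (scale 0.1) gives an R² close to 1, whereas a wide one (scale 3) gives a much lower value, because a straight line cannot follow the sine curve over that range.

```python
import numpy as np


def local_fidelity(f, x, scale, num_samples=4000, seed=0):
    """Weighted R^2 of a linear surrogate fitted to f near x (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    delta = rng.normal(scale=scale, size=(num_samples, x.size))
    y = f(x + delta)
    w = np.exp(-np.sum(delta ** 2, axis=1) / (2 * scale ** 2))  # proximity weights
    A = np.hstack([np.ones((num_samples, 1)), delta])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    resid = y - A @ beta
    y_bar = np.average(y, weights=w)
    # 1 - (weighted residual sum of squares) / (weighted total sum of squares)
    return 1.0 - np.sum(w * resid ** 2) / np.sum(w * (y - y_bar) ** 2)


def sine_model(z):
    # Hypothetical black box: nonlinear in its single feature.
    return np.sin(z[:, 0])


x = np.array([0.0])
high = local_fidelity(sine_model, x, scale=0.1)
low = local_fidelity(sine_model, x, scale=3.0)
print(high, low)
```

The trade-off this illustrates is the one implied by "local": the smaller the neighborhood, the more faithful a simple surrogate can be, but the less of the model's behavior it covers.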

LIME — Explaining Any Machine Learning Prediction

The main goal of the LIME package is to explain any black-box machine learning model. It is used for both classification and regression problems. Let’s try to understand why we need to explain…

📚 Read more at Towards AI

Interpretable Machine Learning for Image Classification with LIME

Local Interpretable Model-agnostic Explanations (LIME) provides explanations for the predictions of any ML algorithm. For images, it finds superpixels strongly associated with a class label.

📚 Read more at Towards Data Science
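For images, the features that are switched on and off are superpixels. This minimal sketch assumes a fixed square grid as a stand-in for real superpixels (the `lime` package instead segments images with algorithms such as quickshift from scikit-image), fills hidden superpixels with 0, and uses a hypothetical `toy_classifier`. The helpers `grid_segments` and `explain_image_sketch` are illustrative names, not library functions.

```python
import numpy as np


def grid_segments(h, w, cell):
    """Label each pixel with the index of its cell x cell grid block (stand-in for superpixels)."""
    rows = np.arange(h)[:, None] // cell
    cols = np.arange(w)[None, :] // cell
    return rows * ((w + cell - 1) // cell) + cols


def explain_image_sketch(classifier_fn, image, segments, num_samples=500, hide_value=0.0, seed=0):
    """Return one weight per superpixel from a proximity-weighted linear fit."""
    rng = np.random.default_rng(seed)
    n_seg = segments.max() + 1
    masks = rng.integers(0, 2, size=(num_samples, n_seg))
    masks[0] = 1  # the unperturbed image
    perturbed = []
    for m in masks:
        img = image.copy()
        img[m[segments] == 0] = hide_value  # switch off the hidden superpixels
        perturbed.append(img)
    y = np.asarray(classifier_fn(np.stack(perturbed)), dtype=float)
    dist = 1.0 - np.sqrt(masks.sum(axis=1) / n_seg)  # cosine distance to the all-on mask
    w = np.exp(-(dist ** 2) / 0.25 ** 2)  # illustrative kernel width
    A = np.hstack([np.ones((num_samples, 1)), masks])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[1:]


def toy_classifier(batch):
    # Hypothetical model: its score is the mean brightness of the top-left 4x4 block.
    return batch[:, :4, :4].mean(axis=(1, 2))


image = np.ones((8, 8))
segments = grid_segments(8, 8, cell=4)  # four 4x4 "superpixels", labeled 0..3
weights = explain_image_sketch(toy_classifier, image, segments)
print(np.round(weights, 3))
```

Only superpixel 0, the top-left block that the toy model actually looks at, receives a nonzero weight; with a real classifier and real segmentation, the highest-weighted superpixels are the regions most strongly associated with the predicted label.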

LIME: Explaining Machine Learning Models with Confidence

Machine learning models have become increasingly complex and accurate over the years, but their opacity remains a s...

📚 Read more at Python in Plain English

Build a LIME explainer dashboard with the fewest lines of code

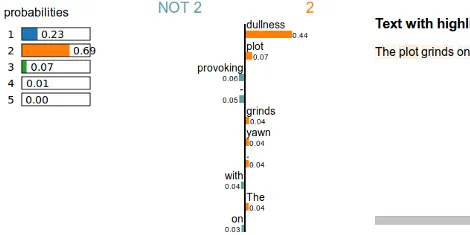

In an earlier post, I described how to explain a fine-grained sentiment classifier’s results using LIME (Local Interpretable Model-agnostic Explanations). To recap, the following six models were…

📚 Read more at Towards Data Science

How the LIME algorithm fails

You may know the LIME algorithm from some of my earlier blog posts. It can be quite useful to “debug” data sets and understand machine learning models better. But LIME is fooled very easily.

📚 Read more at Depends on the definition

Instability of LIME explanations

In this article, I’d like to focus specifically on the LIME framework for explaining machine learning predictions. I already covered the description of the method in this article, in which I also gave…

📚 Read more at Towards Data Science
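One source of instability is easy to reproduce: LIME's perturbations are random, so rerunning the same explanation with different seeds yields different coefficients. The sketch below assumes a hypothetical nonlinear `black_box`, Gaussian perturbations, and a heuristic kernel width. It measures the spread of each coefficient over 20 reruns with 50 and with 5,000 samples; `lime_coefficients` is an illustrative helper, not a library function.

```python
import numpy as np


def lime_coefficients(f, x, scale, num_samples, seed):
    """One run of a minimal LIME-style local linear fit (sampling is the only randomness)."""
    rng = np.random.default_rng(seed)
    delta = rng.normal(scale=scale, size=(num_samples, x.size))
    y = f(x + delta)
    kernel_width = 0.75 * np.sqrt(x.size)  # heuristic width in units of `scale`
    w = np.exp(-np.sum((delta / scale) ** 2, axis=1) / kernel_width ** 2)
    A = np.hstack([np.ones((num_samples, 1)), delta])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[1:]


def black_box(z):
    # Hypothetical nonlinear model with two similarly important features.
    return np.tanh(2 * z[:, 0]) + np.tanh(1.8 * z[:, 1]) + 0.5 * np.sin(3 * z[:, 0] * z[:, 1])


x = np.array([0.3, -0.2])
spread = {}
for n in (50, 5000):
    runs = np.array([lime_coefficients(black_box, x, 1.0, n, seed) for seed in range(20)])
    spread[n] = runs.std(axis=0)  # per-feature standard deviation across 20 reruns
    print(n, spread[n])
```

The spread shrinks as the sample count grows, but for any fixed sampling budget it does not vanish, so comparing explanations across a few seeds is a cheap sanity check.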