Model Serving Techniques

🍮 Edge#147: MLOps – Model Serving

In this issue: we explain what model serving is; we explore the TensorFlow Serving paper; we cover TorchServe, a super simple serving framework for PyTorch. 💡 ML Concept of the Day: Model Serving Con...

📚 Read more at TheSequence

Serving ML Models with TorchServe

A complete end-to-end example of serving an ML model for an image classification task. This post will walk you through the process of serving your deep learning Torch model with ...

📚 Read more at Towards Data Science
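
For a sense of what the client side of such a setup looks like, here is a minimal Python sketch that sends an image to a running TorchServe instance. It assumes TorchServe's default inference API on port 8080 and its `/predictions/{model_name}` endpoint; the helper names and the choice to send the image as a raw binary body are illustrative assumptions, not the linked post's code.

```python
import urllib.request

# Assumption: TorchServe's inference API is on its default port, 8080.
TORCHSERVE_URL = "http://localhost:8080"


def build_prediction_request(model_name: str, image_bytes: bytes,
                             base_url: str = TORCHSERVE_URL) -> urllib.request.Request:
    """Build a POST to TorchServe's /predictions/{model_name} endpoint.

    The raw image bytes travel as the request body; the model's handler
    (for example, an image-classification handler) decodes them.
    """
    return urllib.request.Request(
        f"{base_url}/predictions/{model_name}",
        data=image_bytes,
        # Assumption: send the image as an opaque binary payload.
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def predict(model_name: str, image_path: str,
            base_url: str = TORCHSERVE_URL) -> str:
    """Send an image file to a running TorchServe and return the raw response text."""
    with open(image_path, "rb") as f:
        request = build_prediction_request(model_name, f.read(), base_url)
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

For a model registered under the hypothetical name `resnet-18`, calling `predict("resnet-18", "kitten.jpg")` returns whatever the model's handler emits, typically JSON with class labels and scores for an image classifier.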

🌀 Edge#12: The challenges of Model Serving

In this issue: we explain the concept of model serving; we review a paper in which Google Research outlined the architecture of a serving pipeline for TensorFlow models; we discuss MLflow, one of the ...

📚 Read more at TheSequence

Model serving architectures

Lecture 5 of the MLOps with Databricks course

📚 Read more at Marvelous MLOps Substack

101 For Serving ML Models

Learn to write robust APIs. My love for unders...

📚 Read more at Pratik’s Pakodas 🍿

Stateful model serving: how we accelerate inference using ONNX Runtime

Stateless model serving is what one usually thinks about when using a machine-learned model in production. For instance, a web application handling live traffic can call out to a model server from…

📚 Read more at Towards Data Science
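
To make the stateless pattern concrete, here is a minimal sketch using only Python's standard library. The linear "model", its weights, and the `{"instances": [...]}` request shape are hypothetical (the shape loosely mirrors TensorFlow Serving's REST format); this is not the ONNX Runtime setup from the linked article. The point it illustrates is that each response depends only on the request body, so any replica behind a load balancer can answer any request.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical toy "model": fixed weights for a linear scorer.
# A real server would load a trained model once at startup.
WEIGHTS = [0.5, -1.0, 2.0]
BIAS = 0.1


def predict(features: list) -> float:
    """Score one feature vector; the output depends only on the input."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle_request(body: bytes) -> bytes:
    """Decode a JSON request, run the model, and encode a JSON response.

    No per-client state is read or written, so any replica can serve it.
    """
    payload = json.loads(body)
    scores = [predict(row) for row in payload["instances"]]
    return json.dumps({"predictions": scores}).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            response = handle_request(self.rfile.read(length))
            status = 200
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, missing "instances", or wrong feature count.
            response = json.dumps({"error": str(exc)}).encode("utf-8")
            status = 400
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000) -> None:
    """Block forever, serving predictions on the given port."""
    HTTPServer(("", port), PredictHandler).serve_forever()
```

Running `serve(8000)` and POSTing `{"instances": [[1.0, 2.0, 3.0]]}` to it returns one score per instance; sending the same body twice always yields the same response.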

Serving an Image Classification Model with TensorFlow Serving

This is the second part of a blog series that will cover TensorFlow model training, TensorFlow Serving, and its performance. In the previous post, we took an object-oriented approach to train an…

📚 Read more at Level Up Coding

Several Ways for Machine Learning Model Serving (Model as a Service)

No matter how well you build a model, no one will know about it if you cannot ship it. However, many data scientists want to focus on model building and skip the rest of the work, such as data…

📚 Read more at Towards AI

Serving TensorFlow models with TensorFlow Serving

TensorFlow Serving is a flexible, high-performance serving system for machine learning models, designed for production environments.

📚 Read more at Towards Data Science
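
As a sketch of how a client talks to TensorFlow Serving over REST, the snippet below builds and sends a request to the predict endpoint, `POST /v1/models/{model_name}:predict`, with a JSON body of the form `{"instances": [...]}`. It assumes the REST API is listening on its default port 8501; the helper function names are illustrative.

```python
import json
import urllib.request

# Assumption: TensorFlow Serving's REST API is on its default port, 8501.
TF_SERVING_URL = "http://localhost:8501"


def build_predict_request(model_name: str, instances: list,
                          base_url: str = TF_SERVING_URL) -> urllib.request.Request:
    """Build a POST to /v1/models/{model_name}:predict in the row ("instances") format."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/models/{model_name}:predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(model_name: str, instances: list,
            base_url: str = TF_SERVING_URL) -> list:
    """Send the request and return the "predictions" field of the JSON response."""
    request = build_predict_request(model_name, instances, base_url)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["predictions"]
```

Against the `half_plus_two` demo model used in the TensorFlow Serving documentation, `predict("half_plus_two", [1.0, 2.0, 5.0])` should return `[2.5, 3.0, 4.5]`.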

Deploying Machine Learning models with TensorFlow Serving — an introduction

Step-by-step tutorial from initial environment setup to serving and managing multiple model versions with TensorFlow Servin...

📚 Read more at Towards Data Science
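
When several versions of a model are loaded, TensorFlow Serving's REST API reports their status through `GET /v1/models/{model_name}` and can route a prediction to one version through `/v1/models/{model_name}/versions/{version}:predict`. The minimal sketch below assumes the default REST port 8501 and uses illustrative helper names; it lists the versions currently in the `AVAILABLE` state and builds a version-pinned predict URL.

```python
import json
import urllib.request

# Assumption: TensorFlow Serving's REST API is on its default port, 8501.
TF_SERVING_URL = "http://localhost:8501"


def status_url(model_name: str, base_url: str = TF_SERVING_URL) -> str:
    """Model status endpoint: reports every version the server knows about."""
    return f"{base_url}/v1/models/{model_name}"


def available_versions(status: dict) -> list:
    """Return the versions whose state is AVAILABLE, sorted ascending.

    Versions may arrive as JSON strings (e.g. "2"), so they are cast to int.
    """
    return sorted(
        int(entry["version"])
        for entry in status.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    )


def versioned_predict_url(model_name: str, version: int,
                          base_url: str = TF_SERVING_URL) -> str:
    """Predict endpoint pinned to one specific model version."""
    return f"{base_url}/v1/models/{model_name}/versions/{version}:predict"


def fetch_status(model_name: str, base_url: str = TF_SERVING_URL) -> dict:
    """GET the model status JSON from a running TensorFlow Serving instance."""
    with urllib.request.urlopen(status_url(model_name, base_url)) as response:
        return json.loads(response.read())
```

A typical flow is to call `fetch_status("my_model")` (hypothetical model name), pick a version from `available_versions(...)`, and send requests to `versioned_predict_url("my_model", version)` so that clients are not silently moved to a newer version.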

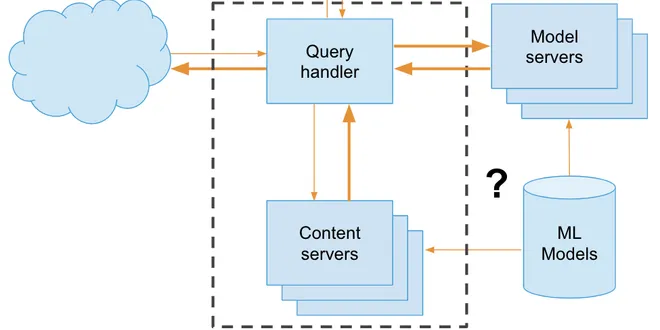

Model serving architectures on Databricks

Many different components are required to bring machine learning models to production. I believe that machine learning teams should aim to simplify the architecture and minimize the number of tools th...

📚 Read more at Marvelous MLOps Substack

How To Effectively Manage Deployed Models

Most models never make it to production. We previously looked at deploying TensorFlow models using TensorFlow Serving. Once that process is completed, we may think that our work is all done. In…

📚 Read more at Towards Data Science