Momentum

Momentum is a widely used optimization technique in machine learning, particularly for training deep neural networks. It extends gradient descent with a velocity term, a decaying accumulation of past gradients, that speeds up progress along directions of low curvature and damps oscillation along directions of high curvature. This helps the optimizer move through narrow valleys and past shallow local minima, and in practice it often shortens training time. Understanding momentum and how to tune it is essential for practitioners who want to optimize their models efficiently.
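To make the velocity idea concrete, here is a minimal sketch of one common form of the momentum update, `v = beta * v - lr * grad` followed by `w = w + v`. The test function (an elongated quadratic bowl), the helper names `grad`, `loss` and `minimize`, and the values `lr=0.02` and `beta=0.9` are illustrative assumptions, not taken from any of the articles listed here. Libraries may arrange the learning rate and velocity slightly differently.

```python
def grad(w):
    # Gradient of the assumed test function f(x, y) = 0.5 * (x**2 + 50 * y**2),
    # a bowl that is 50 times steeper along y than along x.
    x, y = w
    return (x, 50.0 * y)


def loss(w):
    # Value of the same test function.
    x, y = w
    return 0.5 * (x ** 2 + 50.0 * y ** 2)


def minimize(lr, beta, steps=200, start=(10.0, 1.0)):
    # beta = 0.0 reduces the loop to plain gradient descent.
    w = list(start)
    v = [0.0, 0.0]  # velocity: decaying sum of past (scaled) gradients
    for _ in range(steps):
        g = grad(w)
        for i in range(2):
            v[i] = beta * v[i] - lr * g[i]  # accumulate the new gradient
            w[i] += v[i]                    # step along the velocity
    return w


plain = minimize(lr=0.02, beta=0.0)
with_momentum = minimize(lr=0.02, beta=0.9)
print("gradient descent loss:", loss(plain))
print("momentum loss:        ", loss(with_momentum))
```

With the same learning rate and step budget, the momentum run ends much closer to the minimum on this particular function, because the flat `x` direction is where plain gradient descent crawls. On other problems the gap can be smaller, and a `beta` that is too large can cause overshooting.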

Why Momentum Really Works

Here’s a popular story about momentum [1, 2, 3]: gradient descent is a man walking down a hill. He follows the steepest path downwards; his progress is slow, but steady. Momentum is a heavy ball rol...

📚 Read more at Distill

Why 0.9? Towards Better Momentum Strategies in Deep Learning.

Momentum is a widely-used strategy for accelerating the convergence of gradient-based optimization techniques. Momentum was designed to speed up learning in directions of low curvature, without…

📚 Read more at Towards Data Science

An Intuitive and Visual Demonstration of Momentum in Machine Learning

Speed up machine learning model training with little effort.

📚 Read more at Daily Dose of Data Science

Momentum: A simple, yet efficient optimizing technique

What are gradient descent and moving averages, and how can they be applied to optimize neural networks? How is momentum better than gradient descent?

📚 Read more at Analytics Vidhya

Why to Optimize with Momentum

The momentum optimiser and its advantages over gradient descent

📚 Read more at Analytics Vidhya

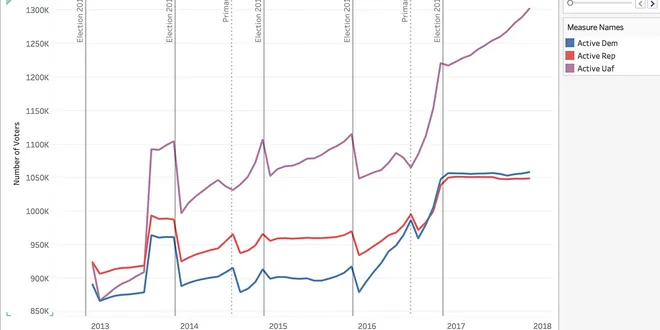

Quantifying Political Momentum with Data

Political commentators get paid to talk about the current political landscape every day, and while watching these talking heads do their thing on TV, I always hear the words “Political Momentum” over…

📚 Read more at Towards Data Science

From Standstill to Momentum: MLP as Your First Gear in tidymodels

Embarking on a machine learning journey often feels like being handed the keys to a high-end sports car. The possibilities seem endless, the power under the hood palpable, and the anticipation of spee...

📚 Read more at R-bloggers

Algorithmic Momentum Trading Strategy

Infusing Big Data + Machine Learning & Technical Indicators for a Robust Algorithmic Momentum Trading Strategy. Big data is completely revolutionizing how the stock markets across the world are…

📚 Read more at Analytics Vidhya

The Generative Audio Momentum

Next Week in The Sequence: Edge 303: Our series about new methods in generative AI continues with an exploration of different retrieval-augmented foundation model techniques. We discuss Meta AI’s famo...

📚 Read more at TheSequence

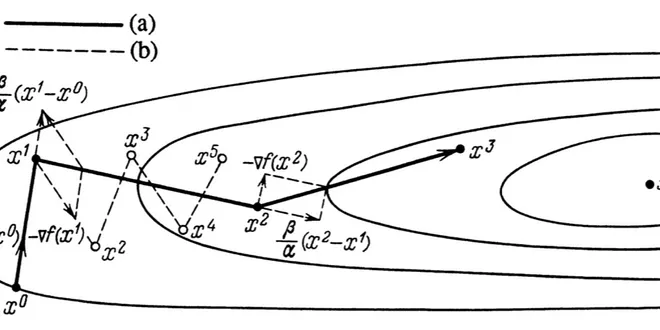

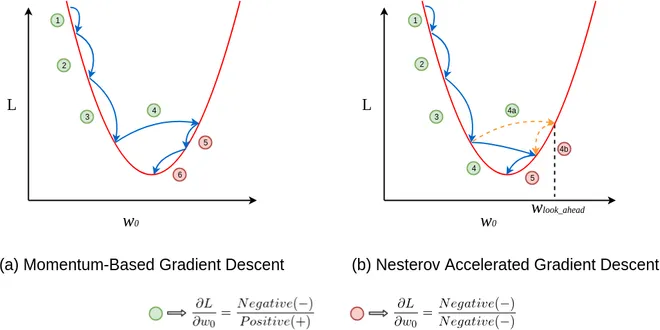

Learning Parameters, Part 2: Momentum-Based And Nesterov Accelerated Gradient Descent

In this post, we look at how the gentle-surface limitation of Gradient Descent can be overcome using the concept of momentum to some extent. Make sure you check out my blog post — Learning…

📚 Read more at Towards Data Science

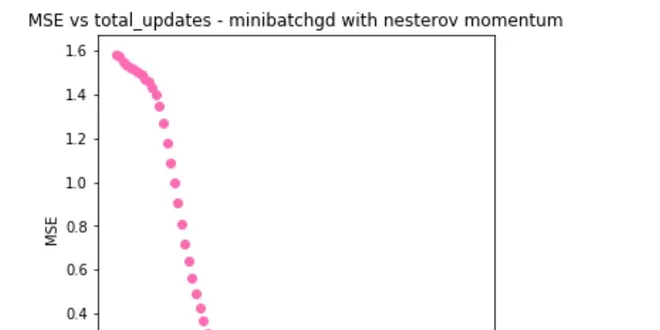

Optimizers — Momentum and Nesterov momentum algorithms (Part 2)

Welcome to the second part on optimisers, where we will be discussing momentum and Nesterov accelerated gradient. If you want a quick review of the vanilla gradient descent algorithm and its variants…

📚 Read more at Analytics Vidhya

Gradient Descent With Nesterov Momentum From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery