Multitask Learning

A Primer on Multi-task Learning — Part 1

Multi-task Learning (MTL) is a collection of techniques intended to learn multiple tasks simultaneously instead of learning them separately. The motivation behind MTL is to create a “Generalist”…

📚 Read more at Analytics Vidhya

Optimizing Multi-task Learning Models in Practice

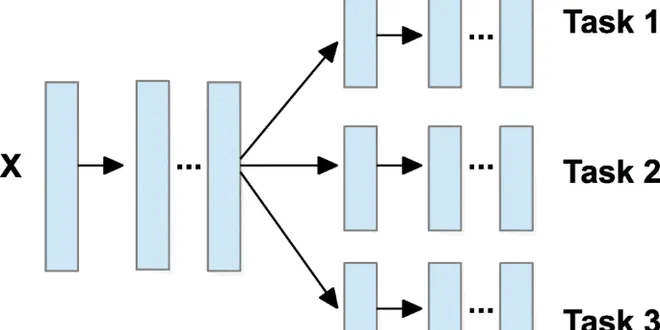

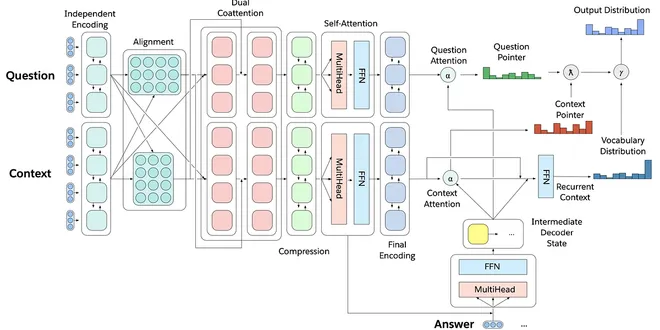

Why Multi-task learning? Multi-task learning (MTL) [1] is a field in machine learning in which we utilize a single model to learn multiple tasks simultaneously. Multi-task learning ...

📚 Read more at Towards Data Science

Multi-task Learning: All You Need to Know (Part-1)

Figure: Framework of Multi-task learning.

Multi-task learning is becoming incredibly popular. This article provides an overview of the current state of multi-task learning. It discusses the extensive m...

📚 Read more at Python in Plain English

Multi-task learning with Multi-gate Mixture-of-experts

Multi-task learning is a machine learning method in which a model learns to solve multiple tasks simultaneously. The assumption is that by learning to complete multiple correlated tasks with the same…

📚 Read more at Towards Data Science

A Primer on Multi-task Learning — Part 2

Towards building a “Generalist” model. “A Primer on Multi-task Learning — Part 2” is published by Neeraj Varshney in Analytics Vidhya.

📚 Read more at Analytics Vidhya

Multitask learning in TensorFlow with the Head API

A fundamental characteristic of human learning is that we learn many things simultaneously. The equivalent idea in machine learning is called multi-task learning (MTL), and it has become increasingly…

📚 Read more at Towards Data Science

A Primer on Multi-task Learning — Part 3

Towards building a “Generalist” model. “A Primer on Multi-task Learning — Part 3” is published by Neeraj Varshney in Analytics Vidhya.

📚 Read more at Analytics Vidhya

Multi-Task Learning with torch in R

Multi-task learning (MTL) is an approach where a single neural network model is trained to perform multiple related tasks simultaneously. This methodology can improve model generalization, reduce over...

📚 Read more at R-bloggers

Multi-Task Machine Learning: Solving Multiple Problems Simultaneously

Single-task learning is the process of learning to predict a single outcome (binary, multi-class, or continuous) from a labeled data set. By contrast, multi-task learning is the process of jointly…

📚 Read more at Towards Data Science

Deep Multi-Task Learning — 3 Lessons Learned

For the past year, my team and I have been working on a personalized user experience in the Taboola feed. We used Multi-Task Learning (MTL) to predict multiple Key Performance Indicators (KPIs) on…

📚 Read more at Towards Data Science