Nadam

NAdam

Implements NAdam algorithm. For further details regarding the algorithm we refer to Incorporating Nesterov Momentum into Adam. params (iterable) – iterable of parameters to optimize or dicts defini...

📚 Read more at PyTorch documentation

Gradient Descent Optimization With Nadam From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery
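
The snippet above describes gradient descent as following the negative gradient of an objective function toward its minimum. A minimal sketch of that idea is shown here. It is plain gradient descent, not Nadam, and the function names, the quadratic objective, the starting point, and the step size are all illustrative assumptions rather than anything taken from the linked article.

```python
def gradient_descent(grad, x0, step_size=0.1, n_iter=100):
    """Repeatedly step against the gradient, starting from x0.

    grad, x0, step_size, and n_iter are hypothetical names chosen
    for this sketch.
    """
    x = x0
    for _ in range(n_iter):
        # Move in the direction of the negative gradient.
        x = x - step_size * grad(x)
    return x


def objective(x):
    # Assumed toy objective: f(x) = x^2, with its minimum at x = 0.
    return x ** 2


def objective_grad(x):
    # Derivative of the toy objective: f'(x) = 2x.
    return 2.0 * x


# Start away from the minimum and let the iterates approach it.
x_min = gradient_descent(objective_grad, x0=3.0)
print(x_min, objective(x_min))
```

With this step size each iteration shrinks the distance to the minimum by a constant factor, so the iterate ends up very close to zero.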

RAdam

Implements RAdam algorithm. For further details regarding the algorithm we refer to On the variance of the adaptive learning rate and beyond. params (iterable) – iterable of parameters to optimize ...

📚 Read more at PyTorch documentation

Nomad

Nomad is different from the previous two solutions, as it is not focused solely on containers. It is a general-purpose orchestrator with support for Docker, Podman, Qemu Virtual Machines, isolated for...

📚 Read more at Software Architecture with C++

Adam

Implements Adam algorithm. For further details regarding the algorithm we refer to Adam: A Method for Stochastic Optimization. params (iterable) – iterable of parameters to optimize or dicts defini...

📚 Read more at PyTorch documentation

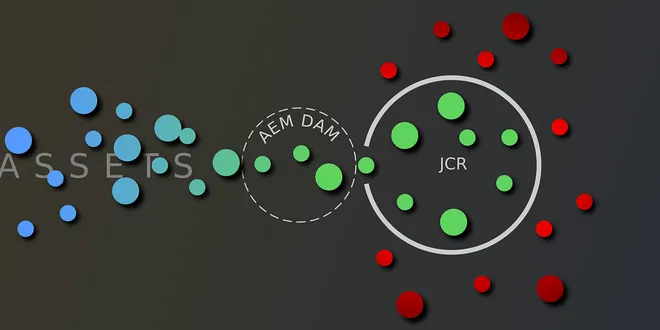

Adobe Experience Manager: Forcing the use of the DAM for images by closing loopholes

The DAM (Digital Asset Manager) is AEM’s asset management system and should be the single point of entry for all assets into the AEM platform and the one-stop-shop for using those assets in content…

📚 Read more at Level Up Coding

Reading REDATAM databases in R

REDATAM (Retrieval of Data for Small Areas by Microcomputer) is a data storage and retrieval system created by ECLAC and it is widely used by national statistics offices to store and manipulat...

📚 Read more at R-bloggers

Adamax

Implements Adamax algorithm (a variant of Adam based on infinity norm). For further details regarding the algorithm we refer to Adam: A Method for Stochastic Optimization. params (iterable) – itera...

📚 Read more at PyTorch documentation

Introduction to BanditPAM

BanditPAM, with a less evocative name than its famous brother KMeans, is a clustering algorithm. It belongs to the KMedoids family of algorithms and was presented at the NeurIPS conference in 2020…

📚 Read more at Towards Data Science

‘NaN’ You May Not Know

When we use Reflect.getOwnPropertyDescriptor to get the property descriptor of NaN, it tells us that NaN is not deletable, not writable, and not enumerable. So when we try to delete it using…

📚 Read more at Level Up Coding