Parameter Norm Penalties

Prevent Parameter Pollution in Node.JS

HTTP Parameter Pollution, or HPP for short, is a vulnerability that occurs when multiple parameters with the same name are passed in a request.
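
The linked article targets Node.js. To keep one language across these notes, here is a Python analogue of the underlying problem. It is a minimal sketch, not the article's fix. The standard library's `urllib.parse.parse_qs` groups repeated names into a list, and that ambiguity is what HPP exploits. The helper `collapse_duplicates` and its keep-the-last-value policy are hypothetical illustrations of one deterministic normalization.

```python
from urllib.parse import parse_qs


def collapse_duplicates(query: str) -> dict[str, str]:
    """Hypothetical helper: keep exactly one value (the last) per parameter name."""
    # parse_qs returns lists, e.g. "page=1&page=99" -> {"page": ["1", "99"]}.
    parsed = parse_qs(query, keep_blank_values=True)
    # Downstream code now always receives a single string, never a list.
    return {name: values[-1] for name, values in parsed.items()}


raw = "q=shoes&page=1&page=99"
print(parse_qs(raw))             # {'q': ['shoes'], 'page': ['1', '99']}
print(collapse_duplicates(raw))  # {'q': 'shoes', 'page': '99'}
```

Whether "first" or "last" should win is a policy choice; what matters is that every layer of the application applies the same rule.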

📚 Read more at Level Up Coding

Parameter Constraints & Significance

Fixing the values of one or more parameters of a GARCH model, or constraining the range of permissible values, can be useful.
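
The linked post is on R-bloggers. Here is a minimal Python sketch of both ideas, assuming the standard GARCH(1,1) variance recursion and its usual constraints (omega > 0, alpha >= 0, beta >= 0, alpha + beta < 1). The function names are hypothetical and are not taken from the post. `merge_params` shows one way to hold a parameter fixed while the others remain free for estimation.

```python
def check_garch11(omega: float, alpha: float, beta: float) -> None:
    """Raise ValueError if the usual GARCH(1,1) constraints are violated."""
    if omega <= 0:
        raise ValueError("omega must be positive")
    if alpha < 0 or beta < 0:
        raise ValueError("alpha and beta must be non-negative")
    if alpha + beta >= 1:
        raise ValueError("alpha + beta must be below 1 for covariance stationarity")


def merge_params(free: dict, fixed: dict) -> dict:
    """Combine estimated ('free') and user-fixed parameters into one set."""
    overlap = free.keys() & fixed.keys()
    if overlap:
        raise ValueError(f"parameters both free and fixed: {sorted(overlap)}")
    return {**free, **fixed}


def garch11_variance(returns: list[float], omega: float, alpha: float, beta: float) -> list[float]:
    """Conditional variances: sigma2[t] = omega + alpha * r[t-1]**2 + beta * sigma2[t-1]."""
    check_garch11(omega, alpha, beta)
    if not returns:
        return []
    # Start the recursion at the unconditional variance omega / (1 - alpha - beta).
    sigma2 = [omega / (1 - alpha - beta)]
    for r in returns[:-1]:
        sigma2.append(omega + alpha * r * r + beta * sigma2[-1])
    return sigma2


# Hold alpha fixed at 0.1; omega and beta would be the free, estimated parameters.
params = merge_params({"omega": 0.1, "beta": 0.8}, {"alpha": 0.1})
print(garch11_variance([1.0, 0.0, 2.0], **params))  # approximately [1.0, 1.0, 0.9]
```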

📚 Read more at R-bloggers

SGD: Penalties

Contours of where the penalty is equal to 1 for the three penalties L1, L2, and elastic-net. All three are supported by SGDClassifier and SGDRegressor.
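
The three penalties can be written out directly. This sketch follows the formulas in scikit-learn's SGD user guide as we recall them: L2 carries a factor of 1/2, and elastic-net is a convex combination weighted by `l1_ratio`. Treat that exact scaling as an assumption and check it against the guide. The function names are our own.

```python
def l1_penalty(w: list[float]) -> float:
    """L1 norm: sum of absolute weights."""
    return sum(abs(x) for x in w)


def l2_penalty(w: list[float]) -> float:
    """Half the squared L2 norm (the 1/2 factor is the assumed convention)."""
    return 0.5 * sum(x * x for x in w)


def elastic_net_penalty(w: list[float], l1_ratio: float = 0.15) -> float:
    """l1_ratio * L1 + (1 - l1_ratio) * L2; 0.15 mirrors SGDClassifier's default l1_ratio."""
    return l1_ratio * l1_penalty(w) + (1 - l1_ratio) * l2_penalty(w)


w = [3.0, -4.0]
print(l1_penalty(w), l2_penalty(w))       # 7.0 12.5
print(elastic_net_penalty(w, 0.5))        # 9.75
# Points on the "penalty == 1" contours:
print(l1_penalty([0.5, -0.5]))            # 1.0
print(l2_penalty([2 ** 0.5, 0.0]))        # approximately 1.0
```

In scikit-learn itself, the penalty is selected with, for example, `SGDClassifier(penalty="elasticnet", l1_ratio=0.15)`, and its overall strength is set by `alpha`.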

📚 Read more at Scikit-learn Examples

Parameters

Section 4.3, Parameters. If a subroutine is a black box, then a parameter is something that provides a mechanism for passing information from the outside world into the box. Parameters are part of the...

📚 Read more at Introduction to Programming Using Java

Parameter

A kind of Tensor that is to be considered a module parameter. Parameters are Tensor subclasses that have a very special property when used with Modules: when they're assigned as Module attributes t...
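
The "special property" is easiest to see side by side. In this sketch, a tensor wrapped in `nn.Parameter` is registered as soon as it is assigned to a module attribute, while a plain tensor attribute is not. The `Scaler` module is a hypothetical example.

```python
import torch
from torch import nn


class Scaler(nn.Module):
    """Hypothetical module: y = x * scale + offset."""

    def __init__(self, n: int) -> None:
        super().__init__()
        # Wrapped in nn.Parameter: registered automatically on assignment.
        self.scale = nn.Parameter(torch.ones(n))
        # Plain tensor attribute: NOT registered as a parameter.
        self.offset = torch.zeros(n)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * self.scale + self.offset


m = Scaler(3)
print([name for name, _ in m.named_parameters()])  # ['scale']
print(m.scale.requires_grad)                       # True
```

Only `scale` is seen by optimizers built from `m.parameters()`. For non-trainable state that should still follow the module (for example, in `state_dict()` and `.to(device)`), `register_buffer` is the usual alternative to a bare tensor attribute.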

📚 Read more at PyTorch documentation

UninitializedParameter

A parameter that is not initialized. Uninitialized parameters are a special case of torch.nn.Parameter where the shape of the data is still unknown. Unlike a torch.nn.Parameter, uninitialized paramet...
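
Uninitialized parameters are most commonly met through lazy modules. This sketch uses `nn.LazyLinear`, whose `in_features` is not given up front. Its weight stays an `UninitializedParameter` until the first forward pass reveals the input size.

```python
import torch
from torch import nn
from torch.nn.parameter import UninitializedParameter

# Only out_features is specified; in_features is inferred on first use.
layer = nn.LazyLinear(4)
print(isinstance(layer.weight, UninitializedParameter))  # True: shape still unknown

# The first forward pass materializes the weight from the input's last dimension.
out = layer(torch.randn(2, 5))
print(isinstance(layer.weight, UninitializedParameter))  # False
print(tuple(layer.weight.shape))                         # (4, 5)
print(tuple(out.shape))                                  # (2, 4)
```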

📚 Read more at PyTorch documentation

Parameter Servers

As we move from a single GPU to multiple GPUs and then to multiple servers containing multiple GPUs, possibly all spread out across multiple racks and network switches, our algorithms for distributed ...
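
The push/pull pattern behind a parameter server can be shown in a deliberately simplified, single-process sketch. In this setup, workers pull the current parameters, compute gradients on their own data, and push them back, and the server aggregates the gradients and applies one update. Real parameter servers shard parameters across machines and communicate over the network. The class, the worker function, and the choice to average (rather than sum) pushed gradients are all our assumptions.

```python
class ParameterServer:
    """Hypothetical toy server: workers push gradients, then pull parameters."""

    def __init__(self, params: list[float], lr: float) -> None:
        self.params = list(params)
        self.lr = lr
        self._pending: list[list[float]] = []

    def push(self, grad: list[float]) -> None:
        self._pending.append(list(grad))

    def step(self) -> None:
        # Average all pushed gradients, then apply one SGD update.
        if not self._pending:
            return
        n = len(self._pending)
        for i in range(len(self.params)):
            self.params[i] -= self.lr * sum(g[i] for g in self._pending) / n
        self._pending.clear()

    def pull(self) -> list[float]:
        return list(self.params)


def worker_gradient(params: list[float], target: list[float]) -> list[float]:
    # Hypothetical worker: gradient of sum_i (w_i - target_i)**2 on its data shard.
    return [2 * (w - t) for w, t in zip(params, target)]


ps = ParameterServer([0.0, 0.0], lr=0.1)
for _ in range(100):
    w = ps.pull()
    ps.push(worker_gradient(w, [1.0, 2.0]))  # worker 1
    ps.push(worker_gradient(w, [3.0, 0.0]))  # worker 2
    ps.step()
print(ps.pull())  # converges toward the average target [2.0, 1.0]
```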

📚 Read more at Dive into Deep Learning Book

ParametrizationList

A sequential container that holds and manages the original or original0, original1, … parameters or buffers of a parametrized torch.nn.Module. It is the type of module.parametrizations[tensor_name]...
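
A `ParametrizationList` appears as soon as a parametrization is registered. This sketch uses `torch.nn.utils.parametrize.register_parametrization` with a `Symmetric` module, a common illustration that we define here ourselves. The unconstrained tensor is kept as `original`, and `layer.weight` is recomputed through the parametrization on each access.

```python
import torch
from torch import nn
from torch.nn.utils import parametrize


class Symmetric(nn.Module):
    # Builds a symmetric matrix from the upper triangle of X.
    def forward(self, X: torch.Tensor) -> torch.Tensor:
        return X.triu() + X.triu(1).transpose(-1, -2)


layer = nn.Linear(3, 3)
parametrize.register_parametrization(layer, "weight", Symmetric())

plist = layer.parametrizations["weight"]
print(type(plist).__name__)         # ParametrizationList
print(tuple(plist.original.shape))  # (3, 3): the stored, unconstrained tensor
W = layer.weight                    # computed as Symmetric()(original)
print(torch.allclose(W, W.T))       # True
```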

📚 Read more at PyTorch documentation

Parameter Management

Once we have chosen an architecture and set our hyperparameters, we proceed to the training loop, where our goal is to find parameter values that minimize our loss function. After training, we will ne...
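
Typical parameter-management tasks in PyTorch are accessing parameters by name, counting them, re-initializing a layer, and copying trained values into another model. This is a small sketch of each, using an arbitrary two-layer network of our choosing.

```python
import torch
from torch import nn

net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

# Access: names come from the Sequential index plus the attribute name.
for name, p in net.named_parameters():
    print(name, tuple(p.shape))
# 0.weight (8, 4)
# 0.bias (8,)
# 2.weight (1, 8)
# 2.bias (1,)

# Count: (4*8 + 8) + (8*1 + 1) = 49 parameters.
n_params = sum(p.numel() for p in net.parameters())
print(n_params)  # 49

# Re-initialize one tensor in place (init functions run under no_grad).
nn.init.zeros_(net[2].bias)

# Copy all parameters into a second network with the same architecture.
clone = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
clone.load_state_dict(net.state_dict())
```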

📚 Read more at Dive into Deep Learning Book

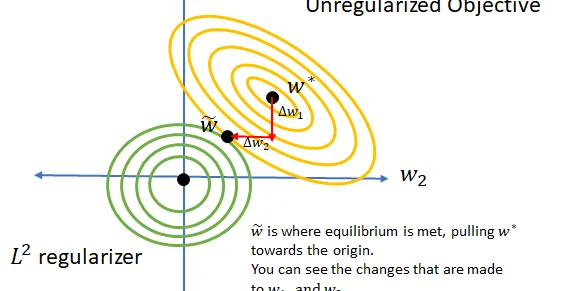

Norms, Penalties, and Multitask learning

A regularizer is commonly used in machine learning to keep a model's capacity within certain bounds, based either on a statistical norm or on prior hypotheses. This adds a preference for one solution…
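
This tiny worked example is our own and is not taken from the article. It shows how a norm penalty "adds a preference for one solution". With one data point x = (1, 1) and target 2, every weight vector with w1 + w2 = 2 fits exactly, and the normal equations are singular. Adding an L2 penalty λ‖w‖² makes the system solvable and selects the balanced, smallest-norm fit w1 = w2 = 2 / (2 + λ).

```python
def ridge_2d(X: list[list[float]], y: list[float], lam: float) -> tuple[float, float]:
    """Solve (X^T X + lam * I) w = X^T y for a linear model with two features."""
    # Entries of the symmetric 2x2 matrix [[a, b], [b, d]] = X^T X + lam * I.
    a = sum(r[0] * r[0] for r in X) + lam
    b = sum(r[0] * r[1] for r in X)
    d = sum(r[1] * r[1] for r in X) + lam
    # Right-hand side X^T y.
    u = sum(r[0] * t for r, t in zip(X, y))
    v = sum(r[1] * t for r, t in zip(X, y))
    det = a * d - b * b  # 0 here when lam == 0, so division would fail
    return ((d * u - b * v) / det, (a * v - b * u) / det)


X = [[1.0, 1.0]]  # one observation, two features
y = [2.0]         # any w with w1 + w2 = 2 fits it exactly
for lam in (1.0, 0.1, 0.01):
    print(lam, ridge_2d(X, y, lam))  # both weights approach 1.0 as lam shrinks
```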

📚 Read more at Towards Data Science

Stealing User Information Via XSS Via Parameter Pollution

How to steal user information by chaining HTTP Parameter Pollution (HPP) with Cross-Site Scripting (XSS) vulnerabilities.

📚 Read more at Level Up Coding

Path Parameters and Numeric Validations

In the same way that you can declare more validations and metadata for query parameters with Query, you can declare the same type of validations and metadata for path...

📚 Read more at FastAPI Documentation