ReLU

ReLU

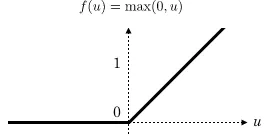

Applies the rectified linear unit function element-wise: $\text{ReLU}(x) = (x)^+ = \max(0, x)$. `inplace` (bool) – can optional...

📚 Read more at PyTorch documentation
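The definition above can be illustrated with a minimal sketch in plain Python. This is not the PyTorch implementation; the helper name `relu` and the use of Python lists are assumptions made for illustration only.

```python
def relu(values):
    """Element-wise rectified linear unit: max(0, x) for each x.

    Hypothetical helper for illustration, not a library function.
    """
    return [max(0.0, x) for x in values]


# Negative inputs are clamped to zero; non-negative inputs pass through.
outputs = relu([-2.0, -0.5, 0.0, 1.5])
print(outputs)
```

Negative entries map to zero, while zero and positive entries are returned unchanged.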

ReLU

Essential AI Math Excel Blueprints

📚 Read more at AI by Hand ✍️

A Gentle Introduction to the Rectified Linear Unit (ReLU)

Last updated on August 20, 2020. In a neural network, the activation function is responsible for transforming the summed weighted input from the node into the activation of the node or output for that ...

📚 Read more at Machine Learning Mastery
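As a rough sketch of the idea in the snippet above, the following plain-Python function computes a node's summed weighted input and passes it through ReLU. The function name `neuron_activation`, the bias term, and the sample numbers are assumptions chosen for illustration; they do not come from the linked article.

```python
def neuron_activation(inputs, weights, bias):
    """Hypothetical single node: weighted sum followed by ReLU."""
    # Summed weighted input of the node (bias term is an assumption).
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation function turns the sum into the node's activation.
    return max(0.0, z)


# A negative sum yields no activation; a positive sum passes through.
inactive = neuron_activation([1.0, 2.0], [0.5, -1.0], 0.25)
active = neuron_activation([1.0, 2.0], [0.5, 1.0], 0.25)
print(inactive, active)
```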

How ReLU works?

Since the 2012 publication of the AlexNet paper by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, the true potential of neural networks has begun to unfold. A major part of it is the ReLU…

📚 Read more at Analytics Vidhya

Is GELU the ReLU Successor?

Can we combine regularization and activation functions? In 2016 a paper from authors Dan Hendrycks and Kevin Gimpel came out. Since then, t...

📚 Read more at Towards AI

ReLU Activation : Increase accuracy by being Greedy!

This article will help you decide where exactly to use ReLU (Rectified Linear Unit) and how it plays a role in increasing the accuracy of your model. Use this GitHub link to view the source code. The…

📚 Read more at Analytics Vidhya

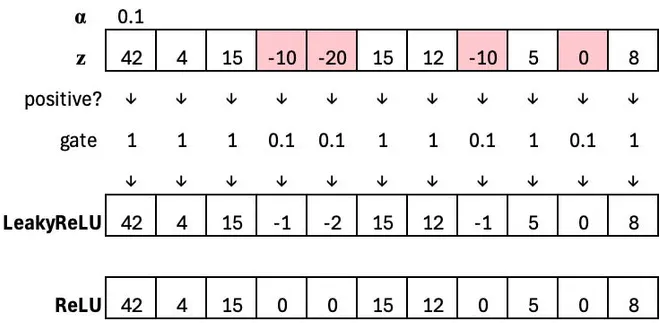

Leaky ReLU

Essential AI Math Excel Blueprints

📚 Read more at AI by Hand ✍️

ReLU Rules: Let’s Understand Why Its Popularity Remains Unshaken

For anybody who is just knocking on the door of Deep Learning or is a seasoned practitioner of it, ReLU is as commonplace as air. Air is exceptionally necessary for our survival, but are ReLUs that…

📚 Read more at Towards Data Science

Neural Networks: an Alternative to ReLU

Above is a graph of activation (pink) for two neurons (purple and orange) using a well-trod activation function: the Rectified Linear Unit, or ReLU. When each neuron’s summed inputs increase, the…

📚 Read more at Towards Data Science

RReLU

Applies the randomized leaky rectified linear unit function, element-wise, as described in the paper: Empirical Evaluation of Rectified Activations in Convolutional Network. The function is defined as...

📚 Read more at PyTorch documentation
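A minimal plain-Python sketch of the randomized leaky ReLU idea follows. It is not the PyTorch implementation: the helper name `rrelu`, its parameter names, and the default bounds of 1/8 and 1/3 are assumptions for illustration. Here the negative slope is drawn uniformly from `[lower, upper]` in training mode and fixed at the midpoint otherwise.

```python
import random


def rrelu(values, lower=1 / 8, upper=1 / 3, training=False, rng=random):
    """Hypothetical randomized leaky ReLU on a list of floats."""
    out = []
    for x in values:
        if x >= 0:
            # Non-negative inputs pass through unchanged.
            out.append(x)
        else:
            # Negative inputs are scaled by a slope: random while
            # training, the midpoint of the range otherwise.
            slope = rng.uniform(lower, upper) if training else (lower + upper) / 2
            out.append(slope * x)
    return out


print(rrelu([-1.0, 2.0]))                 # deterministic, midpoint slope
print(rrelu([-1.0, 2.0], training=True))  # slope sampled per element
```

Sampling a fresh slope per negative element is one reading of "randomized"; the paper and the library documentation remain the reference for the exact behavior.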

Convolution and ReLU

In the last lesson, we saw that a convolutional classifier has two parts: a convolutional **base** and a **head** of dense layers. We learned that the ...

📚 Read more at Kaggle Learn Courses