Regularization

Regularization: Data Augmentation, Dropout, Early Stopping, Ensembling, Injecting Noise, L1 Regularization, L2 Regularization. What is overfitting? From Wikipedia, overfitting is "The production of an analysis..."

📚 Read more at Machine Learning Glossary

The game of Regularization

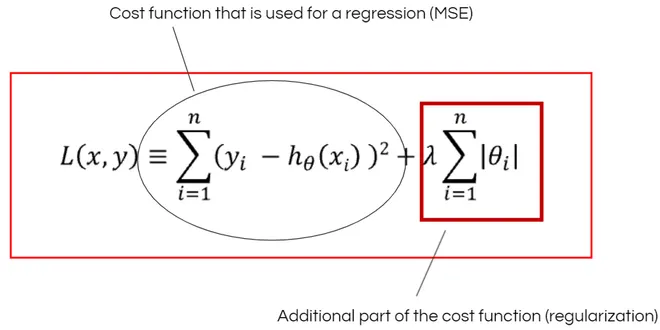

In machine learning, regularization is a method for addressing overfitting by adding a penalty term to the cost function. Let’s first understand: while solving a machine learning problem, we…

📚 Read more at Towards Data Science
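
The "penalty term added to the cost function" idea from the excerpt above can be illustrated with a minimal sketch. It assumes a linear model trained with mean squared error; the function names (`predict`, `mse`, `l1_penalty`, `l2_penalty`, `regularized_cost`) and the strength parameter `lam` are hypothetical names chosen for illustration and do not come from any of the listed articles.

```python
def predict(weights, bias, x):
    """Linear prediction for a single feature vector x."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, xs, ys):
    """Mean squared error of the linear model over a data set."""
    errors = [(predict(weights, bias, x) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


def l1_penalty(weights):
    """L1 penalty: sum of absolute weight values."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """L2 penalty: sum of squared weight values."""
    return sum(w * w for w in weights)


def regularized_cost(weights, bias, xs, ys, lam=0.1, penalty=l2_penalty):
    """Data-fit term plus lam times the penalty on the weights.

    The bias is left unpenalized here, which is a common convention
    but still an assumption of this sketch.
    """
    return mse(weights, bias, xs, ys) + lam * penalty(weights)
```

With `lam = 0` this reduces to the plain cost. Larger values of `lam` make large weights more expensive, which is how the penalty discourages an overly complex fit.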

Regularization. What, Why, When, and How?

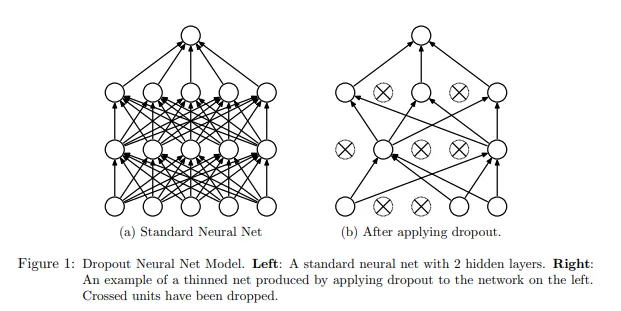

Regularization is a method of constraining the model so that it fits our data accurately without overfitting. It can also be thought of as penalizing unnecessary complexity in our model. There are mainly 3 types of…

📚 Read more at Towards Data Science

Regularization in Machine Learning

Regularization is a technique used to reduce error by fitting a function appropriately to a given training data set, so that the model avoids fitting noise and overfitting.

📚 Read more at Analytics Vidhya

Understanding Regularization in Plain Language: L1 and L2 Regularization

The meaning of the word regularization is “the act of changing a situation or system so that it follows laws or rules”. That’s what it does in the machine learning world as well. Regularization is a…

📚 Read more at Towards Data Science

The Affect of Regularization Techniques

Regularization aims to prevent overfitting in a machine learning model. It increases the model's efficiency and helps the model generalize from the input data. In this part, I create three models…

📚 Read more at Analytics Vidhya

Intuition behind L1-L2 Regularization

Regularization is the process of making the prediction function fit the training data less well in the hope that it generalises to new data better. That is the very generic definition of regularization…

📚 Read more at Analytics Vidhya

Regularization!

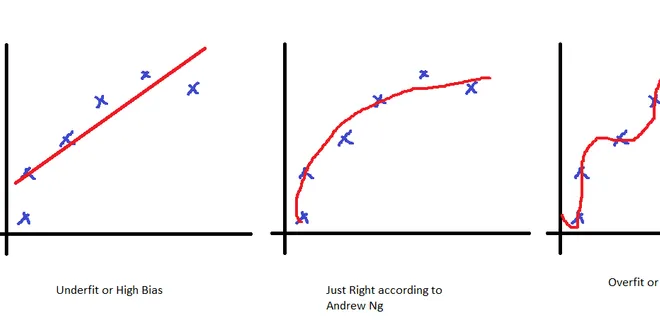

This blog post will help you understand why regularization is important when training machine learning models, and why it is one of the most talked-about topics in the ML domain. So, let's look at this plot…

📚 Read more at Analytics Vidhya

Regularization: Avoiding Overfitting in Machine Learning

Regularization is a technique used in machine learning to help fix a problem we all face in this space: a model performs well on training data but poorly on new, unseen data, a problem known as…

📚 Read more at Towards Data Science

Everything You Need To Know About Regularization

Image by Dall-E 2. Different ways to prevent overfitting in machine learning. If you’re working with machine learning models, you’ve probably heard of regularization. But do you know what it is and how...

📚 Read more at Towards Data Science

Regularization in Gradient Point of View [ Manual Back Propagation in Tensorflow ]

Regularization is just like the cat shown above: when some of the weights want to be ‘big’ in magnitude, we penalize them. And today I wanted to see what kind of changes the regularization term…

📚 Read more at Towards Data Science
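
To make the gradient view of "penalizing big weights" concrete, here is a small sketch of one gradient-descent step under an L2 penalty. It is not taken from the linked article (which uses manual backpropagation in TensorFlow). The helper name `l2_regularized_step`, the penalty form `lam * sum(w**2)`, and the parameters `lam` and `lr` are all assumptions made for illustration.

```python
def l2_regularized_step(weights, data_grads, lam=0.01, lr=0.1):
    """One gradient-descent step on: data_loss + lam * sum(w**2).

    The penalty contributes 2 * lam * w to each weight's gradient,
    so every weight is pulled toward zero in proportion to its size.
    data_grads holds the gradient of the data loss for each weight.
    """
    return [
        w - lr * (g + 2.0 * lam * w)
        for w, g in zip(weights, data_grads)
    ]
```

When the data gradient is zero, each weight is simply scaled by `1 - 2 * lr * lam`, which is why this form of penalty is often described as weight decay.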

Regularization in Machine Learning

This article introduces the regularization technique and its various types used in machine learning. Regularization is performed to generalize a model so that it can output more accurate results on…

📚 Read more at Level Up Coding