Residual Connections

What is Residual Connection?

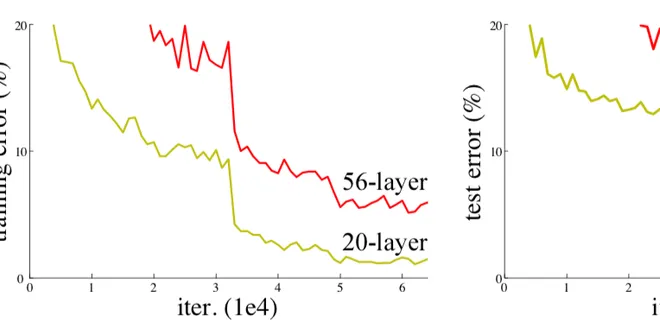

One of the dilemmas of training neural networks is that we usually want deeper neural networks for better accuracy and performance. However, the deeper the network, the harder it is for the training…

📚 Read more at Towards Data Science
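The basic idea can be sketched in a few lines of plain Python. This is a minimal sketch, not code from the linked article. A sub-network `f` is wrapped so that the block returns `f(x) + x`, with the unchanged input added back through the skip connection. The helper names (`relu`, `matvec`, `residual`, `layer`) and the weights `W` are hypothetical, chosen only for illustration.

```python
from typing import Callable, List

Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]


def matvec(w: List[List[float]], v: Vector) -> Vector:
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def residual(f: Callable[[Vector], Vector]) -> Callable[[Vector], Vector]:
    """Wrap a sub-network f so the wrapped block returns f(x) + x."""
    def block(x: Vector) -> Vector:
        fx = f(x)
        # The skip connection: add the unchanged input back to f's output.
        return [a + b for a, b in zip(fx, x)]
    return block


# Hypothetical weights, chosen only for illustration.
W = [[0.5, 0.0], [0.0, -1.0]]


def layer(x: Vector) -> Vector:
    """A single dense layer followed by ReLU (the sub-network f)."""
    return relu(matvec(W, x))


block = residual(layer)

print(layer([2.0, 3.0]))  # [1.0, 0.0]
print(block([2.0, 3.0]))  # [3.0, 3.0]
```

Because the input is added back, the block's output keeps information (here the second component) that the ReLU layer on its own zeroes out.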

The Unreasonable Richness of Residual Plot

In machine learning, the residual is the ‘delta’ between the actual target value and the fitted value. The residual is a crucial concept in regression problems. It is the building block of any regression…

📚 Read more at Towards Data Science
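As a quick worked example of the definition above (a sketch with made-up data, not taken from the article), the following fits a least-squares line and computes each residual as the actual value minus the fitted value. These are the values a residual plot shows, typically against the fitted values or the input. The function names `fit_line` and `residuals` are hypothetical.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residuals(xs: List[float], ys: List[float],
              slope: float, intercept: float) -> List[float]:
    """Residual = actual target value minus fitted value."""
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]


# Toy data, made up for illustration.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 2.0, 5.0]
slope, intercept = fit_line(xs, ys)
res = residuals(xs, ys, slope, intercept)
print([round(r, 6) for r in res])  # [-0.1, 0.8, -1.3, 0.6]
```

For an ordinary least-squares fit that includes an intercept, the residuals sum to zero (up to floating-point error), which makes a handy sanity check.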

Weight Decay is Useless Without Residual Connections

How do residual connections secretly fight overfitting? The idea in broad strokes is fairly simple: we can render weight decay practically us...

📚 Read more at Towards Data Science

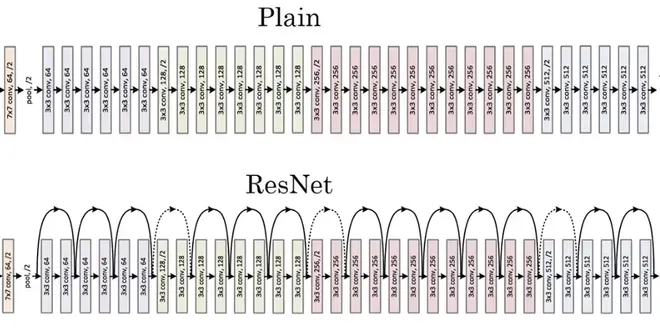

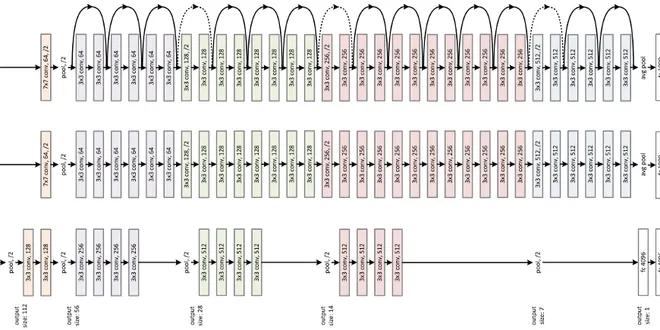

Residual blocks — Building blocks of ResNet

Understanding a residual block is quite easy. In traditional neural networks, each layer feeds into the next layer. In a network with residual blocks, each layer feeds into the next layer and…

📚 Read more at Towards Data Science
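A minimal sketch of a residual block, assuming the two-layer block described in the ResNet paper: the shortcut skips two weight layers, and the second ReLU is applied after the addition. The paper's blocks use convolutions and batch normalization; this sketch swaps in small dense matrices and omits batch normalization, so treat it as a toy model. All names and weights are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]


def matvec(w: Matrix, v: Vector) -> Vector:
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers: each layer only feeds the next one."""
    return relu(matvec(w2, relu(matvec(w1, x))))


def basic_residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """F(x) = W2 . relu(W1 . x); output = relu(F(x) + x)."""
    fx = matvec(w2, relu(matvec(w1, x)))
    # The shortcut skips both weight layers; the second ReLU comes after the addition.
    return relu([a + b for a, b in zip(fx, x)])


# Hypothetical weights for a 2-dimensional toy example.
w1 = [[1.0, 0.0], [0.0, 1.0]]
w2 = [[0.5, 0.0], [0.0, 0.5]]
x = [1.0, 2.0]
print(plain_block(x, w1, w2))           # [0.5, 1.0]
print(basic_residual_block(x, w1, w2))  # [1.5, 3.0]
```

The only difference between the two functions is the addition of `x` before the final ReLU, which is what lets the input reach later layers unchanged.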

Understanding Residual Networks (ResNets) Intuitively

ResNets or Residual networks are the reason we could finally go very, very deep in neural networks. Everybody needs to know why they work, so they can make better decisions and make sense of why…

📚 Read more at Towards Data Science
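One commonly cited intuition is about gradient flow: a residual block computes x + F(x), so its local derivative is 1 + F'(x) rather than F'(x). The toy calculation below uses a hypothetical constant derivative `F_PRIME` for every layer and multiplies local derivatives along a scalar chain, as the chain rule prescribes. It is an illustration only; the ResNet paper itself argues that the degradation it observed is an optimization difficulty unlikely to be caused by vanishing gradients alone, since its plain networks already used batch normalization.

```python
import math
from typing import List

DEPTH = 20
F_PRIME = 0.01  # hypothetical local derivative of each layer's learned function F


def end_to_end_gradient(local_derivatives: List[float]) -> float:
    """Chain rule for a stack of scalar layers: multiply the local derivatives."""
    return math.prod(local_derivatives)


# Plain stack: each layer computes F(x), so each local derivative is F'(x).
plain = end_to_end_gradient([F_PRIME] * DEPTH)
# Residual stack: each block computes x + F(x), so each local derivative is 1 + F'(x).
residual = end_to_end_gradient([1.0 + F_PRIME] * DEPTH)

print(f"plain:    {plain:.3e}")     # 1.000e-40
print(f"residual: {residual:.3f}")  # 1.220
```

Expanding the product of the (1 + F'ᵢ) factors shows why the residual gradient does not collapse: one of the expanded terms is exactly 1, corresponding to the path that passes through every identity shortcut.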

Paper Walkthrough: Residual Network (ResNet)

Implementing Residual Network from scratch using PyTorch. In today’s paper walkthrough, I want to talk about a popular deep learning model: Residual Network. Here ...

📚 Read more at Python in Plain English

Understanding and implementation of Residual Networks (ResNets)

A residual learning framework to ease the training of networks that are substantially deeper than those used previously. This article is primarily based on research paper “Deep Residual Learning for…

📚 Read more at Analytics Vidhya
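For reference, the residual learning formulation from the ResNet paper (He et al.) can be written as follows. Here H(x) is the desired underlying mapping, F is the residual learned by the stacked layers, and W_s is a linear projection used only when the input and output dimensions differ. The last line is the paper's two-layer example, with biases omitted and σ denoting ReLU.

```latex
\begin{align*}
\mathcal{F}(\mathbf{x}) &:= \mathcal{H}(\mathbf{x}) - \mathbf{x}
  && \text{residual learned by the stacked layers} \\
\mathbf{y} &= \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}
  && \text{identity shortcut (matching dimensions)} \\
\mathbf{y} &= \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\,\mathbf{x}
  && \text{projection shortcut (dimensions differ)} \\
\mathcal{F} &= W_2\,\sigma(W_1 \mathbf{x})
  && \text{two-layer example, } \sigma = \mathrm{ReLU}
\end{align*}
```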

Did You Know There Are At Least 5 Kinds Of Skip Connections?

If you’ve ever worked with deep neural networks, you’ve probably wrestled with vanishing gradients, exploding gradients, or just plain sluggish training. Training neural networks is a bit of an art, b...

📚 Read more at Towards AI
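The article's own list is not reproduced here. As a hedged illustration of how skip connections can differ, the sketch below shows three well-known ways of merging a block's input x with its output F(x): element-wise addition (ResNet), concatenation (as in DenseNet and U-Net), and a learned gate (as in Highway networks, y = T·F(x) + (1 − T)·x). The function names and toy values are hypothetical, and the gate is fed fixed logits instead of a learned transform.

```python
import math
from typing import List

Vector = List[float]


def additive_skip(x: Vector, fx: Vector) -> Vector:
    """ResNet-style: element-wise sum, so x and F(x) must have the same shape."""
    return [a + b for a, b in zip(x, fx)]


def concat_skip(x: Vector, fx: Vector) -> Vector:
    """DenseNet/U-Net-style: stack features side by side; the width grows."""
    return x + fx  # list concatenation


def gated_skip(x: Vector, fx: Vector, gate_logits: Vector) -> Vector:
    """Highway-style: y = T * F(x) + (1 - T) * x, with T = sigmoid(gate_logits)."""
    t = [1.0 / (1.0 + math.exp(-g)) for g in gate_logits]
    return [ti * f + (1.0 - ti) * xi for ti, f, xi in zip(t, fx, x)]


x = [1.0, 2.0]
fx = [0.5, -1.0]  # hypothetical sub-network output
print(additive_skip(x, fx))           # [1.5, 1.0]
print(concat_skip(x, fx))             # [1.0, 2.0, 0.5, -1.0]
print(gated_skip(x, fx, [0.0, 0.0]))  # [0.75, 0.5]
```

Addition keeps the feature width fixed, concatenation lets later layers see both inputs separately at the cost of a wider representation, and gating lets the network learn how much of each path to pass through.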

Residual Blocks in Deep Learning

The residual block, first introduced in the ResNet paper, solves the neural network degradation problem. Figure 0: Real Life Analogy of Degradation in Deep Neural Networks as they go deeper (Image by Autho...

📚 Read more at Towards Data Science
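The ResNet paper's degradation argument is that a deeper model could, in principle, copy a shallower model and make every extra layer an identity mapping, so it should not have higher training error. Its hypothesis is that residual blocks make this easier, because pushing F(x) toward zero is enough. The toy sketch below (hypothetical weights, dense layers instead of convolutions, and no post-addition ReLU) shows that a residual block whose last weight matrix is zero is an exact identity, while a plain ReLU layer with identity weights still discards negative values.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]


def matvec(w: Matrix, v: Vector) -> Vector:
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """x + W2 . relu(W1 . x); this sketch omits the post-addition ReLU."""
    return [a + b for a, b in zip(x, matvec(w2, relu(matvec(w1, x))))]


def plain_layer(x: Vector, w: Matrix) -> Vector:
    """A single dense layer followed by ReLU, with no shortcut."""
    return relu(matvec(w, x))


x = [0.3, -1.2]
w1 = [[1.0, 2.0], [-1.0, 0.5]]  # arbitrary hypothetical weights
zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

# Stack three extra residual blocks whose final weights are zero.
deeper = x
for _ in range(3):
    deeper = residual_block(deeper, w1, zeros)

print(deeper)                     # [0.3, -1.2]: the extra blocks are exact identities
print(plain_layer(x, identity))   # [0.3, 0.0]: ReLU discards the negative entry
```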

Unlocking Resnets

Residual Networks (Resnets from here on) were introduced by Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun of Microsoft Research in their seminal paper — Deep Residual Learning for Image…

📚 Read more at Analytics Vidhya

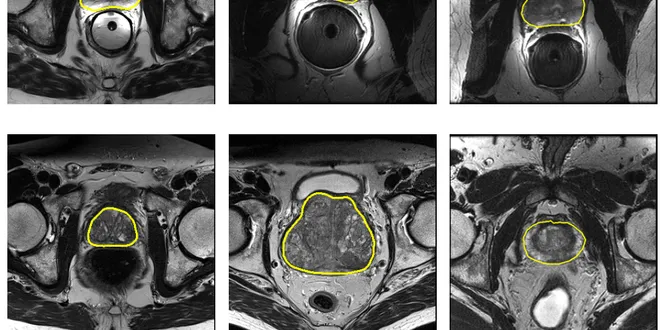

Review: 3D U-Net+ResNet — Volumetric Convolutions + Long & Short Residual Connections (Biomedical…

In this story, a paper “Volumetric ConvNets with Mixed Residual Connections for Automated Prostate Segmentation from 3D MR Images” is reviewed. This is a network using concepts of 3D U-Net+ResNet…

📚 Read more at Towards Data Science

Introduction to Residual Neural Networks

This article isn’t meant to be a technical explanation of residual neural networks. I’m sure many tutorials and books already exist and do a much better job at that. This article is meant as an…

📚 Read more at Analytics Vidhya