Stochastic gradient descent

Stochastic Gradient Descent

In earlier chapters we kept using stochastic gradient descent in our training procedure, however, without explaining why it works. To shed some light on it, we just described the basic principles of g...

📚 Read more at Dive into Deep Learning Book

1.5. Stochastic Gradient Descent

Stochastic Gradient Descent (SGD) is a simple yet very efficient approach to fitting linear classifiers and regressors under convex loss functions such as (linear) Support Vector Machines and Logis...

📚 Read more at Scikit-learn User Guide

Stochastic Gradient Descent — Clearly Explained !!

Stochastic gradient descent is a very popular and common algorithm used in various Machine Learning algorithms and, most importantly, forms the basis of Neural Networks. In this article, I have tried my…

📚 Read more at Towards Data Science

Early stopping of Stochastic Gradient Descent

Early stopping of Stochastic Gradient Descent Stochastic Gradient Descent is an optimization technique which minimizes a loss function in a stochastic fashion, performing a gradient descent step sampl...

📚 Read more at Scikit-learn Examples

Stochastic Gradient Descent: Explanation and Complete Implementation from Scratch

Stochastic gradient descent is a widely used approach in machine learning and deep learning. This article explains stochastic gradient descent using a single perceptron, using the famous iris…

📚 Read more at Towards Data Science

Stochastic Gradient Descent (SGD)

Gradient Descent is a first-order optimization method used to learn the weights of a classifier. However, this implementation of gradient descent will be computationally slow to reach the global minima. If you…

📚 Read more at Analytics Vidhya

Stochastic Gradient Descent & Momentum Explanation

Let’s talk about stochastic gradient descent (SGD), which is probably the second most famous gradient descent method we’ve heard most about. As we know, the traditional gradient descent method…

📚 Read more at Towards Data Science

Understanding Stochastic Gradient Descent in a Different Perspective

The stochastic optimization [1] is a prevalent approach when training a neural network. And based on that, there are methods like SGD with Momentum, Adagrad, and RMSProp, which can give decent…

📚 Read more at Towards Data Science

Stochastic Gradient Descent: Math and Python Code

Deep Dive on Stochastic Gradient Descent. Algorithm, assumptions, benefits, formula, and practical implementation Image by DALL-E-2 Introduction The image above is not just an appealing visual that d...

📚 Read more at Towards Data Science

Stochastic Gradient Descent with momentum

This is part 2 of my series on optimization algorithms used for training neural networks and machine learning models. Part 1 was about Stochastic gradient descent. In this post I presume basic…

📚 Read more at Towards Data Science

Gradient Descent

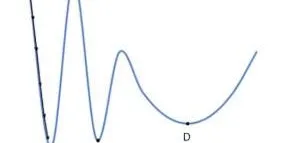

In this section we are going to introduce the basic concepts underlying gradient descent. Although it is rarely used directly in deep learning, an understanding of gradient descent is key to understa...

📚 Read more at Dive into Deep Learning Book

Why Stochastic Gradient Descent Works?

Optimizing a cost function is one of the most important concepts in Machine Learning. Gradient Descent is the most common optimization algorithm and the foundation of how we train an ML model. But it…

📚 Read more at Towards Data Science