adagrad algorithm

Adagrad

Implements Adagrad algorithm. For further details regarding the algorithm we refer to Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. params (iterable) – iterable of p...

📚 Read more at PyTorch documentation

Gradient Descent With AdaGrad From Scratch

Last Updated on October 12, 2021. Gradient descent is an optimization algorithm that follows the negative gradient of an objective function in order to locate the minimum of the function. A limitation ...

📚 Read more at Machine Learning Mastery
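
As a rough illustration of what the entry above covers, here is a minimal from-scratch sketch of AdaGrad in plain Python. It is not taken from the linked article: the function name `adagrad`, the test objective, and the values of `lr`, `eps`, and `steps` are all assumptions chosen for the example. The sketch keeps a running sum of squared gradients per coordinate and divides each step by its square root, so each coordinate gets its own effective step size.

```python
import math


def adagrad(grad, x0, lr=0.5, eps=1e-8, steps=200):
    """Minimise a function with AdaGrad, given a callable returning its gradient.

    All names and default values here are illustrative assumptions.
    """
    x = list(x0)
    accum = [0.0] * len(x)  # per-coordinate running sum of squared gradients
    for _ in range(steps):
        g = grad(x)
        for i, gi in enumerate(g):
            accum[i] += gi * gi
            # Scale the step by the root of the accumulated squared gradients.
            x[i] -= lr * gi / (math.sqrt(accum[i]) + eps)
    return x


# Hypothetical objective: f(x, y) = x^2 + 10 * y^2, with its minimum at (0, 0).
def grad_f(p):
    return [2.0 * p[0], 20.0 * p[1]]


solution = adagrad(grad_f, [1.0, -1.0])
print(solution)
```

Because the accumulated sum only grows, the effective step size shrinks over time. That is the usual trade-off to keep in mind when choosing `lr` for this method.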

Adaptive Boosting: A stepwise Explanation of the Algorithm

Adaptive Boosting (or AdaBoost), a supervised ensemble learning algorithm, was the very first Boosting algorithm used in practice and developed by Freund and Schap...

📚 Read more at Towards Data Science

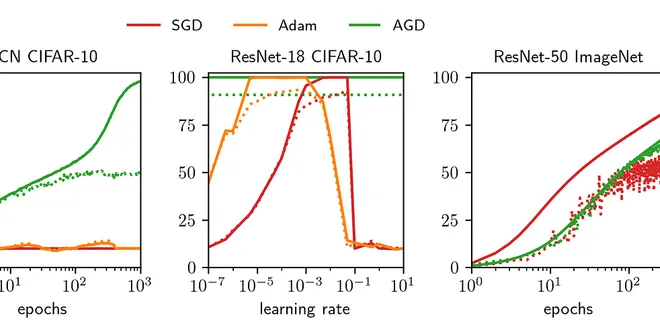

Train ImageNet without Hyperparameters with Automatic Gradient Descent

Towards architecture-aware optimisation. TL;DR: We’ve derived an optimiser called automatic gradient descent (AGD) that can train ImageNet without hyperparameters. This removes the need for expensive a...

📚 Read more at Towards Data Science