decoder transformers

TransformerDecoder

TransformerDecoder is a stack of N decoder layers. Parameters: decoder_layer – an instance of the TransformerDecoderLayer() class (required). num_layers – the number of sub-decoder-layers in the decoder (required)...

📚 Read more at PyTorch documentation
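The TransformerDecoder entry above can be illustrated with a short usage sketch. It assumes PyTorch 1.9 or later (for `batch_first`), and the sizes are hypothetical. It builds one `decoder_layer`, stacks it `num_layers` times, and runs the stack on random target embeddings and a random "memory" tensor standing in for encoder output, using a causal mask so each target position only sees earlier ones.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen small for illustration.
d_model, nhead, num_layers = 64, 4, 2
batch, tgt_len, src_len = 2, 5, 7

# decoder_layer is a template; TransformerDecoder stacks num_layers copies of it.
decoder_layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=nhead, batch_first=True)
decoder = nn.TransformerDecoder(decoder_layer=decoder_layer, num_layers=num_layers)

tgt = torch.randn(batch, tgt_len, d_model)     # target-side embeddings
memory = torch.randn(batch, src_len, d_model)  # stand-in for encoder output

# Causal mask: -inf above the diagonal, so position i only attends to positions <= i.
tgt_mask = torch.triu(torch.full((tgt_len, tgt_len), float("-inf")), diagonal=1)

out = decoder(tgt, memory, tgt_mask=tgt_mask)
print(out.shape)            # torch.Size([2, 5, 64])
print(len(decoder.layers))  # 2
```

The output keeps the target's shape. Each stacked layer is a deep copy of the template, so the layers do not share parameters.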

Methods for Decoding Transformers

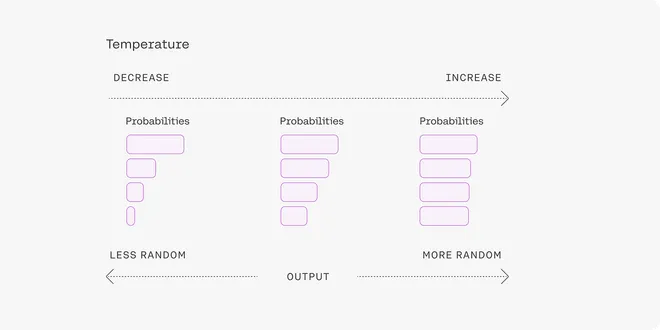

During text generation tasks, the crucial step of decoding bridges the gap between a model’s internal vector representation and the final human-readable text output. The selection of decoding strategi...

📚 Read more at Python in Plain English
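The excerpt is cut off before it names specific strategies. As a hedged illustration, here are two common ones, greedy decoding and top-k sampling with temperature, written in plain Python over a hypothetical list of logits. These are standard techniques, but the article may cover a different set.

```python
import math
import random

def softmax(logits, temperature=1.0):
    # Dividing by a temperature < 1 sharpens the distribution; > 1 flattens it.
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(logits):
    # Greedy decoding: always take the highest-scoring token id.
    return max(range(len(logits)), key=lambda i: logits[i])

def top_k_sample(logits, k, temperature=1.0, rng=random):
    # Top-k sampling: keep the k highest-scoring ids, renormalize, then sample one.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top], temperature)
    return rng.choices(top, weights=probs, k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores over a 4-token vocabulary
print(greedy(logits))  # 0
print(top_k_sample(logits, k=2, rng=random.Random(0)))  # either 0 or 1
```

Greedy decoding is deterministic. Top-k sampling trades that determinism for variety, and with `k=1` it reduces to greedy decoding.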

Methods for Decoding Transformers

During text generation tasks, the crucial step of decoding bridges the gap between a model’s internal vector representation and the final human-readable text output. The selection of decoding strategi...

📚 Read more at Level Up Coding

LLMs and Transformers from Scratch: the Decoder

As always, the code is available on our GitHub. One Big While Loop: After describing the inner workings of the encoder in the transformer architecture in our previous article, we shall see the next segme...

📚 Read more at Towards Data Science
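The "One Big While Loop" heading points to autoregressive generation. The decoder repeatedly takes the tokens produced so far, predicts one more token, and stops at an end-of-sequence token. Here is a minimal sketch of that loop. The model is replaced by a hypothetical toy function, and the token ids `BOS`, `EOS`, and `VOCAB_SIZE` are also hypothetical, so this is not the article's own code.

```python
# Hypothetical special-token ids and vocabulary size, for illustration only.
BOS, EOS, VOCAB_SIZE = 0, 7, 8

def toy_next_token_logits(tokens):
    # Stand-in for a trained decoder (hypothetical): it scores "previous id + 1"
    # highest, and switches to EOS once the sequence holds 5 tokens.
    logits = [0.0] * VOCAB_SIZE
    if len(tokens) >= 5:
        logits[EOS] = 10.0
    else:
        logits[tokens[-1] + 1] = 10.0
    return logits

def generate(next_token_logits, max_len=20):
    tokens = [BOS]
    # The "big while loop": run the model on everything generated so far,
    # append one new token, and stop at EOS or the length limit.
    while len(tokens) < max_len:
        logits = next_token_logits(tokens)
        next_id = max(range(len(logits)), key=lambda i: logits[i])  # greedy pick
        tokens.append(next_id)
        if next_id == EOS:
            break
    return tokens

print(generate(toy_next_token_logits))  # [0, 1, 2, 3, 4, 7]
```

In a real decoder, `next_token_logits` would run the full decoder stack, often with a key/value cache so earlier positions are not recomputed on every iteration.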

TransformerDecoderLayer

TransformerDecoderLayer is made up of self-attn, multi-head-attn and feedforward network. This standard decoder layer is based on the paper “Attention Is All You Need”. Ashish Vaswani, Noam Shazeer, N...

📚 Read more at PyTorch documentation
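The three parts named in the TransformerDecoderLayer description can be inspected directly on a layer instance. The sketch below assumes PyTorch 1.9 or later and uses hypothetical sizes. It also checks the effect of a causal mask: changing only the last target position leaves the outputs at earlier positions unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, nhead = 32, 4  # hypothetical sizes
layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=nhead, dim_feedforward=64, batch_first=True)
layer.eval()  # turn off dropout so the comparison below is deterministic

# Sub-blocks: self_attn (masked self-attention over the target), multihead_attn
# (attention over the encoder memory), and linear1 -> activation -> linear2 (feedforward).
print(type(layer.self_attn).__name__, type(layer.multihead_attn).__name__)

tgt = torch.randn(1, 4, d_model)
memory = torch.randn(1, 6, d_model)
causal = torch.triu(torch.full((4, 4), float("-inf")), diagonal=1)
out = layer(tgt, memory, tgt_mask=causal)

# Perturb only the last target position: with the causal mask, earlier outputs do not change.
tgt2 = tgt.clone()
tgt2[0, -1] += 1.0
out2 = layer(tgt2, memory, tgt_mask=causal)
print(torch.allclose(out[0, :-1], out2[0, :-1], atol=1e-5))  # True
```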

The Transformer Architecture From a Top View

There are two components in a Transformer Architecture: the Encoder and the Decoder. These components work in conjunction with each other and share several similarities. Encoder: Converts an inp...

📚 Read more at Towards AI
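In the original Transformer, the encoder and decoder connect through cross-attention (encoder-decoder attention). Queries come from the decoder, while keys and values come from the encoder's output. The sketch below shows that connection in plain Python. Learned query/key/value projections and multiple heads are deliberately omitted, and the vectors are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(queries, keys, values):
    # Scaled dot-product attention: each query row attends over all key rows.
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out

# Hypothetical 2-d vectors standing in for learned representations.
encoder_output = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source positions
decoder_states = [[5.0, 0.0], [0.0, 5.0]]              # 2 target positions

# Cross-attention: queries from the decoder, keys and values from the encoder.
context = attention(decoder_states, encoder_output, encoder_output)
```

Each decoder position receives a weighted mix of encoder positions. The first query mostly attends to the source vectors that point along the first axis, so its context vector leans that way.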

Simplifying Transformers: State of the Art NLP Using Words You Understand — part 5— Decoder and…

Simplifying Transformers: State of the Art NLP Using Words You Understand, Part 5: Decoder and Final Output. The final part of the Transformer series. This 4th part of t...

📚 Read more at Towards Data Science
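The "final output" step of a decoder is usually a linear projection from the hidden size to the vocabulary size, followed by a softmax that turns logits into next-token probabilities. Here is a minimal plain-Python sketch of that step. The vocabulary, weights, and hidden vector are all hypothetical.

```python
import math

def linear(x, weight, bias):
    # weight has one row per vocabulary entry: logits[v] = weight[v] . x + bias[v]
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b for row, b in zip(weight, bias)]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

vocab = ["<eos>", "the", "cat", "sat"]                  # hypothetical vocabulary
W = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.9], [0.2, 0.2]]  # hypothetical weights (vocab x d_model)
b = [0.0, 0.0, 0.0, 0.0]

last_hidden = [1.0, 2.0]  # decoder output at the final position (d_model = 2)
probs = softmax(linear(last_hidden, W, b))
print(vocab[max(range(len(vocab)), key=lambda i: probs[i])])  # cat
```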

Transformer Architecture Part -2

In the first part of this series (Transformer Architecture Part-1), we explored the Transformer Encoder, which is essential for capturing complex patterns in input data. However, for tasks like machine...

📚 Read more at Towards AI
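A decoder that produces output one token at a time uses masked (causal) self-attention, unlike the encoder. Position i may attend only to positions 0 through i. Here is a minimal plain-Python sketch of that idea. Queries, keys, and values are the inputs themselves (no learned projections), which is a simplification. Skipping future positions is equivalent to setting their scores to -inf before the softmax.

```python
import math

def causal_self_attention(x):
    # x: list of position vectors, used directly as queries, keys and values.
    d = len(x[0])
    out = []
    for i, q in enumerate(x):
        # Only positions 0..i are visible to position i; later ones are masked out.
        scores = [sum(a * b for a, b in zip(q, x[j])) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) / total for k in range(d)])
    return out

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(causal_self_attention(x)[0])  # [1.0, 0.0]: the first position can only see itself
```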

De-coded: Transformers explained in plain English

No code, maths, or mention of Keys, Queries and Values. Since their introduction in 2017, transformers have emerged as a prominent force in the field of Machine Learning, revolutionizing the capabilit...

📚 Read more at Towards Data Science

Implementing the Transformer Decoder from Scratch in TensorFlow and Keras

Last Updated on October 26, 2022. There are many similarities between the Transformer encoder and decoder, such as their implementation of multi-head attention, layer normalization, and a fully connect...

📚 Read more at Machine Learning Mastery
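One piece shared by the encoder and decoder is the residual "Add & Norm" wrapper. In the original Transformer, each sub-layer's output is combined as LayerNorm(x + Sublayer(x)). The linked article builds this in TensorFlow/Keras. The sketch below is instead a plain-Python illustration of the same formula. The learned gain and bias of layer normalization are omitted, and `feed_forward` is a hypothetical stand-in for the real sub-layer.

```python
import math

def layer_norm(x, eps=1e-5):
    # Normalize one position vector to zero mean and unit variance
    # (learned gain and bias omitted; they would start at 1 and 0).
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def add_and_norm(x, sublayer_out):
    # Post-norm residual connection: LayerNorm(x + Sublayer(x)).
    return layer_norm([a + b for a, b in zip(x, sublayer_out)])

def feed_forward(x):
    # Hypothetical stand-in for the position-wise feed-forward network.
    return [max(0.0, 2.0 * v - 1.0) for v in x]

x = [1.0, 2.0, 3.0, 4.0]
y = add_and_norm(x, feed_forward(x))
```

The same wrapper applies to the attention sub-layers. Only the function passed in as the sub-layer changes.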

TransformerEncoder

TransformerEncoder is a stack of N encoder layers. Users can build the BERT (https://arxiv.org/abs/1810.04805) model with corresponding parameters. Parameters: encoder_layer – an instance of the TransformerEncod...

📚 Read more at PyTorch documentation
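The TransformerEncoder entry can be illustrated with a matching usage sketch. It assumes PyTorch 1.9 or later and uses hypothetical small sizes. Unlike the decoder, the encoder needs no causal mask. Here it takes only a padding mask marking positions to ignore.

```python
import torch
import torch.nn as nn

# Hypothetical small sizes for illustration.
encoder_layer = nn.TransformerEncoderLayer(d_model=64, nhead=4, dim_feedforward=128, batch_first=True)
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)

src = torch.randn(2, 6, 64)  # (batch, seq_len, d_model)
# Padding mask: True marks positions to ignore (the last two tokens of the second sample).
pad_mask = torch.tensor([[False] * 6, [False] * 4 + [True] * 2])

out = encoder(src, src_key_padding_mask=pad_mask)
print(out.shape)  # torch.Size([2, 6, 64])
```

For BERT-base-like sizes, one could pass `d_model=768`, `nhead=12`, `dim_feedforward=3072`, `activation="gelu"`, and `num_layers=12`. This reproduces only the encoder stack, not BERT's embeddings, pretraining heads, or weights.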

Implementing a Transformer Encoder from Scratch with JAX and Haiku

Understanding the fundamental building blocks of Transformers. Introduced in 2017 in the seminal paper “Attention is all you need”[...

📚 Read more at Towards Data Science
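One building block introduced in "Attention is all you need" is the sinusoidal positional encoding, which is added to token embeddings before the encoder. The excerpt does not show whether the linked JAX/Haiku article covers it, so treat this as a plain-Python sketch of the paper's formula, not that article's code: PE[pos, 2i] = sin(pos / 10000^(2i/d_model)) and PE[pos, 2i+1] = cos(pos / 10000^(2i/d_model)).

```python
import math

def sinusoidal_positional_encoding(max_len, d_model):
    pe = []
    for pos in range(max_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = sinusoidal_positional_encoding(max_len=4, d_model=6)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```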