optimizers deep learning

Understand Optimizers in Deep Learning

Optimizers are central to machine learning, particularly deep learning, where they do their work by reducing or minimizing the loss of our model. Optimizers are the methods or…

📚 Read more at Towards AI

OPTIMIZERS IN DEEP LEARNING

Optimizers are algorithms or methods used to change the attributes of your neural network, such as weights and learning rate, in order to reduce the losses. BGD (batch gradient descent) takes the whole training dataset and…

📚 Read more at Analytics Vidhya

Optimizers

In machine/deep learning, the main purpose of optimizers is to reduce the cost/loss by updating weights, learning rates and biases, and so improve model performance. Many people are already training neural…

📚 Read more at Towards Data Science

Optimization Algorithms for Deep Learning

Optimization algorithms for deep learning, such as batch and mini-batch gradient descent, Momentum, RMSProp, and the Adam optimizer.

📚 Read more at Analytics Vidhya

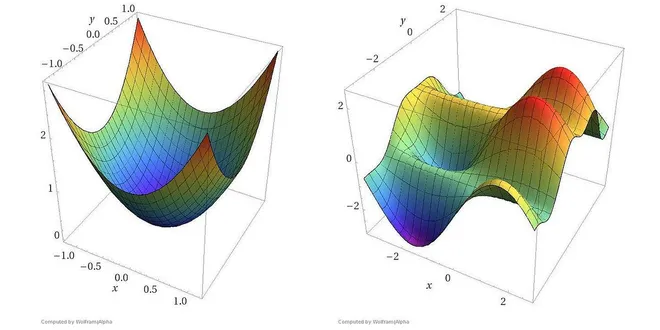

Optimization and Deep Learning

In this section, we will discuss the relationship between optimization and deep learning as well as the challenges of using optimization in deep learning. For a deep learning problem, we will usually ...

📚 Read more at Dive into Deep Learning Book

Deep Learning Optimizers

This blog post explores how advanced optimization techniques work. We will learn the mathematical intuition behind optimizers such as SGD with momentum, Adagrad, Adadelta, and Adam…

📚 Read more at Towards Data Science

Mastering Optimizers with TensorFlow: A Deep Dive Into Efficient Model Training

Optimizing neural networks for peak performance is a critical pursuit in the ever-changing world of machine learning. TensorFlow, a popular open-source framework, includes several optimizers that are ...

📚 Read more at Python in Plain English

AdaHessian: a second order optimizer for deep learning

Most of the optimizers used in deep learning are (stochastic) gradient descent methods. They only consider the gradient of the loss function. In comparison, second order methods also take the…

📚 Read more at Towards Data Science

Overview of various Optimizers in Neural Networks

Optimizers are algorithms or methods used to change the attributes of the neural network such as weights and learning rate to reduce the losses. Optimizers are used to solve optimization problems by…

📚 Read more at Towards Data Science

Deep Learning Part — 9 : Optimizers are what you need.

Optimizers in neural networks play a key role in faster convergence.

📚 Read more at Towards AI

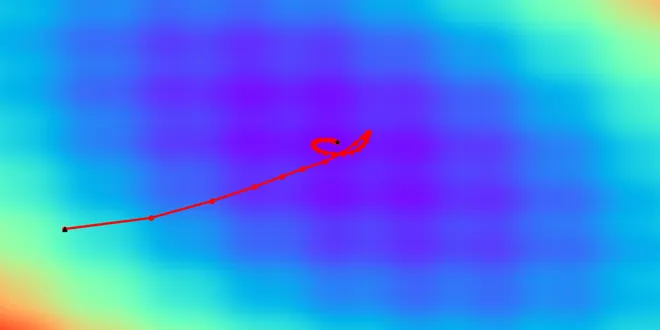

Understanding Deep Learning Optimizers: Momentum, AdaGrad, RMSProp & Adam

Gain intuition behind acceleration techniques for training neural networks. Introduction: Deep learning made a gigantic step in the world of artificial intelligence. At the current moment, neural networ...

📚 Read more at Towards Data Science

Optimizers

What is an optimizer? It is very important to tweak the weights of the model during the training process, to make our predictions as correct and optimized as possible. But how exactly do you ...

📚 Read more at Machine Learning Glossary