small-language-model

Small Language Models (SLMs) are an emerging class of AI language models designed to run efficiently on standard hardware, which makes them practical for a wider range of applications. Whereas large language models can have tens of billions to trillions of parameters, SLMs typically contain anywhere from a few million to a few billion. This compact size lets them run on edge devices such as mobile phones and personal computers, making them a cheaper and more energy-efficient alternative. SLMs are gaining traction across many fields. On specific tasks, they have shown that a smaller model can be as effective as a larger one, or even more so.
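Here is a back-of-the-envelope sketch that connects parameter count to hardware. The memory needed just to hold a model's weights is roughly the number of parameters times the bytes stored per parameter. The precision table and the 3B/70B model sizes below are illustrative assumptions, not figures for any specific model. The estimate also ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: only the weights are counted; activations, KV cache,
# and framework overhead are ignored, so real usage is higher.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (GB, 1e9 bytes) needed just to store the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical round-number model sizes, for illustration only.
    models = {"3B-param SLM": 3e9, "70B-param LLM": 70e9}
    for name, n in models.items():
        for prec in ("fp16", "int4"):
            print(f"{name} @ {prec}: ~{weight_memory_gb(n, prec):.1f} GB")
```

With these numbers, a 3B-parameter model quantized to 4 bits needs about 1.5 GB for its weights, which is plausible on many phones and laptops. A 70B model still needs about 35 GB even at 4 bits.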

Small Language Models

Large language models have become very popular recently due to the amazing capabilities shown by these models. Their applicability to a...

Read more at Towards AI

Why Small Language Models Make Business Sense

(Image generated by Gemini AI) Small Language Models are changing the way businesses implement AI by providing solutions that operate efficiently using standard hardware. Despite the attention given to ...

Read more at Towards AI

It is raining Language Models! All about the new Small Language Models — Phi-2

The Dawn of Small Language Models: Introducing Phi-2, which outperformed Llama-2 (70B), a model 25 times its size! Image by ...

Read more at Towards AI

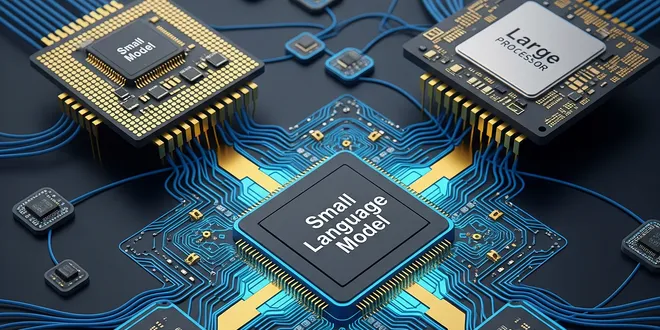

Your Company Needs Small Language Models

(Image generated by Stable Diffusion) When specialized models outperform general-purpose models. “Bigger is always better”: this principle is deeply rooted in the AI world. Every month, larger models ar...

Read more at Towards Data Science

Exploring the Power of Small Language Models

(AI-generated image) In the rapidly evolving landscape of Artificial Intelligence, Large Language Models (LLMs) have captivated the public imagination with their remarkable capabilities in understanding and g...

Read more at Towards AI

Small Language Models (SLMs): A Practical Guide to Architecture and Deployment

(Image generated by OpenAI: SLM visual showcase with points.) I. Introduction: Small Language Models (SLMs) are reshaping how we think about AI efficiency. Unlike their massive counterparts, think GPT-...

Read more at Towards AI

Small Language Models (SLMs) in Enterprise: A Focused Approach to AI

One size does not fit all. Large language models (LLMs) like GPT-4 have certainly grabbed headlines with their broad knowledge and versatility. Yet, there’s a growing sense that sometimes, bigger isn...

Read more at Towards AI

Some Technical Notes About Phi-3: Microsoft’s Marquee Small Language Model

The model is able to outperform much larger alternatives and can now run locally on mobile devices. (Image created using Ideogram)

Read more at Towards AI

Not-So-Large Language Models: Good Data Overthrows the Goliath

(Image generated by DALL·E) How to make a million-size language model that tops a billion-size one. In this article, we will see how language models (LMs) can focus on better data and training strategi...

Read more at Towards Data Science

Small But Mighty — The Rise of Small Language Models

Our world has been strongly impacted by the launch of Large Language Models (LLMs). They exploded onto the scene, with the GPT-3.5-powered ChatGPT app amassing a million users in just five days, a testament ...

Read more at Towards Data Science

Exploring “Small” Vision-Language Models with TinyGPT-V

TinyGPT-V is a “small” vision-language model that can run on a single GPU. Summary: AI technologies are continuing to become embedded in our everyday lives. One application of AI includes going multi-m...

Read more at Towards Data Science

Large Language Models: DistilBERT — Smaller, Faster, Cheaper and Lighter

Unlocking the secrets of BERT compression: a student-teacher framework for maximum efficiency. Introduction: In recent years, th...

Read more at Towards Data Science
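The DistilBERT teaser above refers to a student-teacher framework, i.e. knowledge distillation, where a small student model is trained to match the output distribution of a larger teacher. The sketch below assumes the generic soft-target loss from Hinton et al., not DistilBERT's exact training objective, which also combines other loss terms. The hypothetical `distillation_loss` function mixes two terms: a temperature-softened KL divergence between teacher and student, and ordinary cross-entropy on the true label. The logits, `temperature=2.0`, and `alpha=0.5` are made-up illustrative values.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Hypothetical Hinton-style KD loss (a generic sketch, not DistilBERT's recipe):
    alpha * T^2 * KL(teacher || student) at temperature T
    + (1 - alpha) * cross-entropy of the student against the hard label."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft term's gradient magnitude comparable across temperatures.
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    # Hypothetical logits for a 3-class problem, for illustration only.
    teacher = [4.0, 1.0, 0.5]
    close_student = [3.5, 1.2, 0.4]
    far_student = [0.5, 3.0, 1.0]
    print(distillation_loss(close_student, teacher, true_label=0))
    print(distillation_loss(far_student, teacher, true_label=0))
```

A student whose logits resemble the teacher's gets a lower loss than one that ranks the classes differently. Raising the temperature softens both distributions, so the student also learns from the teacher's relative scores for the wrong classes.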