Batch Normalization

Batch normalization is a technique used in deep learning to improve the training of neural networks. Introduced by Sergey Ioffe and Christian Szegedy in 2015, it was designed to address internal covariate shift by normalizing the inputs to each layer within the network. During training, each feature is normalized over the current mini-batch to have a mean of zero and a variance of one, and the result is then scaled and shifted by two learnable parameters; this accelerates training and improves stability. Because it reduces sensitivity to weight initialization and acts as a form of regularization, batch normalization has become a standard component of modern deep learning architectures and makes it practical to train much deeper networks.
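The core training-time computation fits in a few lines of NumPy. This is a minimal sketch, assuming a 2-D input of shape (batch, features); the function name `batch_norm_forward` and the default `eps=1e-5` are illustrative choices, not taken from any of the sources below. `gamma` and `beta` are the learnable scale and shift.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for a 2-D input of shape (N, D).

    Statistics are computed per feature (column) over the N examples
    in the mini-batch.
    """
    mu = x.mean(axis=0)                    # per-feature mini-batch mean, shape (D,)
    var = x.var(axis=0)                    # per-feature biased variance, shape (D,)
    x_hat = (x - mu) / np.sqrt(var + eps)  # zero mean, (almost exactly) unit variance
    return gamma * x_hat + beta            # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))  # 64 examples, 4 features
y = batch_norm_forward(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0).round(6))
print(y.var(axis=0).round(4))
```

With `gamma = 1` and `beta = 0` the output simply has per-feature mean 0 and variance close to 1; during training the network is free to learn other values for `gamma` and `beta` if a different scale and offset work better.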

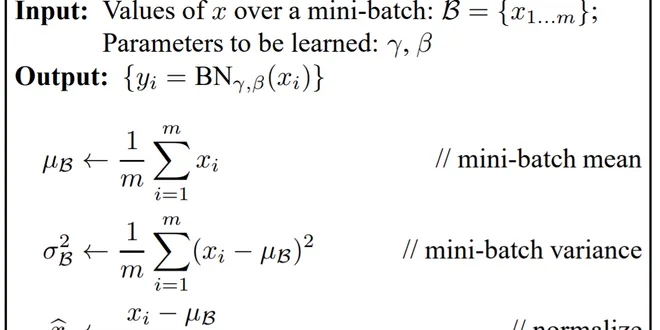

The Math Behind Batch Normalization

Batch Normalization is a key technique in neural networks as it standardizes the inputs to each layer. It tackles the problem of internal covariate shift, where the input distribution of each layer…

📚 Read more at Towards Data Science
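For reference, the transform from Ioffe and Szegedy's paper, applied to a single feature over a mini-batch $\mathcal{B} = \{x_1, \dots, x_m\}$, can be written as follows, where $\epsilon$ is a small constant added for numerical stability and $\gamma$, $\beta$ are learned separately for each feature:

```latex
\begin{aligned}
\mu_\mathcal{B} &= \frac{1}{m}\sum_{i=1}^{m} x_i \\
\sigma_\mathcal{B}^2 &= \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_\mathcal{B}\right)^2 \\
\hat{x}_i &= \frac{x_i - \mu_\mathcal{B}}{\sqrt{\sigma_\mathcal{B}^2 + \epsilon}} \\
y_i &= \gamma \hat{x}_i + \beta
\end{aligned}
```

The first three lines standardize the feature; the last line lets the network undo or rescale that standardization where it helps.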

What is batch normalization?

Batch normalization was introduced in Sergey Ioffe and Christian Szegedy’s 2015 paper “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift”. Batch…

📚 Read more at Towards Data Science

Batch Normalization

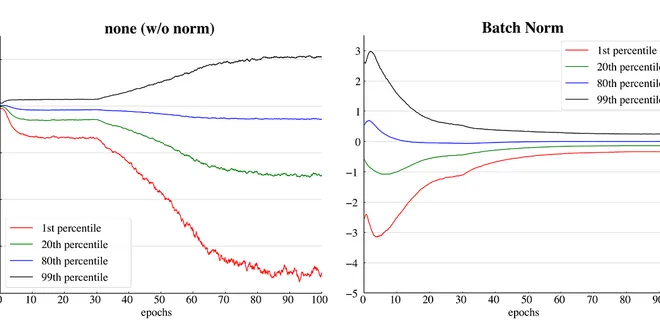

Training deep neural networks is difficult. Getting them to converge in a reasonable amount of time can be tricky. In this section, we describe batch normalization, a popular and effective technique ...

📚 Read more at Dive into Deep Learning book

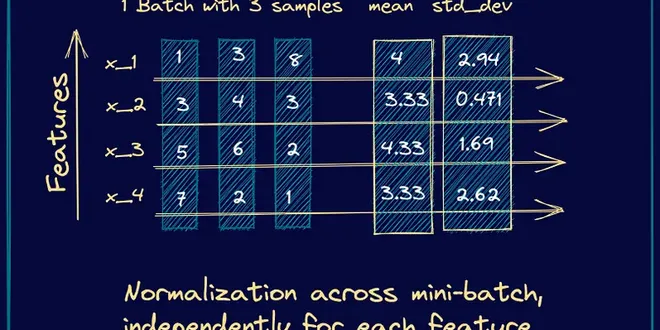

Batch Normalization

The idea is that, instead of just normalizing the inputs to the network, we normalize the inputs to layers within the network. It’s called “batch” normalization because during training, we normalize…

📚 Read more at Towards Data Science
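Because the statistics come from the batch during training, a layer also needs fixed statistics for inference, when inputs may arrive one at a time. The following is an illustrative NumPy sketch, not any library's API: the class name `SimpleBatchNorm`, the `momentum=0.1` moving-average update, and storing the biased batch variance in the running estimate are assumptions (frameworks differ in these details; PyTorch, for example, stores an unbiased running variance).

```python
import numpy as np

class SimpleBatchNorm:
    """Hypothetical minimal batch norm layer for inputs of shape (N, D).

    Training mode normalizes with mini-batch statistics and updates
    exponential moving averages; inference mode uses the stored averages.
    """

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.gamma = np.ones(num_features)   # learnable scale
        self.beta = np.zeros(num_features)   # learnable shift
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps
        self.training = True

    def __call__(self, x):
        if self.training:
            mean = x.mean(axis=0)
            var = x.var(axis=0)  # biased variance (an assumption, see above)
            # Exponential moving averages, used later at inference time.
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            mean, var = self.running_mean, self.running_var
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta

rng = np.random.default_rng(0)
bn = SimpleBatchNorm(3)
for _ in range(200):  # "training" on batches drawn from one distribution
    bn(rng.normal(loc=2.0, scale=4.0, size=(32, 3)))

bn.training = False
single = bn(np.array([[2.0, 2.0, 2.0]]))  # inference on a batch of one example
print(bn.running_mean, bn.running_var, single)
```

After training, the running mean settles near 2 and the running variance near 16, so an input equal to the data mean is mapped close to 0 even though it is normalized on its own.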

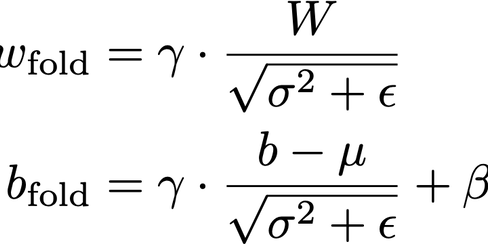

Speed-up inference with Batch Normalization Folding

Batch Normalization is a technique that normalizes the input of each layer to make training faster and more stable. In practice, it is an extra layer that we generally add…

📚 Read more at Towards Data Science
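Folding is possible because, at inference time, batch normalization uses fixed statistics, so it reduces to a per-feature affine map that can be merged into the weights and bias of the preceding linear layer. A minimal NumPy sketch, assuming a dense layer computing `x @ W + b` with `W` of shape (inputs, outputs); for a convolution, the same scale is applied per output channel. The function name `fold_batch_norm` is hypothetical.

```python
import numpy as np

def fold_batch_norm(W, b, gamma, beta, running_mean, running_var, eps=1e-5):
    """Merge an inference-mode batch norm into the preceding dense layer.

    Assumes the layer computes z = x @ W + b, with W of shape (in, out),
    followed by batch norm over the `out` features.
    """
    scale = gamma / np.sqrt(running_var + eps)  # one factor per output feature
    W_folded = W * scale                        # broadcasting scales each column of W
    b_folded = (b - running_mean) * scale + beta
    return W_folded, b_folded

rng = np.random.default_rng(0)
W, b = rng.normal(size=(5, 3)), rng.normal(size=3)
gamma, beta = rng.normal(size=3), rng.normal(size=3)
mean, var = rng.normal(size=3), rng.uniform(0.5, 2.0, size=3)
x = rng.normal(size=(8, 5))

# Reference: dense layer followed by a separate inference-mode batch norm.
z = x @ W + b
reference = gamma * (z - mean) / np.sqrt(var + 1e-5) + beta

# Folded: a single dense layer with adjusted weights and bias.
W_f, b_f = fold_batch_norm(W, b, gamma, beta, mean, var)
folded = x @ W_f + b_f
print(np.allclose(reference, folded))
```

The folded layer produces the same outputs with one matrix multiply and no separate normalization step, which is where the inference speed-up comes from.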

A Novel Way to Use Batch Normalization

Batch normalization is essential for every modern deep learning algorithm. Normalizing output features before passing them on to the next layer stabilizes the training of large neural networks. Of…

📚 Read more at Towards Data Science

Why does Batch Normalization work?

Batch Normalization is a widely used technique for faster and more stable training of deep neural networks. While the reason for the effectiveness of BatchNorm is said ...

📚 Read more at Towards AI

An Alternative To Batch Normalization

The development of Batch Normalization (BN) as a normalization technique was a turning point in the development of deep learning models; it enabled various networks to train and converge. Despite its…

📚 Read more at Towards Data Science

Why Batch Normalization Matters?

Batch Normalization (BN) has been state of the art since its inception. It lets us use higher learning rates, and even sigmoid activation functions, in deep neural networks. It…

📚 Read more at Towards AI

Implementing Batch Normalization in Python

Batch normalization deals with the problem of poor initialization of neural networks. It can be interpreted as doing preprocessing at every layer of the network. It forces the activations in a netwo...

📚 Read more at Towards Data Science
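One possible NumPy implementation of both the forward and backward passes is sketched below, assuming a 2-D input of shape (N, D). The function names and the contents of `cache` are our own choices; the backward pass uses the standard simplified gradient, and the usage lines compare it against central finite differences.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode forward pass; returns the output and values needed for backprop."""
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    inv_std = 1.0 / np.sqrt(var + eps)
    x_hat = (x - mu) * inv_std
    cache = (x_hat, inv_std, gamma)
    return gamma * x_hat + beta, cache

def batch_norm_backward(dy, cache):
    """Gradients of the loss w.r.t. x, gamma and beta, given dL/dy."""
    x_hat, inv_std, gamma = cache
    m = dy.shape[0]
    dbeta = dy.sum(axis=0)
    dgamma = (dy * x_hat).sum(axis=0)
    dx_hat = dy * gamma
    # Compact form of the chain rule through the batch mean and variance.
    dx = inv_std / m * (m * dx_hat - dx_hat.sum(axis=0)
                        - x_hat * (dx_hat * x_hat).sum(axis=0))
    return dx, dgamma, dbeta

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 3))
gamma, beta = rng.normal(size=3), rng.normal(size=3)
dy = rng.normal(size=(6, 3))  # upstream gradient for the loss L = sum(y * dy)

_, cache = batch_norm_forward(x, gamma, beta)
dx, dgamma, dbeta = batch_norm_backward(dy, cache)

# Central finite differences of L with respect to each entry of x.
h = 1e-6
num_dx = np.zeros_like(x)
for idx in np.ndindex(*x.shape):
    xp, xm = x.copy(), x.copy()
    xp[idx] += h
    xm[idx] -= h
    lp = (batch_norm_forward(xp, gamma, beta)[0] * dy).sum()
    lm = (batch_norm_forward(xm, gamma, beta)[0] * dy).sum()
    num_dx[idx] = (lp - lm) / (2 * h)
print(np.max(np.abs(dx - num_dx)))
```

Caching `x_hat` and `inv_std` from the forward pass avoids recomputing the batch statistics during backpropagation.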

Curse of Batch Normalization

Batch Normalization is indeed one of the major breakthroughs in the field of deep learning and has been one of the hot topics of discussion among researchers in the past few years. Batch Normalization is a…

📚 Read more at Towards Data Science

Deep learning basics — batch normalization

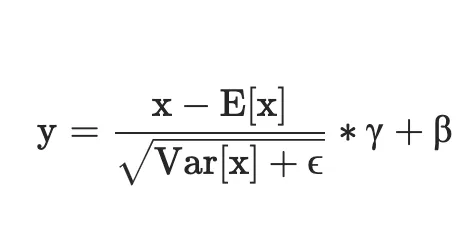

Batch normalization normalizes the activations of the network between layers over each mini-batch, so that every feature has a mean of 0 and a variance of 1 across the batch. Batch normalization is normally written as…

📚 Read more at Analytics Vidhya
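As a small worked example (numbers chosen for illustration, with $\gamma = 1$, $\beta = 0$ and the stability constant $\epsilon$ neglected), take one feature whose values across a mini-batch of four examples are $1, 2, 3, 4$. Here $\mu_\mathcal{B}$ and $\sigma_\mathcal{B}^2$ denote the mini-batch mean and (biased) variance:

```latex
\begin{aligned}
\mu_\mathcal{B} &= \tfrac{1}{4}(1 + 2 + 3 + 4) = 2.5 \\
\sigma_\mathcal{B}^2 &= \tfrac{1}{4}\big((-1.5)^2 + (-0.5)^2 + 0.5^2 + 1.5^2\big) = 1.25 \\
\hat{x} &= \frac{x - 2.5}{\sqrt{1.25}} \approx (-1.342,\ -0.447,\ 0.447,\ 1.342)
\end{aligned}
```

The normalized values have mean 0 and variance 1 (up to rounding), while their relative ordering and spacing are preserved.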