Parameter-Penalties

Parameter penalties are regularization techniques used in machine learning and statistical modeling to reduce overfitting and improve generalization. They add a penalty term, a function of the model’s parameters, to the loss function, which discourages large parameter values and keeps the model from fitting the training data too closely. Common choices are the L1 penalty (Lasso), based on the sum of absolute parameter values, and the L2 penalty (Ridge), based on the sum of squared parameter values. L1 regularization encourages sparsity by driving some parameters exactly to zero, which yields simpler models, while L2 regularization shrinks all parameters toward zero without eliminating them. Understanding and applying these penalties is important for building robust predictive models.
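As a minimal sketch of the difference between the two penalties, consider a single weight `w` fitted to a target value `a` under a squared-error loss. The function names and the one-dimensional setup are illustrative assumptions, not taken from any particular library. Under the L1 penalty the minimizer is the soft-thresholding rule, which sets small weights exactly to zero; under the L2 penalty the minimizer only rescales the weight.

```python
def l1_minimizer(a: float, lam: float) -> float:
    """Minimize 0.5 * (w - a)**2 + lam * abs(w) over w (soft-thresholding)."""
    if a > lam:
        return a - lam
    if a < -lam:
        return a + lam
    return 0.0  # |a| <= lam: the penalty wins and the weight is exactly zero


def l2_minimizer(a: float, lam: float) -> float:
    """Minimize 0.5 * (w - a)**2 + 0.5 * lam * w**2 over w (shrinkage)."""
    return a / (1.0 + lam)


# Compare both rules on a large, a small, and a negative unpenalized weight.
for a in (2.0, 0.3, -0.1):
    print(a, l1_minimizer(a, 0.5), l2_minimizer(a, 0.5))
```

With `lam = 0.5`, the small inputs are mapped to exactly zero by the L1 rule, while the L2 rule returns a smaller but nonzero value for every nonzero input.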

Prevent Parameter Pollution in Node.JS

HTTP Parameter Pollution (HPP) is a vulnerability that occurs when multiple parameters with the same name are passed in a single request…

📚 Read more at Level Up Coding
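The linked article targets Node.js; the sketch below uses Python's standard library only to illustrate the underlying issue, namely that a query string may carry the same parameter name more than once. The helper `polluted_parameters` is a hypothetical name introduced here, not part of any framework.

```python
from urllib.parse import parse_qs


def polluted_parameters(query_string: str) -> list[str]:
    """Return the names of parameters that appear more than once."""
    parsed = parse_qs(query_string, keep_blank_values=True)
    return sorted(name for name, values in parsed.items() if len(values) > 1)


query = "user=alice&role=viewer&role=admin"
print(parse_qs(query))             # every value is kept, in order of appearance
print(polluted_parameters(query))  # names a handler could reject or collapse
```

A handler that expects a single value per name could use a check like this to reject the request, or to decide explicitly which occurrence to trust.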

Parameter Constraints & Significance

Setting the values of one or more parameters for a GARCH model or applying constraints to the range of permissible values can be useful.

📚 Read more at R-bloggers

Parameter Management

Once we have chosen an architecture and set our hyperparameters, we proceed to the training loop, where our goal is to find parameter values that minimize our loss function. After training, we will ne...

📚 Read more at Dive into Deep Learning Book

Parameters

Section 4.3: Parameters. If a subroutine is a black box, then a parameter is something that provides a mechanism for passing information from the outside world into the box. Parameters are part of the...

📚 Read more at Introduction to Programming Using Java

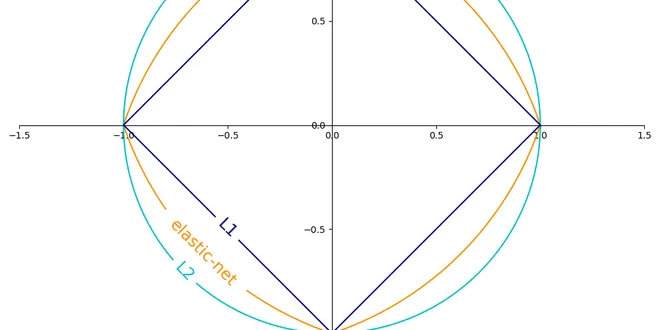

SGD: Penalties

Contours of where the penalty is equal to 1 for the three penalties L1, L2 and elastic-net. All of the above are supported by SGDClassifier and SGDRegressor.

📚 Read more at Scikit-learn Examples
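The contours mentioned above can be traced without plotting by asking how far from the origin, along a given direction, the penalty reaches 1. The sketch below is an illustration under stated assumptions: it takes the L1 penalty as `|x| + |y|`, the L2 penalty as `x**2 + y**2`, and the elastic-net penalty as a weighted mix of the two with weight `l1_weight`. These scalings and the name `contour_radius` are assumptions and may differ from the exact definitions scikit-learn uses.

```python
import math


def contour_radius(theta: float, l1_weight: float) -> float:
    """Distance from the origin, along direction theta, at which
    l1_weight * (|x| + |y|) + (1 - l1_weight) * (x**2 + y**2) equals 1."""
    s1 = abs(math.cos(theta)) + abs(math.sin(theta))  # L1 norm of the unit direction
    a = 1.0 - l1_weight   # coefficient of r**2
    b = l1_weight * s1    # coefficient of r
    if a == 0.0:
        return 1.0 / b    # pure L1: linear equation b * r = 1
    # Positive root of a * r**2 + b * r - 1 = 0.
    return (-b + math.sqrt(b * b + 4.0 * a)) / (2.0 * a)


# Radius of each contour along the diagonal direction (45 degrees).
for name, weight in (("L1", 1.0), ("elastic-net", 0.5), ("L2", 0.0)):
    print(name, round(contour_radius(math.pi / 4, weight), 4))
```

Under these assumptions all three contours pass through the same points on the axes, and along the diagonal the elastic-net contour lies between the L1 diamond and the L2 circle.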

UninitializedParameter

A parameter that is not initialized. Uninitialized parameters are a special case of torch.nn.Parameter where the shape of the data is still unknown. Unlike a torch.nn.Parameter, uninitialized paramet...

📚 Read more at PyTorch documentation

Parameter

A kind of Tensor that is to be considered a module parameter. Parameters are Tensor subclasses that have a very special property when used with Modules: when they’re assigned as Module attributes t...

📚 Read more at PyTorch documentation

The Hidden Costs of Optional Parameters

The Hidden Costs of Optional Parameters, and Why Separate Methods Are Often Better. By René Reifenrath. In this art...

📚 Read more at Level Up Coding

Parameter Updates

In PyTorch, parameter updates refer to adjusting the model’s weights during training to minimize the loss. This is done using an optimizer, which updates the parameters based on the gradients computed...

📚 Read more at Codecademy
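The idea of a gradient-based parameter update can be sketched in plain Python, without PyTorch, as follows. The functions `sgd_step` and `loss_gradient` and the toy squared-error loss are assumptions made for illustration; a real optimizer adds features such as momentum or weight decay.

```python
def sgd_step(weights, gradients, learning_rate):
    """Return new weights after one plain gradient-descent update."""
    return [w - learning_rate * g for w, g in zip(weights, gradients)]


def loss_gradient(weights, target):
    """Gradient of sum((w - t)**2) with respect to each weight."""
    return [2.0 * (w - t) for w, t in zip(weights, target)]


weights = [0.0, 0.0]
target = [3.0, -1.0]
for _ in range(100):
    weights = sgd_step(weights, loss_gradient(weights, target), learning_rate=0.1)
print(weights)  # close to the target after repeated updates
```

Each step moves every weight a fraction of the way against its gradient, so the loss decreases as long as the learning rate is small enough for the problem.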

Parametrizations Tutorial

Implementing parametrizations by hand: Assume that we want to have a square linear layer with symmetric weights, that is, with weights X such that X = Xᵀ. One way to do so is to copy the upper-triangu...

📚 Read more at PyTorch Tutorials
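The symmetric-weight construction can be sketched with nested lists instead of tensors. The helper `symmetrize` is a hypothetical name used here for illustration; it mirrors the upper triangle of a square matrix into the lower triangle so that the result equals its own transpose.

```python
def symmetrize(matrix):
    """Build a symmetric matrix by mirroring the upper triangle of a square matrix."""
    n = len(matrix)
    return [
        [matrix[i][j] if i <= j else matrix[j][i] for j in range(n)]
        for i in range(n)
    ]


raw = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
sym = symmetrize(raw)
print(sym)
```

Only the entries on or above the diagonal of `raw` influence the result, which is what makes this a parametrization: the unconstrained matrix is free, and the constrained one is derived from it.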

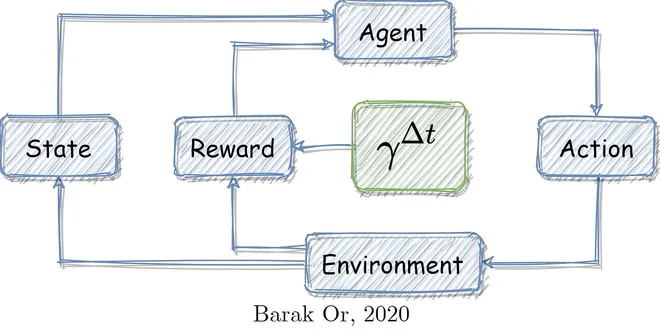

Penalizing the Discount Factor in Reinforcement Learning

This post deals with the parameter I found to have a high influence: the discount factor. It discusses time-based penalization to achieve better performance, where the discount factor is modified…

📚 Read more at Towards Data Science
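For context, the role of the discount factor can be sketched with the standard discounted return, in which the reward at step t is weighted by gamma to the power t. This shows only the usual definition, not the time-based modification the post describes; the function name `discounted_return` is an assumption.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards where the reward at step t is weighted by gamma**t."""
    total = 0.0
    # Work backwards so each earlier step adds its reward to the discounted tail.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [1.0, 1.0, 1.0]
print(discounted_return(rewards, 1.0))  # no discounting: plain sum
print(discounted_return(rewards, 0.5))  # later rewards count for less
```

A smaller gamma makes the agent value near-term rewards more heavily, which is why changes to it can have a large effect on learned behavior.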

Optimizing Model Parameters

Now that we have a model and data it’s time to train, validate and test our model by optimizing its parameters on our data. Training a model is an iterative process; in eac...

📚 Read more at PyTorch Tutorials