What is GPT

GPT — Intuitively and Exhaustively Explained

In this article we’ll be exploring the evolution of OpenAI’s GPT models. We’ll briefly cover the transformer, describe variations of the transformer that led to the first GPT model, then we’ll go th...

📚 Read more at Towards Data Science

What Is GPT-3 And Why It is Revolutionizing Artificial Intelligence?

Generative Pre-trained Transformer 3 (GPT-3) is an auto-regressive language model that uses deep learning to produce human-like text. It is the third-generation language prediction model in the GPT-n…

📚 Read more at Analytics Vidhya

GPT-2 (GPT2) vs GPT-3 (GPT3): The OpenAI Showdown

The Generative Pre-Trained Transformer (GPT) is an innovation in the Natural Language Processing (NLP) space developed by OpenAI. These models are known to be the most advanced of their kind and can…

📚 Read more at Becoming Human: Artificial Intelligence Magazine

GPT-3 101: a brief introduction

Let’s start with the basics. GPT-3 stands for Generative Pretrained Transformer version 3, and it is a sequence transduction model. Simply put, sequence transduction is a technique that transforms an…

📚 Read more at Towards Data Science

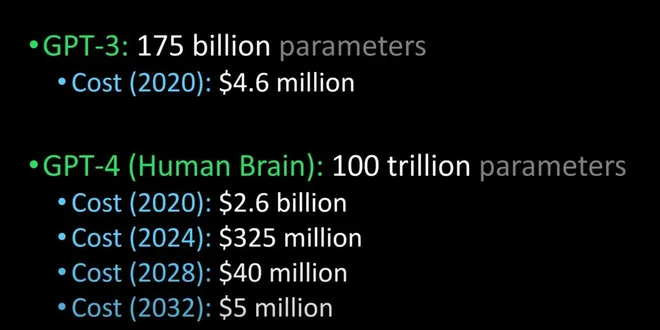

What is GPT-4 (and when?)

GPT-4 is a natural language processing model produced by OpenAI as a successor to GPT-3.

📚 Read more at Towards AI

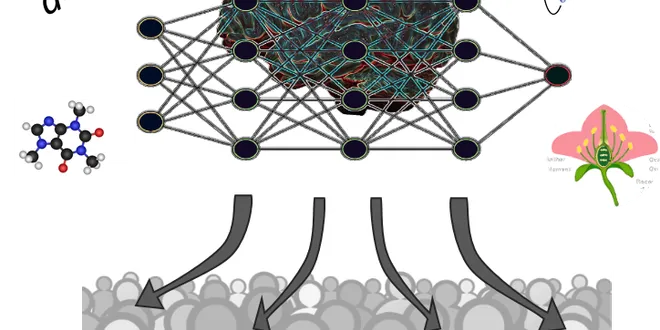

Devising tests to measure GPT-3's knowledge of the basic sciences

Generative Pre-trained Transformers (GPTs) are deep-learned autoregressive language models trained on a large corpus of text that, given an input prompt, synthesize an output that intends to pass as…

📚 Read more at Towards Data Science

Large Language Models, GPT-1 — Generative Pre-Trained Transformer

Diving deeply into the working structure of the first-ever version of the gigantic GPT models. 2017 was a historic year in ...

📚 Read more at Towards Data Science

GPT Model: How Does it Work?

During the last few years, the buzz around AI has been enormous, and the main trigger of all this is obviously the advent of GPT-based large language models. Interestingly, this approach itself is not...

📚 Read more at Towards Data Science

Email Assistant Powered by GPT-3

GPT-3 stands for Generative Pre-trained Transformer 3. It is an autoregressive language model that uses deep learning to produce human-like results in various language tasks. It is the…

📚 Read more at Towards AI

Everything You Need to Know about GPT-4

GPT-4 is the latest and most advanced language model developed by OpenAI, a Microsoft-backed company that aims to create artificial intelligence that can benefit humanity. GPT-4 is a successor of…

📚 Read more at Towards AI

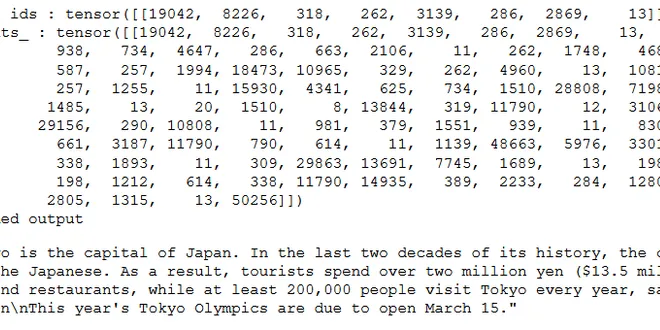

Fine-tune GPT-2

In this post, I will try to show simple usage and training of GPT-2. I assume you have basic knowledge about GPT-2. GPT is an auto-regressive language model. It can generate text for us with its huge…

📚 Read more at Analytics Vidhya

GPT-3 A Powerful New Beginning

OpenAI’s GPT-3 is a powerful text-generating neural network pre-trained on the largest corpus of text to date, capable of uncanny predictive text response based on its input, and is currently by far…

📚 Read more at Level Up Coding