Our world has been undergoing a significant transformation since the launch of ChatGPT, which was initially powered by GPT-3.5. With 1 million users in less than a week, it became one of the fastest-adopted technologies to date, and it looks set to reshape many fields.

But how does this model work?

This is a common question among those curious about how Large Language Models or LLMs can generate natural language. The short answer is that these models are trained with vast amounts of data to excel in natural language processing.

The real magic, however, relies on the Transformer architecture.

First introduced in 2017 in the seminal paper "Attention is All You Need" by researchers at Google, the Transformer architecture underpins groundbreaking models like ChatGPT and many others like Mistral or Gemini, sparking a new wave of advancement in the AI field.

The Transformer is one of the pivotal technologies driving the latest innovations, and this article aims to explain the secrets behind its architecture.

So let’s try to discover it all together.

The Transformer concept

A transformer model is a type of neural network that excels at understanding the context of sequential data and generating new data from it. Initially developed to tackle sequence transduction tasks, such as neural machine translation, Transformers are designed to transform an input sequence into an output sequence.

This capability is the reason behind their name, "Transformers."

Transformers are considered an evolution of the encoder-decoder architecture. Unlike traditional encoder-decoder models that rely on Recurrent Neural Networks (RNNs) to process sequential information, Transformers don’t use recurrence.

This architecture is engineered to grasp context and meaning by examining the relationships between different elements within the data, thanks to a mathematical technique known as attention. Attention allows the model to focus on the relevant parts of the input sequence and understand the dependencies between them.

But before diving too deep into this architecture, let’s contextualize this technology.

Historical Background

Transformers, introduced in the groundbreaking 2017 Google research paper "Attention is All You Need," have become one of the most recent and influential advancements in machine learning (ML). This seminal model, which marked a significant leap in both theoretical and practical terms, was initially implemented in TensorFlow's Tensor2Tensor package. The Harvard NLP group further contributed by providing an annotated guide to the paper along with a PyTorch implementation, making the concept accessible to a wider audience.

The introduction of Transformers triggered a major surge in the field of ML, often referred to as Transformer AI. This revolutionary model laid the groundwork for subsequent breakthroughs in LLMs, including BERT.

In 2020, OpenAI unveiled GPT-3, demonstrating its versatility by generating poems, programs, songs, websites, and more. This model captivated users worldwide within weeks, showcasing the expansive capabilities of Transformer models. By 2021, Stanford researchers had coined the term "foundation models" in a paper, underscoring the foundational impact of Transformers in reshaping AI. Their work highlighted how Transformer models have not only revolutionized the field but also expanded the boundaries of what is achievable in artificial intelligence, ushering in a new era of possibilities.

And this leads us to the following question…

What is the Transformer Architecture?

Transformers excel at converting natural language input sequences into natural language output sequences. They are the first transduction models to rely entirely on self-attention, computing representations of inputs and outputs without using sequence-aligned Recurrent Neural Networks (RNNs) or convolution.

If we think of a Transformer for language translation as a simple black box, it takes a sentence in one language, like Spanish, as input and outputs its translation in another language, such as English.

This process demonstrates the model's ability to handle complex translation tasks effectively by leveraging the power of self-attention. However, for now, we see the Transformer as a black box.

If we get a little bit closer, we observe that there are two main components:

1. The Encoder

This part takes our input and converts it into a matrix representation. For example, it processes the Spanish sentence "¿De quién es?" and transforms it into a structured format that captures the essence of the input.

2. The Decoder

This component receives the encoded representation and iteratively generates the output. In our case, it takes the encoded data and produces the translated sentence "Whose is it?" in English.

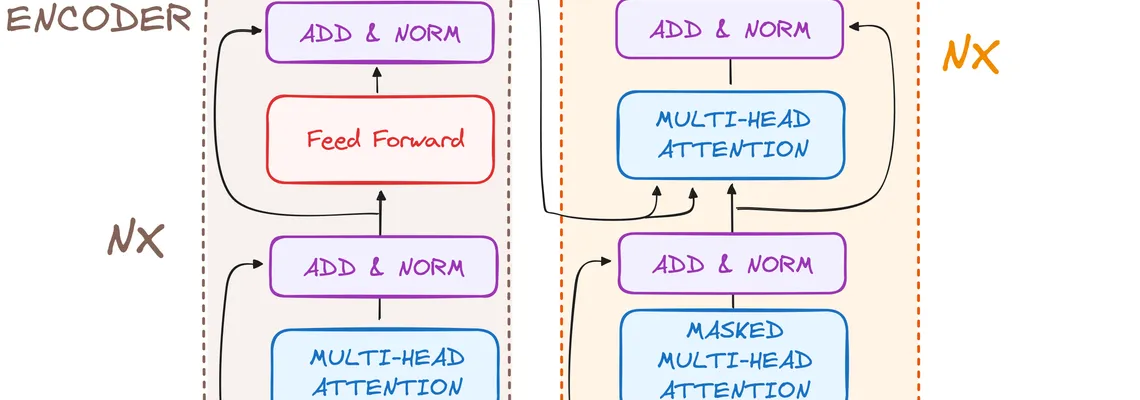

It is worth noting that both the encoder and decoder are stacks comprising multiple layers, with each stack containing the same number of layers. Each encoder layer has an identical structure and processes its input before passing the result to the next layer. Similarly, each decoder layer has a uniform structure, receiving input from the final encoder layer and the previous decoder layer.

The original Transformer architecture consisted of 6 encoders and 6 decoders, but this configuration is flexible. We can replicate as many layers as needed, so let's assume we have N layers for both the encoders and decoders.

Both the encoder and the decoder have an internal architecture that makes possible the generation of this natural language. So let’s try to understand the architecture that builds up both entities.

The Encoder

The encoder is a crucial part of the Transformer architecture. Its primary function is to convert input tokens into contextualized representations. Unlike earlier models that processed tokens in isolation, the Transformer encoder captures the context of each token within the entire sequence.

Let's break down its structure and workflow into their most basic elements:

1. Preprocessing with Input Embedding and Positional Encoding

Input Embedding

The first step is to embed the input text so our model can handle it. This embedding process occurs only in the bottom-most encoder.

The encoder starts by converting input tokens (words or subwords) into vectors using embedding layers. These embeddings are learned numerical vectors that capture the semantic meaning of each token.
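To make the lookup concrete, here is a minimal sketch of an embedding layer as a plain table indexed by token id. The toy vocabulary, the vector size of 8, and the helper names are assumptions for illustration; in a trained model the table values are learned rather than random.

```python
import random

def build_embedding_table(vocab_size, d_model, seed=0):
    """Create a vocab_size x d_model table of small random values.

    Assumption: random placeholders stand in for learned weights.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_model)]
            for _ in range(vocab_size)]

def embed(token_ids, table):
    """Return one embedding vector per token id (a simple row lookup)."""
    return [table[i] for i in token_ids]

# Hypothetical toy vocabulary for the running example.
vocab = {"de": 0, "quién": 1, "es": 2}
table = build_embedding_table(vocab_size=len(vocab), d_model=8)

token_ids = [vocab[t] for t in ["de", "quién", "es"]]
embedded = embed(token_ids, table)  # 3 tokens, each an 8-dimensional vector
```

The result is a matrix with one row per token, which is the shape every later step in the encoder works on.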

Positional Encoding

Since Transformers lack the recurrence mechanism found in RNNs, they use positional encodings added to the input embeddings to convey the position of each token within the sequence.

Researchers proposed using a combination of sine and cosine functions to generate positional vectors, making this encoding method adaptable to sentences of any length. Each dimension of these positional vectors is represented by unique frequencies and offsets of sine and cosine waves, with values ranging from -1 to 1, effectively encoding the position of each token.
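As a rough sketch of the sine and cosine scheme, the function below fills even dimensions with a sine and odd dimensions with a cosine of the same frequency, using the base of 10000 from the original paper. The function names and the tiny sizes are illustrative assumptions.

```python
import math

def positional_encoding(seq_len, d_model):
    """Build a seq_len x d_model matrix of sinusoidal position vectors."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings, pe):
    """Add the position vector to each token embedding, element by element."""
    return [[e + p for e, p in zip(e_row, p_row)]
            for e_row, p_row in zip(embeddings, pe)]

pe = positional_encoding(seq_len=4, d_model=8)
# Position 0 alternates sin(0) = 0.0 and cos(0) = 1.0 across its dimensions.
```

Because the values depend only on the position index and the dimension, the same function works for a sequence of any length.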

2. Stack of Encoder Layers

The Transformer encoder contains a stack of identical layers. Each encoder layer converts the input sequences into an abstract representation that keeps information from the whole sequence.

Each layer comprises two sub-modules:

- A multi-headed attention mechanism that allows the model to focus on different parts of the input sequence simultaneously.

- A fully connected feed-forward network that processes the output of the attention mechanism.

Additionally, residual connections are incorporated around each sublayer, followed by layer normalization, to improve the flow of gradients and stabilize the training process.

2.1. Multi-Headed Self-Attention Mechanism

In the encoder, the multi-headed attention mechanism uses self-attention, which allows the model to relate each word in the input to other words. For example, it can connect the word “de” with “quién”.

This mechanism allows the encoder to pay attention to multiple parts of the input sequence as it processes each token.

It computes attention scores based on three components:

- Query: A vector that represents the token currently being processed, that is, the token asking which other tokens are relevant to it.

- Key: A vector corresponding to each word or token in the input sequence, against which the query is compared.

- Value: Vectors that are associated with every key and are used to create the output of the attention layer. When a query and key have a high attention score, the corresponding value is emphasized in the output.

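Instead of performing a single attention function, queries, keys, and values are linearly projected multiple times (h times), each time with a different learned projection. Each of these projections goes through the attention mechanism in parallel, producing one lower-dimensional output per head. The h outputs are then concatenated and passed through a final linear projection.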

This approach allows the model to simultaneously focus on different parts of the input sequence, capturing complex relationships and improving the overall contextual understanding.
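The following is a minimal sketch of the multi-head idea, assuming randomly initialised projection matrices in place of learned ones and plain Python lists in place of a tensor library. All helper names are hypothetical. Each head projects the input into a smaller space, runs attention there, and the head outputs are concatenated and projected once more.

```python
import math
import random

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for one head."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    return matmul([softmax(row) for row in scores], v)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, h, rng):
    """Run h attention heads over x and merge their outputs.

    Assumption: projection weights are random stand-ins for learned ones.
    """
    d_model = len(x[0])
    d_head = d_model // h
    heads = []
    for _ in range(h):
        w_q = random_matrix(d_model, d_head, rng)
        w_k = random_matrix(d_model, d_head, rng)
        w_v = random_matrix(d_model, d_head, rng)
        heads.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the head outputs position by position.
    concat = [sum((head[t] for head in heads), []) for t in range(len(x))]
    w_o = random_matrix(d_model, d_model, rng)  # final output projection
    return matmul(concat, w_o)

rng = random.Random(0)
x = random_matrix(3, 8, rng)              # 3 tokens, d_model = 8
out = multi_head_attention(x, h=2, rng=rng)
```

The output has the same shape as the input (3 tokens by 8 dimensions), which is what lets layers be stacked on top of each other.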

2.2. Matrix Multiplication (MatMul) - Dot Product of Query and Key

After the query, key, and value vectors are processed through a linear layer, the next step involves performing matrix multiplication between the queries and keys. This dot product operation results in the creation of a score matrix.

The score matrix determines the emphasis each word should place on other words within the sequence. Each word is assigned a score relative to every other word in the same sequence. A higher score indicates a greater focus on that word, thereby capturing the contextual relationships between words.

2.3. Scaling the Attention Scores

To ensure stable gradients and prevent excessively large values, the attention scores are scaled down by dividing them by the square root of the dimension of the query and key vectors. This step helps maintain numerical stability and improves the performance of the model by mitigating the impact of large values resulting from the dot product operations.

2.4. Applying Softmax to the Scaled Scores

Next, a softmax function is applied to the scaled scores to convert them into attention weights. This transformation produces probability values ranging from 0 to 1. The softmax function highlights higher scores while reducing the impact of lower ones, thereby enhancing the model's capability to determine which words should receive more focus and attention.
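A small sketch of softmax on one row of scaled scores is shown below. Subtracting the maximum before exponentiating is a common stability trick added here as an assumption; it does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of scores into weights that are positive and sum to 1."""
    m = max(scores)  # shift by the max so exp() never overflows
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

weights = softmax([2.0, 1.0, 0.1])
# Roughly [0.659, 0.242, 0.099]: the largest score takes most of the weight.
```

Note how a score gap of only 1.0 between the first two entries becomes a weight ratio of almost 3 to 1.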

2.5. Combining Softmax Results with the Value Vector

The next step in the attention mechanism involves multiplying the weights obtained from the softmax function by the value vectors, resulting in an output vector. This operation ensures that only the words with high softmax scores are emphasized and preserved. The resulting output vector is then passed through a linear layer for further processing, refining the model's understanding and contextual representation of the input sequence.
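Putting the dot product, scaling, softmax, and value-weighting steps together gives scaled dot-product attention. The sketch below is a simplification: it uses the input matrix directly as queries, keys, and values, and omits the learned linear layers before and after. The helper names and toy numbers are assumptions.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                                 # query-key dot products
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]   # divide by sqrt(d_k)
    weights = [softmax(row) for row in scaled]                       # attention weights
    output = matmul(weights, v)                                      # weighted sum of values
    return output, weights

# Three toy token vectors with d_k = 4.
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]
output, weights = scaled_dot_product_attention(x, x, x)
```

Each row of `weights` sums to 1 and says how much one token draws from every other token; each row of `output` is the corresponding blend of value vectors.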

2.6. Normalization and Residual Connections

Each sub-layer in an encoder layer is wrapped in a residual connection followed by normalization: the output of the sub-layer is added to its input, and the sum is then passed through layer normalization.

This helps mitigate the vanishing gradient problem, allowing for the construction of deeper models. This normalization and residual connection process is also applied after the Feed-Forward Neural Network, ensuring stability and efficiency throughout the model.
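The "add and normalize" step can be sketched as follows. This is a simplified version that leaves out the learned gain and bias parameters that layer normalization normally carries; the function names and the epsilon value are assumptions.

```python
import math

def layer_norm(vec, eps=1e-5):
    """Normalize one vector to zero mean and (approximately) unit variance."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]

def add_and_norm(sublayer_input, sublayer_output):
    """Residual connection followed by layer normalization, per position."""
    return [layer_norm([a + b for a, b in zip(x_row, y_row)])
            for x_row, y_row in zip(sublayer_input, sublayer_output)]

x = [[1.0, 2.0, 3.0, 4.0]]              # input to the sub-layer
sub_out = [[0.5, -0.5, 0.5, -0.5]]      # what the sub-layer produced
normed = add_and_norm(x, sub_out)
```

Because the input is added back before normalizing, the gradient always has a direct path around the sub-layer, which is what makes deep stacks trainable.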

2.7. Feed-Forward Neural Network

The journey of the normalized residual output continues as it passes through a pointwise feed-forward network, a crucial phase for additional refinement.

Think of this network as two linear layers with a ReLU activation in between, serving as a bridge. After processing through this structure, the output is combined with the input of the pointwise feed-forward network via residual connections.

This integration is followed by another round of normalization, ensuring that the output is well-adjusted and ready for the next steps. This repeated process of normalization and residual merging helps maintain stability and enhances the model's learning capabilities.
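Here is a minimal sketch of the pointwise feed-forward network: two linear layers with a ReLU in between, applied to each position independently. The weights are random placeholders and the sizes (4 and 16) are toy assumptions; the original paper uses 512 and 2048.

```python
import random

def linear(x, w, b):
    """Apply y = x @ w + b to every row (position) of x."""
    return [[sum(xi * wi for xi, wi in zip(row, col)) + bj
             for col, bj in zip(zip(*w), b)]
            for row in x]

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def feed_forward(x, w1, b1, w2, b2):
    """Expand to d_ff, apply ReLU, then project back to d_model."""
    return linear(relu(linear(x, w1, b1)), w2, b2)

rng = random.Random(0)
d_model, d_ff = 4, 16
w1 = [[rng.uniform(-0.5, 0.5) for _ in range(d_ff)] for _ in range(d_model)]
b1 = [0.0] * d_ff
w2 = [[rng.uniform(-0.5, 0.5) for _ in range(d_model)] for _ in range(d_ff)]
b2 = [0.0] * d_model

x = [[1.0, 0.5, -0.5, 2.0],
     [0.0, 1.0, 1.0, 0.0]]
ffn_out = feed_forward(x, w1, b1, w2, b2)
```

"Pointwise" means the same weights are used at every position and no information moves between positions here; mixing across tokens is the job of attention.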

3. Encoder’s Output

The output of the final encoder layer is a set of vectors, each capturing a rich contextual understanding of the input sequence. This output then serves as the input for the decoder in a Transformer model.

Imagine building a tower, where you can stack up N encoder layers. Each layer in this stack has the opportunity to explore and learn different aspects of attention, much like accumulating layers of knowledge.

The Decoder

The decoder's primary function is to generate text sequences. Like the encoder, the decoder consists of a similar set of sub-layers. It includes two multi-headed attention layers and a pointwise feed-forward layer. Additionally, it utilizes residual connections and layer normalization after each sub-layer to ensure stability and enhance performance.

These components function similarly to the encoder's layers, but with a key difference: each multi-headed attention layer in the decoder has a distinct role. The final step in the decoder's process involves a linear layer that acts as a classifier, followed by a softmax function to calculate the probabilities of different words.

The Transformer decoder is designed to generate output by decoding the encoded information step by step. This is why it works in an autoregressive manner, beginning with a start token. It uses a list of previously generated outputs as its inputs together with the encoder's outputs enriched with attention information from the initial input.

This sequential decoding process continues until the decoder generates a token that signals the end of its output creation.

1. Preprocessing - Output Embeddings and Positional Encoding

The decoder starts similarly to the encoder, where the input first passes through an embedding layer, converting tokens into vectors.

Following the embedding layer, the input goes through a positional encoding layer, which adds positional information to the embeddings. These positional embeddings are then fed into the first multi-head attention layer of the decoder, where the attention scores specific to the decoder’s input are computed.

2. Stack of Decoder Layers

The decoder is composed of a stack of identical layers (6 in the original Transformer model). Each layer has three main sub-components: masked self-attention, encoder-decoder attention, and a feed-forward network.

2.1 Masked Self-Attention Mechanism

This mechanism is similar to the self-attention mechanism in the encoder but with a key difference: it prevents positions from attending to subsequent positions. This ensures that each word in the sequence is not influenced by future tokens.

For instance, when computing the attention scores for the output word "is" in "Whose is it?", it is crucial that it does not consider "it," which appears later in the sequence. This masking maintains the autoregressive property of the decoder, ensuring that predictions are made based only on previously generated tokens.
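A minimal sketch of the masking step, under the common assumption that blocked positions are set to negative infinity before the softmax so that their weight becomes exactly zero. The function names and the all-zero scores are illustrative.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i is allowed to attend to position j."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Softmax over each row, ignoring positions the mask blocks."""
    weights = []
    for row, mask_row in zip(scores, mask):
        masked = [s if keep else -math.inf for s, keep in zip(row, mask_row)]
        m = max(masked)  # always finite: a position can attend to itself
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) is 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

tokens = ["Whose", "is", "it", "?"]
scores = [[0.0] * len(tokens) for _ in tokens]  # equal raw scores everywhere
weights = masked_softmax(scores, causal_mask(len(tokens)))
# The first position can only see itself; the second splits its weight
# evenly over the first two positions and puts zero on later ones.
```

With equal raw scores, the effect of the mask is easy to read off: each row spreads its weight only over the current and earlier positions.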

2.2 Encoder-Decoder Multi-Head Attention (Cross Attention)

In the second multi-headed attention layer of the decoder, the outputs from the encoder serve as the keys and values, while the queries come from the first multi-headed attention layer of the decoder. This configuration allows the decoder to align its input with the encoder's, enabling it to focus on the most relevant parts of the encoder's output.

Following this cross-attention mechanism, the output is further processed through a pointwise feedforward layer, refining the information and enhancing the overall representation.
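The sketch below illustrates cross-attention for a single head: queries come from the decoder, while keys and values both come from the encoder output. The learned projections are omitted for brevity, and the toy vectors and helper names are assumptions chosen so the alignment is easy to see.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_states, encoder_output):
    """Queries from the decoder; keys and values from the encoder."""
    d_k = len(encoder_output[0])
    keys_t = [list(col) for col in zip(*encoder_output)]
    scores = matmul(decoder_states, keys_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, encoder_output), weights

encoder_output = [[1.0, 0.0, 0.0, 0.0],   # 3 source positions
                  [0.0, 1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0]]
decoder_states = [[4.0, 0.0, 0.0, 0.0],   # 2 target positions
                  [0.0, 0.0, 4.0, 0.0]]
context, weights = cross_attention(decoder_states, encoder_output)
```

The weight matrix has one row per target position and one column per source position, so it does not need to be square: here the first target position attends mostly to the first source position and the second to the third.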

2.3 Feed-Forward Neural Network

The normalized residual output is then passed through a pointwise feed-forward network, which consists of two linear layers with a ReLU activation in between. This network provides additional refinement to the output. The processed output is then combined with the input of the feed-forward network via residual connections, followed by another round of normalization to ensure stability and synchronization for subsequent steps.

2.4 Output probabilities with Linear Classifier and Softmax

The data's journey through the transformer model culminates in its passage through a final linear layer, functioning as a classifier. The size of this classifier corresponds to the total number of classes involved, which equates to the number of words in the vocabulary. For example, if there are 1000 distinct words, the classifier's output will be an array with 1000 elements.

This output is then fed into a softmax layer, transforming it into a range of probability scores between 0 and 1. The highest probability score indicates the model's prediction for the next word in the sequence, with its corresponding index pointing directly to that word.
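As an illustration, the sketch below projects one decoder output vector onto a five-word toy vocabulary, applies softmax, and picks the most likely word. The vocabulary, the hand-picked weights, and the function names are all assumptions; a real model learns these weights and has a far larger vocabulary.

```python
import math

def project_to_vocab(hidden, w_out, b_out):
    """Linear classifier: one logit per vocabulary entry."""
    return [sum(h * w for h, w in zip(hidden, col)) + b
            for col, b in zip(zip(*w_out), b_out)]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["<end>", "Whose", "is", "it", "?"]   # hypothetical toy vocabulary
hidden = [1.0, 0.5]                            # final decoder vector, d_model = 2
w_out = [[0.0, 2.0, 0.5, 0.1, 0.0],            # d_model x vocab_size
         [0.2, 0.0, 0.4, 0.2, 0.0]]
b_out = [0.0] * len(vocab)

logits = project_to_vocab(hidden, w_out, b_out)
probs = softmax(logits)
predicted = vocab[probs.index(max(probs))]     # index of the highest probability
```

The classifier output has exactly one entry per vocabulary word, so the index of the largest probability maps straight back to a word.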

2.5 Normalization and Residual Connections

All these steps (masked self-attention, the encoder-decoder cross-attention, and the feed-forward network) are followed by a normalization step and a residual connection.

This structure ensures stability and efficient gradient flow, allowing the model to train deeper networks effectively.

3. Decoder’s Output

The final layer's output is transformed into a predicted sequence through a linear layer followed by a softmax function, generating probabilities over the entire vocabulary.

The decoder's operational flow incorporates the newly generated output into its list of inputs and proceeds with the decoding process. This cycle continues until the model predicts a specific token, typically the end token, signaling the completion of the sequence.

The token with the highest probability is selected as the next word in the sequence.
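The autoregressive loop can be sketched as below. `toy_next_token_probs` is a hypothetical stand-in for a trained decoder: it ignores the encoder output and simply follows a fixed script, so only the control flow (feed outputs back in, pick the most likely token, stop at the end token) reflects the real process. Always picking the top token is known as greedy decoding.

```python
START, END = "<start>", "<end>"
VOCAB = [END, "Whose", "is", "it", "?"]   # hypothetical toy vocabulary

def toy_next_token_probs(encoder_output, generated):
    """Stand-in for the decoder (assumption): returns a probability per
    vocabulary entry, favouring the next word of a fixed sentence."""
    script = ["Whose", "is", "it", "?", END]
    target = script[min(len(generated) - 1, len(script) - 1)]
    return [0.9 if tok == target else 0.025 for tok in VOCAB]

def greedy_decode(encoder_output, next_token_probs, max_len=10):
    generated = [START]                    # decoding begins with a start token
    while len(generated) - 1 < max_len:    # safety limit on output length
        probs = next_token_probs(encoder_output, generated)
        best = max(range(len(probs)), key=probs.__getitem__)
        token = VOCAB[best]
        if token == END:                   # end token: stop generating
            break
        generated.append(token)            # feed the new token back in
    return generated[1:]                   # drop the start token

translation = greedy_decode(encoder_output=None,
                            next_token_probs=toy_next_token_probs)
# translation == ["Whose", "is", "it", "?"]
```

The `max_len` guard is a practical addition so that the loop terminates even if the model never produces the end token.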

It's important to note that the decoder is not limited to a single layer. It can be structured with multiple (N) layers, each building upon the input from the encoder and the preceding layers. This layered architecture allows the model to diversify its focus and capture various attention patterns across different heads.

Such a multi-layered approach significantly enhances the model’s predictive capabilities, enabling it to develop a more nuanced understanding of the input sequence and extract meaningful attention combinations.

And finally, we get the whole structure.

In Brief

Transformers have not only revolutionized the field of NLP but have also set a new standard for what AI can achieve. The architecture's ability to handle complex language tasks efficiently has opened up a wide range of applications, from conversational agents to advanced machine translation systems.

As research continues, the flexibility and power of Transformer models will undoubtedly drive further breakthroughs, solidifying their role as foundational elements in the evolution of artificial intelligence.